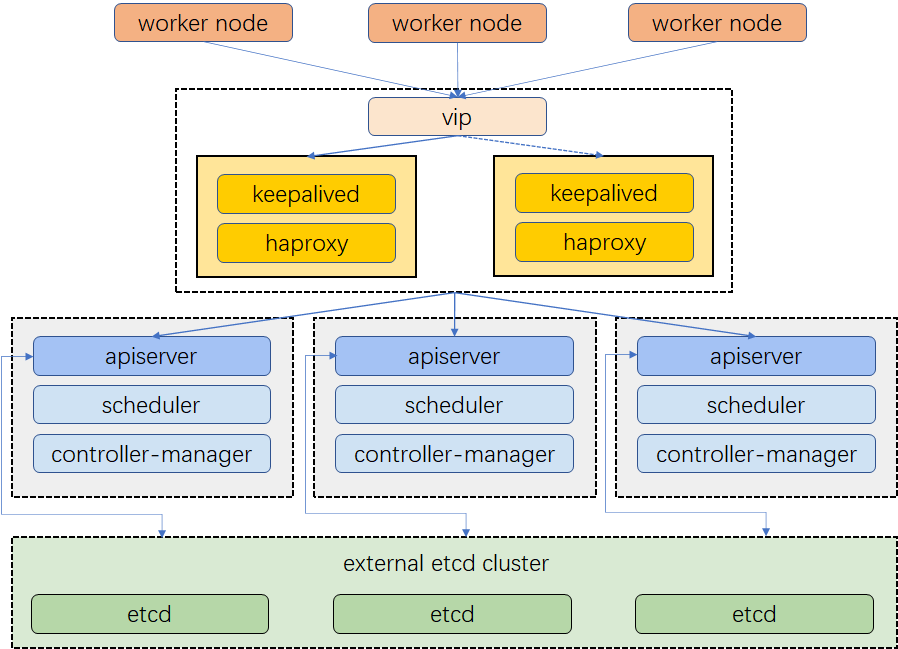

This article describes how to use keepalived + haproxy to load-balance kube-apiserver and build a highly available kubernetes cluster.

- Deployment architecture

- Cluster plan (the example cluster runs EulerOS v2.5)

| IP | Role |
|---|---|
| 192.168.0.100 | vip |
| 192.168.0.61 | lb (keepalived + haproxy) |
| 192.168.0.62 | lb (keepalived + haproxy) |
| 192.168.0.81 | master |
| 192.168.0.82 | master |
| 192.168.0.83 | master |
| 192.168.0.84 | worker |
| 192.168.0.85 | worker |
| 192.168.0.86 | worker |

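- Install keepalived + haproxy on both lb machines (psmisc provides the killall command used by the keepalived health check)

        yum install keepalived haproxy psmisc -y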

- Configure haproxy (the configuration is identical on both lb machines; replace the backend server addresses with your own)

        global
            log /dev/log local0 warning
            chroot /var/lib/haproxy
            pidfile /var/run/haproxy.pid
            maxconn 4000
            user haproxy
            group haproxy
            daemon
            stats socket /var/lib/haproxy/stats

        defaults
            log global
            option httplog
            option dontlognull
            timeout connect 5000
            timeout client 50000
            timeout server 50000

        frontend kube-apiserver
            bind *:6443
            mode tcp
            option tcplog
            default_backend kube-apiserver

        backend kube-apiserver
            mode tcp
            option tcplog
            option tcp-check
            balance roundrobin
            default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
            server kube-apiserver-1 192.168.0.81:6443 check
            server kube-apiserver-2 192.168.0.82:6443 check
            server kube-apiserver-3 192.168.0.83:6443 check

- Start haproxy and enable it at boot

        systemctl restart haproxy
        systemctl enable haproxy
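Before moving on, it can help to confirm that haproxy is really listening on the frontend port. The sketch below is one possible check, assuming the `ss` utility from iproute2 is installed on the lb machines; `listening_on` is a hypothetical helper name introduced here, not part of haproxy.

```shell
# Hypothetical helper: reads `ss -lnt` output on stdin and succeeds
# if any listening socket uses the given port.
listening_on() {
  awk -v port="$1" '
    # Skip the header line; the 4th column is "Local Address:Port".
    NR > 1 { n = split($4, a, ":"); if (a[n] == port) found = 1 }
    END    { exit !found }
  '
}

# Example (run on an lb machine after haproxy has started):
#   ss -lnt | listening_on 6443 && echo "haproxy frontend is listening"
```

If the service does not start at all, running haproxy with `-c -f` followed by the path of the configuration file checks the syntax without starting the proxy.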

- Configure keepalived (fill in the commented fields according to your environment; the configuration differs on each machine)

        global_defs {
            notification_email {
            }
            router_id LVS_DEVEL
            vrrp_skip_check_adv_addr
            vrrp_garp_interval 0
            vrrp_gna_interval 0
        }

        vrrp_script chk_haproxy {
            script "killall -0 haproxy"
            interval 2
            weight 2
        }

        vrrp_instance haproxy-vip {
            state BACKUP
            priority 100
            interface eth0                  # network interface the instance binds to
            virtual_router_id 60
            advert_int 1
            authentication {
                auth_type PASS
                auth_pass 1111
            }
            unicast_src_ip 192.168.0.61     # address of the current machine
            unicast_peer {
                192.168.0.62                # addresses of the other machines in the peer group
            }
            virtual_ipaddress {
                192.168.0.100/24            # vip address
            }
            track_script {
                chk_haproxy
            }
        }
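In this two-node example, only the unicast addresses differ between the lb machines, so the file for 192.168.0.62 can be derived from the one for 192.168.0.61 by swapping the two addresses. The following is a minimal sketch of that idea; `swap_lb_addrs` is a hypothetical helper, and the path /etc/keepalived/keepalived.conf in the usage comment is an assumption (the usual package default), not something the steps above state.

```shell
# Hypothetical helper: reads lb1's keepalived config on stdin and writes
# lb2's config to stdout by swapping the two lb addresses.
# A temporary placeholder keeps the second substitution from undoing the first.
swap_lb_addrs() {
  sed -e 's/192\.168\.0\.61/@LB1@/g' \
      -e 's/192\.168\.0\.62/192.168.0.61/g' \
      -e 's/@LB1@/192.168.0.62/g'
}

# Example (assumed path; run on 192.168.0.61 and copy the result to 192.168.0.62):
#   swap_lb_addrs < /etc/keepalived/keepalived.conf > keepalived-lb2.conf
```

As for how failover is expected to work with these settings: a positive `weight` is added to the priority while the check script succeeds, so a node with a running haproxy advertises 102 and a node whose haproxy has died falls back to 100, which lets the healthy peer take over the vip.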

- Start keepalived and enable it at boot

        systemctl restart keepalived
        systemctl enable keepalived

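Verify that keepalived + haproxy work as expected

(1) Run ip a s on each lb node to see which node currently holds the vip

(2) Stop haproxy on the node that holds the vip: systemctl stop haproxy

(3) Run ip a s on each lb node again and check whether the vip has moved to the other node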
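The check in steps (1) and (3) can also be scripted rather than read by eye. The sketch below assumes the iproute2 `ip` command with its one-line output mode (`-o`); `has_addr` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and
# succeeds if the given IPv4 address is bound to any interface.
has_addr() {
  awk -v ip="$1" '
    # The 4th column looks like "192.168.0.100/24"; compare the part before "/".
    { split($4, a, "/"); if (a[1] == ip) found = 1 }
    END { exit !found }
  '
}

# Example (run on each lb node before and after stopping haproxy):
#   ip -o -4 addr show | has_addr 192.168.0.100 && echo "vip is on $(hostname)"
```

After the test, start haproxy again on the node where it was stopped so that both lb machines are available.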

Deploy the k8s cluster with kubekey

    # Create the configuration file
    ./kk create config --with-kubesphere   # generates a configuration file that includes kubesphere

- Fill in the configuration file (use the values of your own machines; set controlPlaneEndpoint.address to the vip address)

        # config-example.yaml
        apiVersion: kubekey.kubesphere.io/v1alpha1
        kind: Cluster
        metadata:
          name: config-sample
        spec:
          hosts:
          - {name: node1, address: 192.168.0.81, internalAddress: 192.168.0.81, password: EulerOS@2.5}
          - {name: node2, address: 192.168.0.82, internalAddress: 192.168.0.82, password: EulerOS@2.5}
          - {name: node3, address: 192.168.0.83, internalAddress: 192.168.0.83, password: EulerOS@2.5}
          - {name: node4, address: 192.168.0.84, internalAddress: 192.168.0.84, password: EulerOS@2.5}
          - {name: node5, address: 192.168.0.85, internalAddress: 192.168.0.85, password: EulerOS@2.5}
          - {name: node6, address: 192.168.0.86, internalAddress: 192.168.0.86, password: EulerOS@2.5}
          roleGroups:
            etcd:
            - node[1:3]
            master:
            - node[1:3]
            worker:
            - node[4:6]
          controlPlaneEndpoint:
            domain: lb.kubesphere.local
            address: 192.168.0.100   # vip
            port: 6443
          kubernetes:
            version: v1.17.6
            imageRepo: kubesphere
            clusterName: cluster.local
          network:
            plugin: calico
            kube_pods_cidr: 10.233.64.0/18
            kube_service_cidr: 10.233.0.0/18
          registry:
            registryMirrors: []
            insecureRegistries: []
          storage:
            defaultStorageClass: localVolume
            localVolume:
              storageClassName: local
        ---
        apiVersion: v1
        data:
          ks-config.yaml: |
            ---
            local_registry: ""
            persistence:
              storageClass: ""
            etcd:
              monitoring: true
              endpointIps: 192.168.0.81,192.168.0.82,192.168.0.83   # etcd node addresses (node1-node3)
              port: 2379
              tlsEnable: true
            common:
              mysqlVolumeSize: 20Gi
              minioVolumeSize: 20Gi
              etcdVolumeSize: 20Gi
              openldapVolumeSize: 2Gi
              redisVolumSize: 2Gi
            console:
              enableMultiLogin: False  # enable/disable multi login
              port: 30880
            monitoring:
              prometheusReplicas: 1
              prometheusMemoryRequest: 400Mi
              prometheusVolumeSize: 20Gi
              grafana:
                enabled: false
              notification:
                enabled: false
            logging:
              enabled: false
              elasticsearchMasterReplicas: 1
              elasticsearchDataReplicas: 1
              logsidecarReplicas: 2
              elasticsearchMasterVolumeSize: 4Gi
              elasticsearchDataVolumeSize: 20Gi
              logMaxAge: 7
              elkPrefix: logstash
              containersLogMountedPath: ""
              kibana:
                enabled: false
            events:
              enabled: false
            auditing:
              enabled: false
            openpitrix:
              enabled: false
            devops:
              enabled: false
              jenkinsMemoryLim: 2Gi
              jenkinsMemoryReq: 1500Mi
              jenkinsVolumeSize: 8Gi
              jenkinsJavaOpts_Xms: 512m
              jenkinsJavaOpts_Xmx: 512m
              jenkinsJavaOpts_MaxRAM: 2g
              sonarqube:
                enabled: false
                postgresqlVolumeSize: 8Gi
            servicemesh:
              enabled: false
            notification:
              enabled: false
            alerting:
              enabled: false
            metrics_server:
              enabled: false
            weave_scope:
              enabled: false
        kind: ConfigMap
        metadata:
          name: ks-installer
          namespace: kubesphere-system
          labels:
            version: v3.0.0

- Run the deployment

        ./kk create cluster -f config-example.yaml

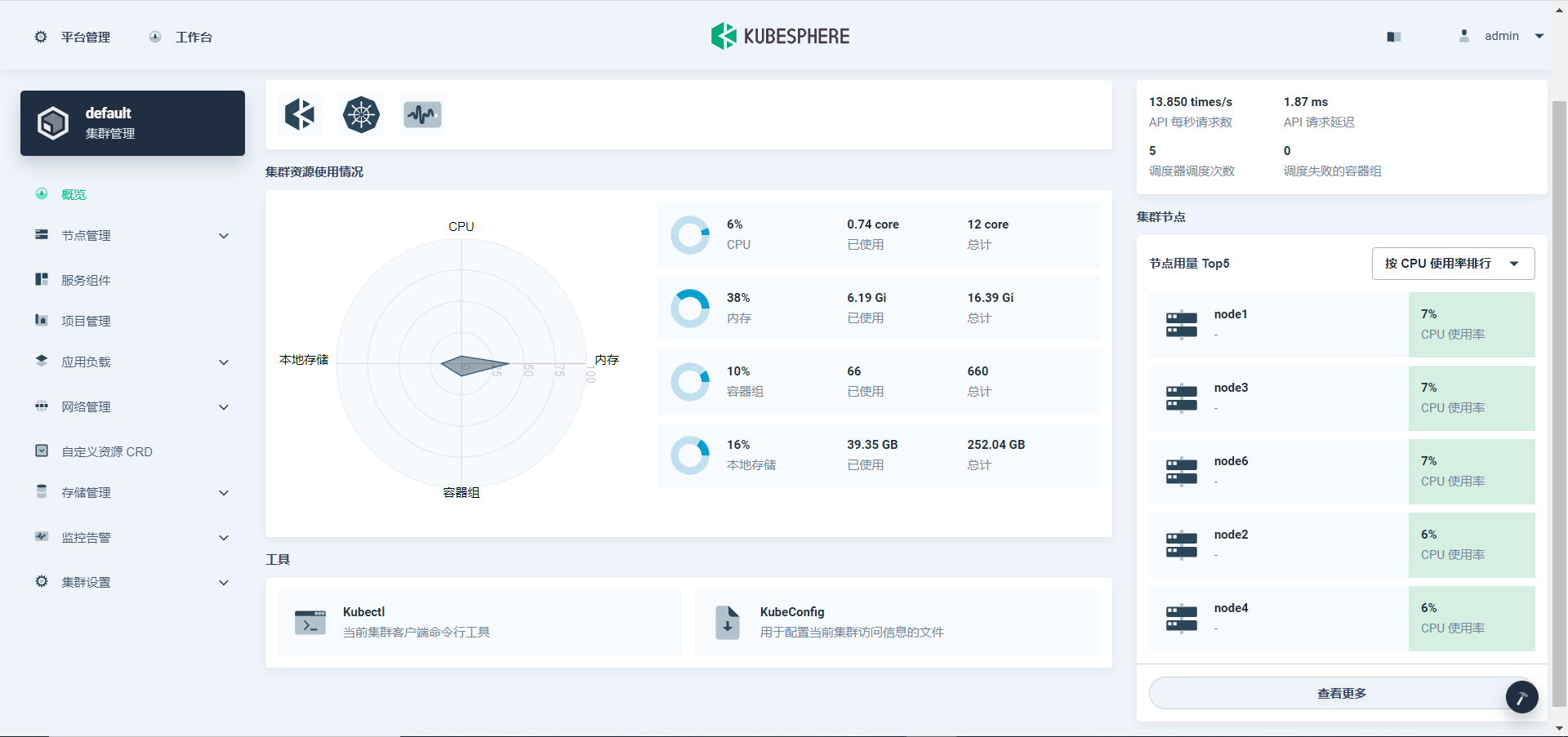

- Wait for the deployment to finish, then log in to the kubesphere console
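Once the installer reports success, the node status gives a quick sanity check that all machines joined through the vip. This is a rough sketch, assuming kubectl is already configured on one of the master nodes; `count_ready_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on
# stdin and prints how many nodes report exactly the "Ready" status.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example (run on a master node):
#   kubectl get nodes --no-headers | count_ready_nodes
```

For the cluster planned above (3 masters and 3 workers), the expected count is 6.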