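KubeSphere 3.0 ships with Istio 1.4.8. Starting with version 1.5, Istio merged all of its internal control-plane components into a single component, istiod, which is a major change.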

The following describes a manual, in-place (hot) upgrade to Istio 1.6.10. This method has the following characteristics:

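Business workloads are not affected: after the upgrade, both versions (1.4.8 and 1.6.10) are installed side by side, and existing business Pods keep using the old Istio version. A workload switches to the new version only after its Pods are restarted. To minimize the impact on traffic, you can leave workloads unrestarted, or restart them during off-peak hours.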

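Data is synchronized automatically: microservice governance and canary release policies are stored in CRDs, so they are not affected. After workloads move to the new version, the policies are synchronized automatically.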

Pre-upgrade steps

- Confirm that the Istio component is enabled

# helm -n istio-system list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

istio istio-system 1 2020-10-19 15:03:04.554414064 +0800 CST deployed istio-1.4.8 1.4.8

istio-init istio-system 2 2020-10-19 15:02:58.123980442 +0800 CST deployed istio-init-1.4.8 1.4.8
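If you want to script this pre-check, here is a minimal sketch. The function name istio_148_installed is hypothetical. It reads `helm -n istio-system list` output on stdin and succeeds only if both the `istio` and `istio-init` releases report app version 1.4.8. It assumes the column layout shown above, with the release name first and the app version last.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) only if the `helm list` output on stdin
# contains both the istio and istio-init releases with APP VERSION 1.4.8.
# Assumes NAME is the first column and APP VERSION the last, as in the sample above.
istio_148_installed() {
  awk '
    $1 == "istio"      && $NF == "1.4.8" { core = 1 }
    $1 == "istio-init" && $NF == "1.4.8" { init = 1 }
    END { exit !(core && init) }
  '
}

# Usage:
#   helm -n istio-system list | istio_148_installed && echo "Istio 1.4.8 found"
```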

- Deploy the BookInfo sample application

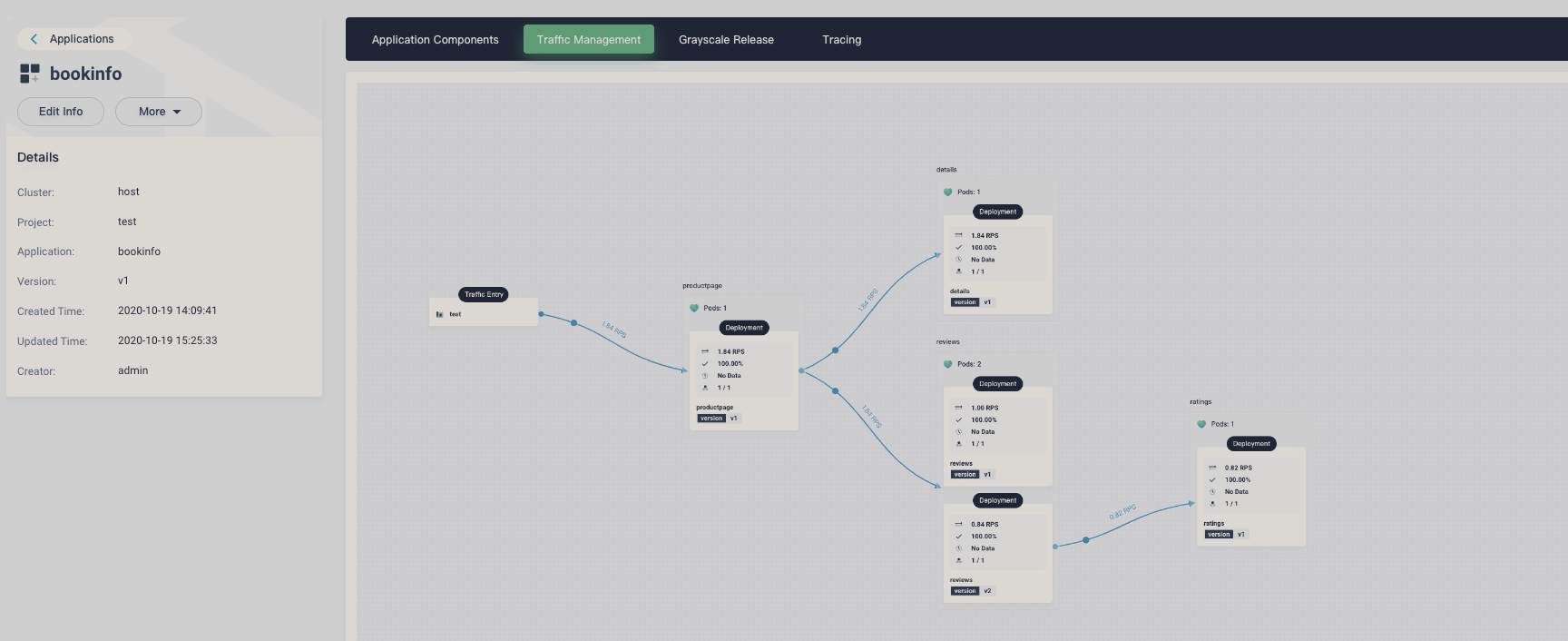

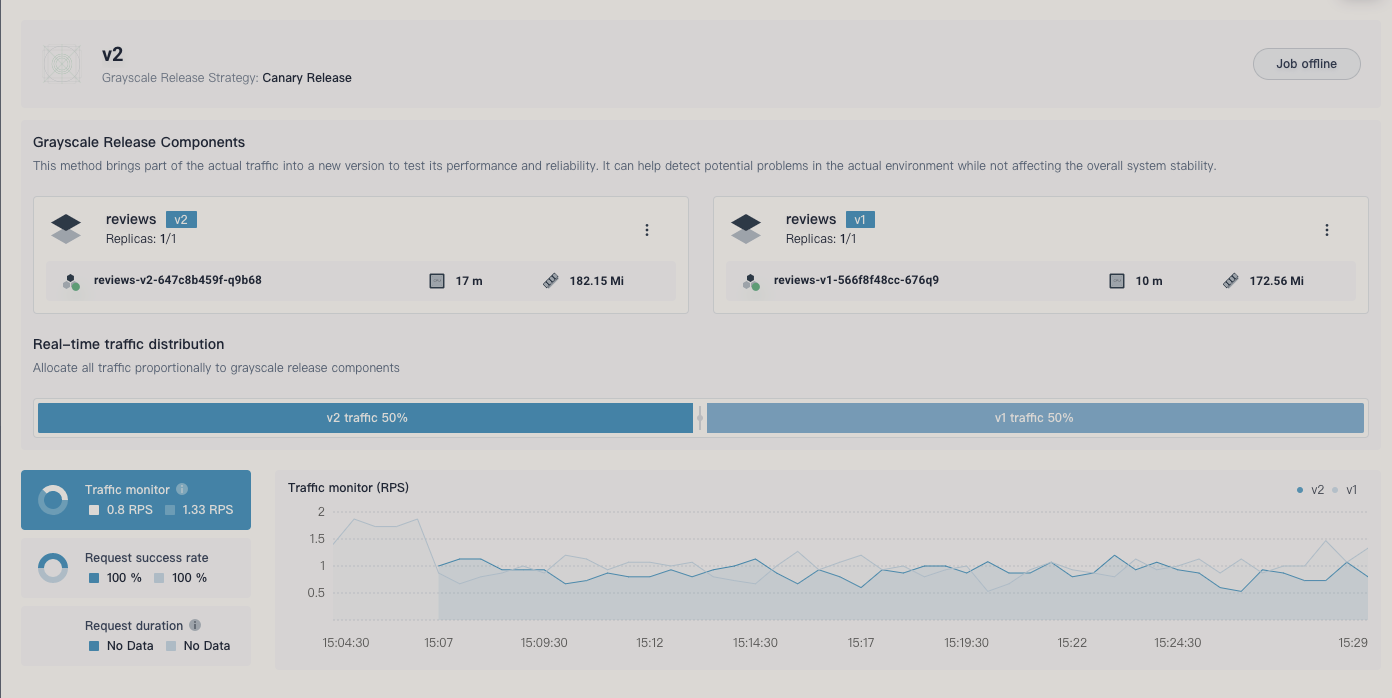

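Keep sending requests to the service to generate traffic, and confirm that the application governance topology graph looks normal. Then publish a canary release of one version, so that you can compare the data after the upgrade.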
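To keep traffic flowing while you check the topology, a simple request loop is enough. The sketch below assumes you know BookInfo's external address, so the URL in the usage line is a placeholder. The function generate_traffic, its arguments and the CURL override variable are hypothetical; the override only exists so the loop can be dry-run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send COUNT GET requests to URL, pausing INTERVAL seconds
# between them, and print how many succeeded.
# The HTTP client can be overridden via CURL (defaults to curl), e.g. for dry runs.
generate_traffic() {
  local url=$1 count=${2:-100} interval=${3:-1}
  local ok=0 i=0
  while [ "$i" -lt "$count" ]; do
    # -f makes HTTP 4xx/5xx responses count as failures; -sS is quiet but still shows errors
    if "${CURL:-curl}" -fsS -o /dev/null "$url"; then
      ok=$((ok + 1))
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "$ok"
}

# Usage (replace the placeholder with your BookInfo gateway or NodePort address):
#   generate_traffic "http://<node-ip>:<node-port>/productpage" 300 1
```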

Download the installation package

curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.6.10 sh -

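Disable istio-galley validation

Before installing the new version, disable Galley's validation. Otherwise the new version's resources fail API validation and the installation cannot proceed: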

# Append the argument - --enable-validation=false to the end of the container's command list

kubectl edit deployment -n istio-system istio-galley
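If you prefer not to edit the Deployment interactively, the same change can be made with a JSON patch. The sketch below assumes that Galley is the first container (index 0) in the Pod template and that it already has a command array. Check both with `kubectl -n istio-system get deploy istio-galley -o yaml` before running it. The function name and the KUBECTL override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append --enable-validation=false to the galley container's
# command via a JSON patch, instead of `kubectl edit`.
# Assumption: galley is container index 0 and already has a `command` array.
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print the command instead of running it.
disable_galley_validation() {
  # "/-" at the end of the path appends to the array
  local patch='[{"op":"add","path":"/spec/template/spec/containers/0/command/-","value":"--enable-validation=false"}]'
  "${KUBECTL:-kubectl}" patch deployment istio-galley -n istio-system --type=json -p "$patch"
}

# Usage:
#   disable_galley_validation
#   kubectl -n istio-system rollout status deployment istio-galley
```

Run the patch only once. A second run appends the flag again.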

Confirm that Galley validation is disabled (its validating webhook configuration should no longer exist):

# kubectl get validatingwebhookconfigurations.admissionregistration.k8s.io istio-galley

Error from server (NotFound): validatingwebhookconfigurations.admissionregistration.k8s.io "istio-galley" not found

Install

Use 1-6-10 as the revision of the new version, so that its control plane is named istiod-1-6-10:

./istio-1.6.10/bin/istioctl install \
  --set hub=istio \
  --set tag=1.6.10 \
  --set addonComponents.prometheus.enabled=false \
  --set values.global.jwtPolicy=first-party-jwt \
  --set values.global.proxy.autoInject=disabled \
  --set values.global.tracer.zipkin.address="jaeger-collector.istio-system.svc:9411" \
  --set values.sidecarInjectorWebhook.enableNamespacesByDefault=true \
  --set values.global.imagePullPolicy=IfNotPresent \
  --set values.global.controlPlaneSecurityEnabled=false \
  --set revision=1-6-10

Wait for the installation to finish:

Detected that your cluster does not support third party JWT authentication. Falling back to less secure first party JWT. See https://istio.io/docs/ops/best-practices/security/#configure-third-party-service-account-tokens for details.

✔ Istio core installed

✔ Istiod installed

- Pruning removed resources 2020-10-10T08:36:44.032602Z warn installer retrieving resources to prune type security.istio.io/v1beta1, Kind=PeerAuthentication: peerauthentications.security.istio.io is forbidden: User "system:serviceaccount:kubesphere-system:ks-installer" cannot list resource "peerauthentications" in API group "security.istio.io" at the cluster scope not found

✔ Installation complete

The message Installation complete indicates that the installation succeeded.

Verify the installation

After the command above finishes, two versions of Istio run side by side: Istio 1.4.8 and Istio 1.6.10.

# kubectl -n istio-system get po

NAME READY STATUS RESTARTS AGE

istio-citadel-7f676f76d7-qtsnf 1/1 Running 0 21m

istio-galley-7b5ffd58fd-wmtsd 1/1 Running 0 8m21s

istio-ingressgateway-8569f8dcb-j8x4l 1/1 Running 0 21m

istio-init-crd-10-1.4.8-lkc8s 0/1 Completed 0 21m

istio-init-crd-11-1.4.8-6qdq9 0/1 Completed 0 21m

istio-init-crd-12-1.4.8-b5nvf 0/1 Completed 0 21m

istio-init-crd-14-1.4.8-wpvbj 0/1 Completed 0 21m

istio-pilot-67fd55d974-xdxhw 2/2 Running 0 21m

istio-policy-668894cffc-hkc9x 2/2 Running 0 21m

istio-sidecar-injector-9c4d79658-dtkrt 1/1 Running 0 21m

istio-telemetry-57fc886bf8-rcswr 2/2 Running 0 21m

istiod-1-6-10-7db56f875b-hwwtk 1/1 Running 0 8m1s

jaeger-collector-76bf54b467-zfz9v 1/1 Running 16 41d

jaeger-es-index-cleaner-1603036500-zmdpt 0/1 Completed 0 15h

jaeger-operator-7559f9d455-gl4tj 1/1 Running 2 41d

jaeger-query-b478c5655-gjh7s 2/2 Running 17 41d

istiod-1-6-10-7db56f875b-hwwtk is the new version.
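This check can also be scripted. The following is a sketch with a hypothetical function name. It reads the `kubectl -n istio-system get po` output shown above on stdin and succeeds only if a Pod whose name starts with istiod-<revision>- is Running with all of its containers ready. It assumes the default column order NAME, READY, STATUS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl -n istio-system get po` output on stdin and
# succeed only if a pod named istiod-<REVISION>-... is Running with all containers ready.
istiod_revision_ready() {
  local rev=$1
  awk -v prefix="istiod-${rev}-" '
    index($1, prefix) == 1 && $3 == "Running" {
      split($2, r, "/")            # READY column, e.g. "1/1"
      if (r[1] == r[2]) found = 1
    }
    END { exit !found }
  '
}

# Usage:
#   kubectl -n istio-system get po | istiod_revision_ready 1-6-10 && echo "istiod 1-6-10 is ready"
```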

Check the injectors

# kubectl get mutatingwebhookconfigurations istio-sidecar-injector-1-6-10

NAME CREATED AT

istio-sidecar-injector-1-6-10 2020-10-19T07:16:11Z

# kubectl get mutatingwebhookconfigurations istio-sidecar-injector

NAME CREATED AT

istio-sidecar-injector 2020-10-19T07:03:05Z

Two injectors now exist and can coexist; by default, the new version's injector is used.

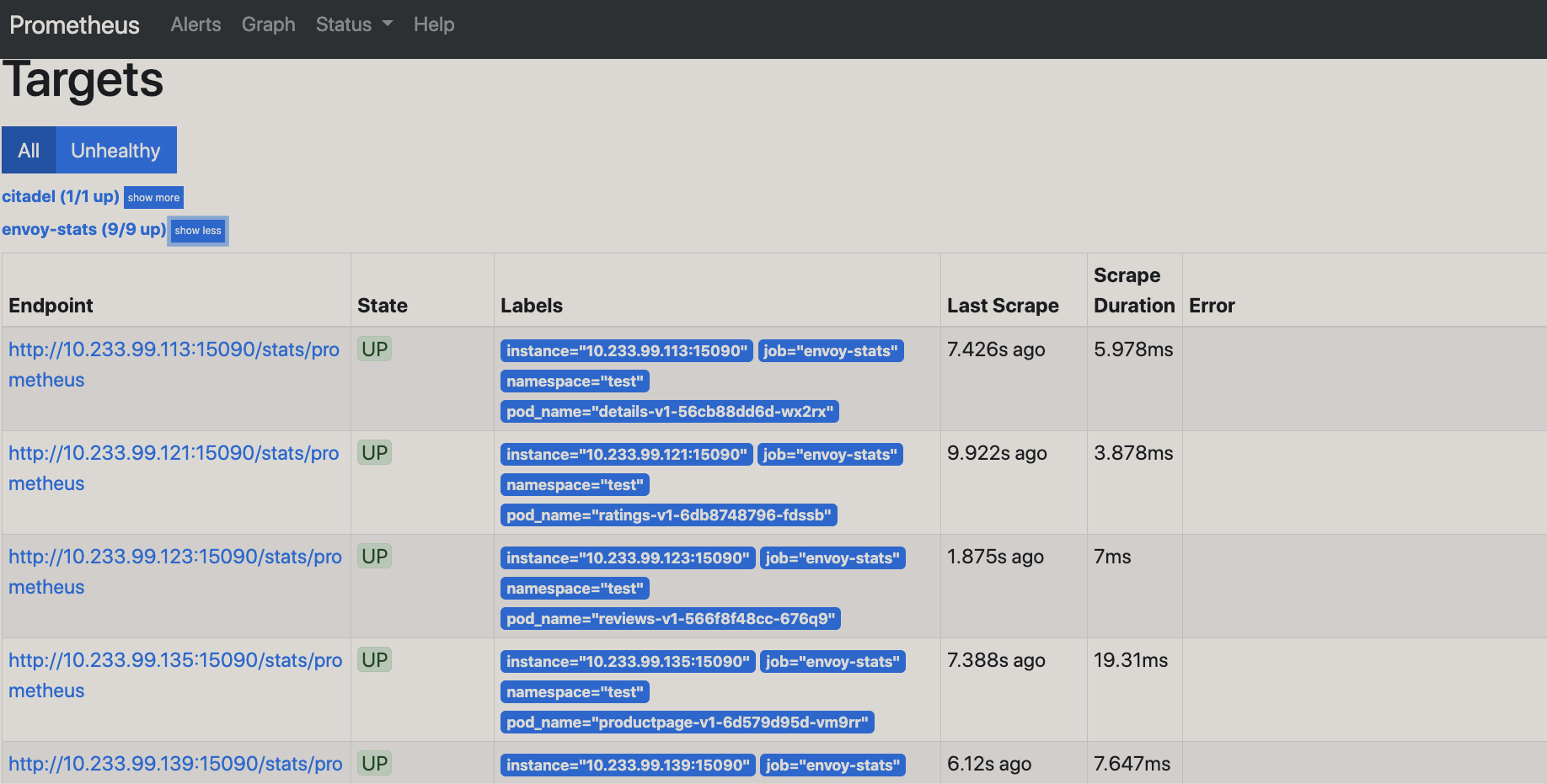

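Update the Prometheus configuration

The traffic governance topology graph is drawn from Prometheus data, so the Prometheus configuration must be updated.

The new Prometheus configuration is compatible with both Istio 1.4.8 and 1.6.10.

Delete the old configuration: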

kubectl -n kubesphere-monitoring-system delete secret additional-scrape-configs

Download the new configuration and recreate the secret:

curl -O https://raw.githubusercontent.com/zackzhangkai/ks-installer/master/roles/ks-istio/files/prometheus/prometheus-additional.yaml

kubectl -n kubesphere-monitoring-system create secret generic additional-scrape-configs --from-file=prometheus-additional.yaml
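Deleting and then recreating the secret leaves a short window in which the secret does not exist. An alternative is to render the secret locally and apply it in one step, as sketched below. This assumes a kubectl version that supports --dry-run=client (1.18 and later; older clients use plain --dry-run). The function name and the KUBECTL override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the additional-scrape-configs secret locally and apply it,
# replacing the delete + create steps with a single idempotent update.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
update_scrape_config_secret() {
  local file=${1:-prometheus-additional.yaml}
  "${KUBECTL:-kubectl}" create secret generic additional-scrape-configs \
    -n kubesphere-monitoring-system --from-file="$file" \
    --dry-run=client -o yaml |
    "${KUBECTL:-kubectl}" apply -n kubesphere-monitoring-system -f -
}

# Usage (after downloading prometheus-additional.yaml as above):
#   update_scrape_config_secret prometheus-additional.yaml
```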

Expose the Prometheus service and check in the web UI whether the configuration has taken effect:

# kubectl -n kubesphere-monitoring-system get svc prometheus-k8s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus-k8s NodePort 10.233.51.22 <none> 9090:31034/TCP 70d

Open http://IP:NodePort in a browser, where IP is a node IP and NodePort is the port mapped to 9090 (31034 above).
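The NodePort is the number after the colon in the PORT(S) column. A tiny sketch (hypothetical function name) extracts it from that value using only parameter expansion. For a service with several ports, it returns the first port's NodePort.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the NodePort from a PORT(S) value such as "9090:31034/TCP".
nodeport_from_ports() {
  local ports=${1%%/*}   # drop everything from the first "/" -> "9090:31034"
  echo "${ports##*:}"    # keep the part after the last colon -> "31034"
}

# Alternatively, ask the API server directly:
#   kubectl -n kubesphere-monitoring-system get svc prometheus-k8s -o jsonpath='{.spec.ports[0].nodePort}'
```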

Check that envoy_stats is working. This entry is added by the new configuration, so confirm that it is healthy.

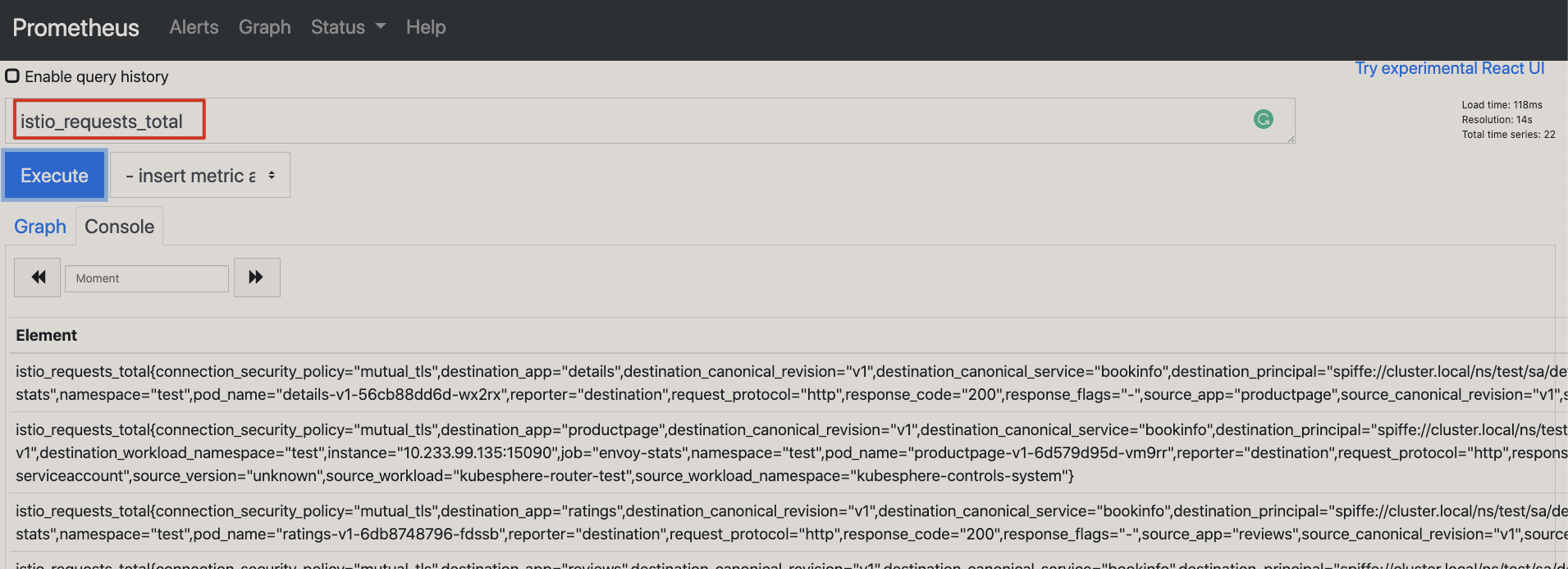

Check that istio_requests_total returns data:

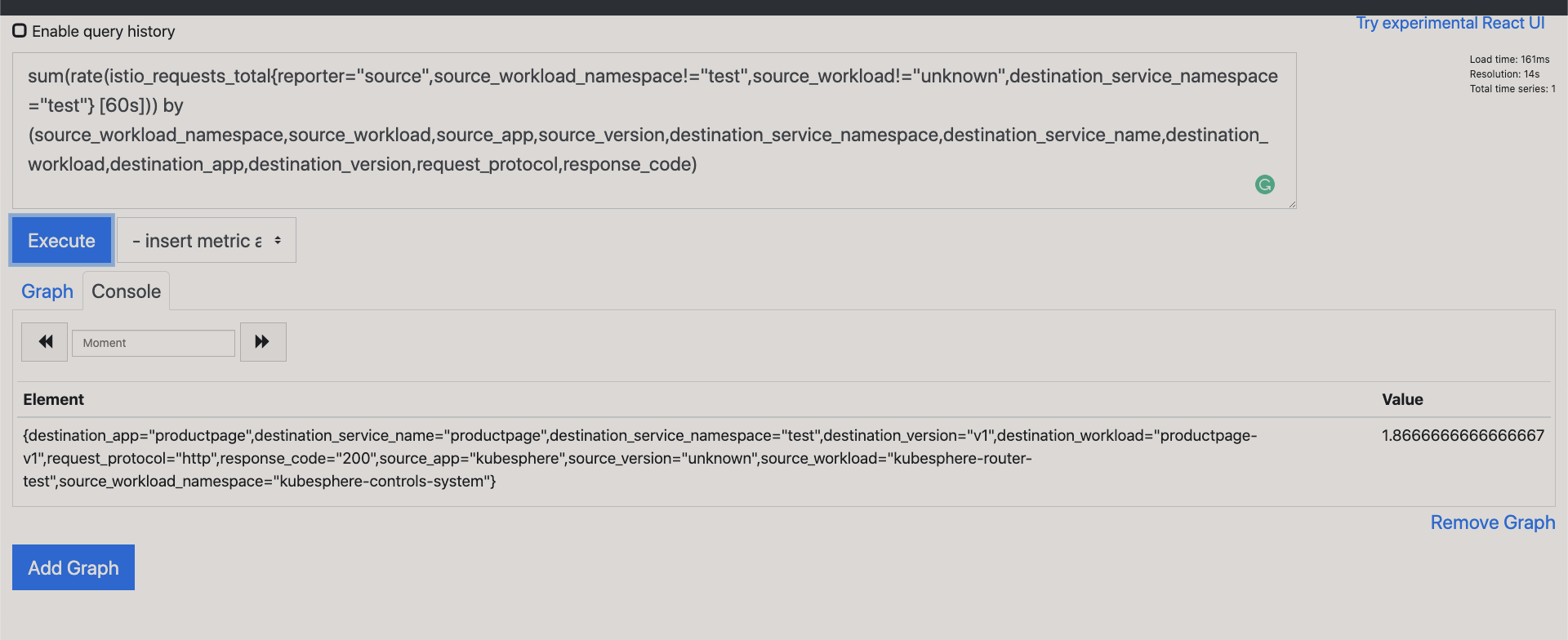

The PromQL query that the traffic topology page runs is:

sum(rate(istio_requests_total{reporter="source",source_workload_namespace!="test",source_workload!="unknown",destination_service_namespace="test"} [60s])) by (source_workload_namespace,source_workload,source_app,source_version,destination_service_namespace,destination_service_name,destination_workload,destination_app,destination_version,request_protocol,response_code)
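The query above hard-codes the namespace test. The sketch below parameterizes it, and the usage line sends it to the Prometheus HTTP API endpoint /api/v1/query through the NodePort exposed earlier. The function name is hypothetical, and <node-ip> and <node-port> are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the topology query above for an arbitrary namespace
# (the original hard-codes "test").
topology_query() {
  local ns=$1
  printf 'sum(rate(istio_requests_total{reporter="source",source_workload_namespace!="%s",source_workload!="unknown",destination_service_namespace="%s"}[60s])) by (source_workload_namespace,source_workload,source_app,source_version,destination_service_namespace,destination_service_name,destination_workload,destination_app,destination_version,request_protocol,response_code)\n' "$ns" "$ns"
}

# Usage against the Prometheus HTTP API (-G sends the url-encoded query as a GET parameter):
#   curl -sG "http://<node-ip>:<node-port>/api/v1/query" \
#     --data-urlencode "query=$(topology_query test)"
```

A non-empty "result" array in the JSON response corresponds to the data seen in the web UI.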

If the query returns data, everything is working.

Upgrade the data plane

The business workloads are still using Istio 1.4.8:

# kubectl -n test get po -oyaml | grep 'image: '

- image: istio/proxyv2:1.4.8

- image: kubesphere/examples-bookinfo-reviews-v2:1.13.0

image: istio/proxyv2:1.4.8

....
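When a namespace has many Pods, the grep output gets long. The sketch below condenses it to the distinct sidecar versions in use. It assumes the sidecar image is istio/proxyv2, as in the output above, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get po -oyaml` (or its `grep 'image: '` output)
# on stdin and print each distinct istio/proxyv2 sidecar version found.
sidecar_versions() {
  grep -o 'istio/proxyv2:[^[:space:]"]*' | cut -d: -f2 | sort -u
}

# Usage:
#   kubectl -n test get po -oyaml | sidecar_versions
```

While the migration is in progress, the output lists both 1.4.8 and 1.6.10.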

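At this point the workloads still use the old Istio version. Because they have not been restarted, they are not affected.

Newly deployed services use the new version.

To move a workload to the new Istio version, restart its Pods. For example, restart productpage-v1: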

kubectl -n test rollout restart deploy productpage-v1

If Tracing is enabled in the project's service governance settings, also restart the corresponding ingress controller in kubesphere-controls-system:

kubectl -n kubesphere-controls-system rollout restart deploy kubesphere-router-test
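To migrate several workloads while limiting disruption, you can restart them one at a time and wait for each rollout to finish. The following is a sketch. The function name, the 300-second timeout and the KUBECTL override are assumptions, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart the given deployments one at a time in NAMESPACE,
# waiting for each rollout to finish before moving on, so only one workload is
# being replaced at any moment. Stops at the first failure.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
restart_one_by_one() {
  local ns=$1 deploy
  shift
  for deploy in "$@"; do
    "${KUBECTL:-kubectl}" rollout restart "deployment/$deploy" -n "$ns" || return 1
    # The 300s timeout is an arbitrary assumption; adjust to your workloads
    "${KUBECTL:-kubectl}" rollout status "deployment/$deploy" -n "$ns" --timeout=300s || return 1
  done
}

# Usage:
#   restart_one_by_one test productpage-v1 reviews-v2
```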

Verify that the Pod now uses the new Istio version:

# kubectl -n test get po productpage-v1-77bbd8f85d-8njf8 -oyaml | grep 'image: '

- image: kubesphere/examples-bookinfo-productpage-v1:1.13.0

image: istio/proxyv2:1.6.10

image: istio/proxyv2:1.6.10

image: istio/proxyv2:1.6.10

image: kubesphere/examples-bookinfo-productpage-v1:1.13.0

image: istio/proxyv2:1.6.10

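Finally, verify that application governance and canary releases work normally. If Tracing is enabled, traffic tracing should also work. The upgrade is now complete.

Note: restarting a service causes a brief interruption, so restart during periods of low traffic.

Notes

After the upgrade, we recommend first upgrading the sidecar of a service where a failure would have little impact: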

kubectl rollout restart deploy xxx -n test

Then check whether that service works normally. If it does not, temporarily disable istiod-1-6-10 as follows:

- Back up the 1.6.10 injector webhook

kubectl get mutatingwebhookconfigurations.admissionregistration.k8s.io istio-sidecar-injector-1-6-10 -oyaml > istio-sidecar-injector-1-6-10.yaml

- Delete the 1.6.10 injector webhook

kubectl delete mutatingwebhookconfigurations.admissionregistration.k8s.io istio-sidecar-injector-1-6-10

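From now on, newly added services use the old version 1.4.8 again.

- Re-enable Istio 1.6.10

To re-enable 1.6.10, restore the injector webhook from the backup. One field must be changed before you apply the YAML, because the admissionregistration.k8s.io/v1 API only accepts None or NoneOnDryRun for sideEffects. (You can also apply the file as-is first to see the resulting error.)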

sed -i 's/sideEffects: Unknown/sideEffects: None/' istio-sidecar-injector-1-6-10.yaml

After this change, apply succeeds:

kubectl apply -f istio-sidecar-injector-1-6-10.yaml
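The disable and re-enable steps can be kept together as a pair of helpers, as sketched below. The function names and the KUBECTL override are hypothetical. Instead of sed -i, the sketch rewrites the file through a temporary copy, because the -i option behaves differently in GNU and BSD sed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers wrapping the disable / re-enable steps above.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
WEBHOOK=istio-sidecar-injector-1-6-10

# Back up the 1.6.10 injector webhook to FILE, then delete it (new Pods fall back to 1.4.8).
disable_rev_injector() {
  local file=${1:-$WEBHOOK.yaml}
  "${KUBECTL:-kubectl}" get mutatingwebhookconfigurations.admissionregistration.k8s.io "$WEBHOOK" -o yaml > "$file" || return 1
  "${KUBECTL:-kubectl}" delete mutatingwebhookconfigurations.admissionregistration.k8s.io "$WEBHOOK"
}

# Change sideEffects from Unknown to None in the backup, then re-apply it.
restore_rev_injector() {
  local file=${1:-$WEBHOOK.yaml}
  # Write to a temp copy and move it back, avoiding GNU/BSD `sed -i` differences
  sed 's/sideEffects: Unknown/sideEffects: None/' "$file" > "$file.tmp" && mv "$file.tmp" "$file" || return 1
  "${KUBECTL:-kubectl}" apply -f "$file"
}

# Usage:
#   disable_rev_injector      # roll back new injections to 1.4.8
#   restore_rev_injector      # re-enable 1.6.10 later
```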

Finally, if you run into problems, don't forget to report them to the community ^-^