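Operating system information

Physical machine, Ubuntu 22

Kubernetes version information

v1.26.12, multi-node.

Container runtime

containerd 1.7

KubeSphere version information

e.g. v4.1.2, offline installation with kk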

What is the problem

Persistently mounting the Podman image cache directory from the maven pod template onto the host produces an error:

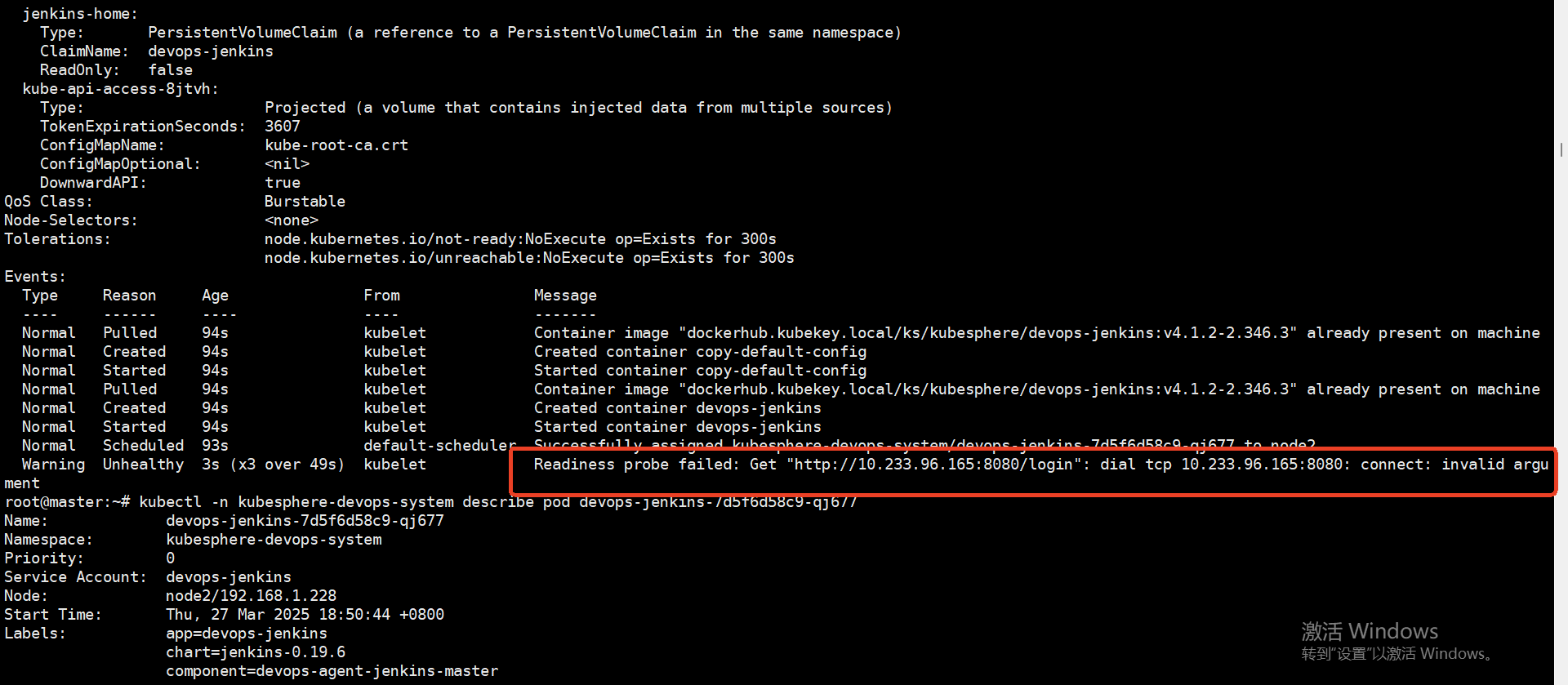

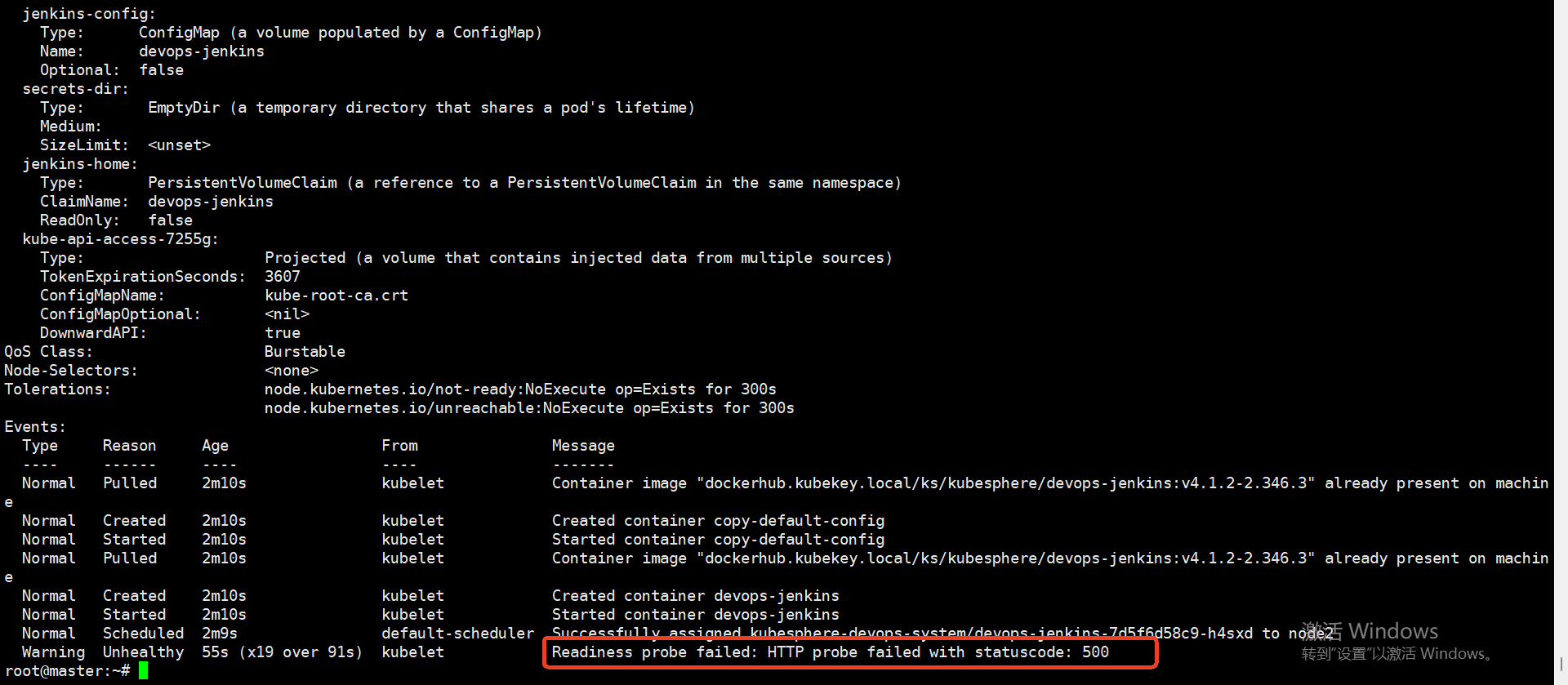

Error in the devops-jenkins log:

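I know the mount is the cause, because the errors above disappear once I remove it. What is the correct way to set up this persistent mount?

Below is my original maven YAML configuration. Could someone advise how to persist the container's image cache directory /var/lib/containers/storage to the host? Do I need to copy the directory out to the host first and then mount it? This is the original configuration, which runs without errors: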

    - name: "maven"
      namespace: "kubesphere-devops-worker"
      label: "maven"
      nodeUsageMode: "EXCLUSIVE"
      idleMinutes: 0
      containers:
      - name: "maven"
        image: "dockerhub.kubekey.local/ks/kubesphere/builder-maven:v3.2.0-podman"
        command: "cat"
        args: ""
        ttyEnabled: true
        privileged: true
        resourceRequestCpu: "8000m"
        resourceLimitCpu: "16000m"
        resourceRequestMemory: "8Gi"
        resourceLimitMemory: "16Gi"
      - name: "jnlp"
        image: "dockerhub.kubekey.local/ks/jenkins/inbound-agent:4.10-2"
        args: "^${computer.jnlpmac} ^${computer.name}"
        resourceRequestCpu: "8000m"
        resourceLimitCpu: "16000m"
        resourceRequestMemory: "8Gi"
        resourceLimitMemory: "16Gi"
      workspaceVolume:
        emptyDirWorkspaceVolume:
          memory: false
      volumes:
      - hostPathVolume:
          hostPath: "/var/run/docker.sock"
          mountPath: "/var/run/docker.sock"
      - hostPathVolume:
          hostPath: "/var/data/jenkins_maven_cache"
          mountPath: "/root/.m2"
      - hostPathVolume:
          hostPath: "/var/data/jenkins_sonar_cache"
          mountPath: "/root/.sonar/cache"
      yaml: |
        spec:
          affinity:
            nodeAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                preference:
                  matchExpressions:
                  - key: node-role.kubernetes.io/worker
                    operator: In
                    values:
                    - ci
          tolerations:
          - key: "node.kubernetes.io/ci"
            operator: "Exists"
            effect: "NoSchedule"
          - key: "node.kubernetes.io/ci"
            operator: "Exists"
            effect: "PreferNoSchedule"
          containers:
          - name: "maven"
            resources:
              requests:
                ephemeral-storage: "1Gi"
              limits:
                ephemeral-storage: "10Gi"
            volumeMounts:
            - name: config-volume
              mountPath: /opt/apache-maven-3.5.3/conf/settings.xml
              subPath: settings.xml
          volumes:
          - name: config-volume
            configMap:
              name: ks-devops-agent
              items:
              - key: MavenSetting
                path: settings.xml
          securityContext:
            fsGroup: 0
            runAsUser: 0
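
For reference, this is roughly the kind of entry I mean by persisting the cache: one more `hostPathVolume` item in the same style as the Maven and Sonar cache entries above. It is only a sketch. The host directory `/var/data/jenkins_podman_cache` is a hypothetical name chosen for illustration, and nothing here confirms that this form avoids the error in the screenshots.

```yaml
# Sketch only: an extra item for the pod template's existing `volumes:` list.
# Assumption: /var/data/jenkins_podman_cache is a hypothetical directory that
# already exists on every node where the agent pod can be scheduled.
volumes:
- hostPathVolume:
    hostPath: "/var/data/jenkins_podman_cache"   # hypothetical host directory
    mountPath: "/var/lib/containers/storage"     # image cache directory inside the maven container
```

Two things worth keeping in mind with a mount like this. A hostPath mounted over `/var/lib/containers/storage` hides whatever the image shipped at that path, so an empty host directory means the container starts with an empty store. Also, the node affinity in the config above is only "preferred", so agent pods may land on different nodes, and each node would then keep its own separate cache.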