CauchyK零SK壹S


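Preface

Is Kubernetes deprecating Docker starting with v1.20? What is actually being deprecated is the dockershim module built into the kubelet. In other words, Docker will no longer be the default container runtime, although it should still be possible to use Docker through an external adapter. If you are interested in the background, see the links below.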

https://kubernetes.io/blog/2020/12/02/dockershim-faq

https://kubernetes.io/blog/2020/12/02/dont-panic-kubernetes-and-docker

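This post sets Docker aside and records how to deploy a Kubernetes cluster with container runtimes other than Docker. Note that only a container runtime that implements the CRI (Container Runtime Interface) standard can be plugged into Kubernetes.

It covers deploying a Kubernetes cluster with each of the following three CRI runtimes: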

- containerd: https://containerd.io

- cri-o: https://cri-o.io

- isula: https://gitee.com/openeuler/iSulad

Cluster deployment

First deploy the container runtime: pick one of containerd, cri-o, or isula. Note that every node in the cluster should use the same container runtime.

Deploying the container runtime
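Because every node should run the same runtime, it can help to check which CRI socket is actually present on each node. The following is a minimal sketch, not part of the original procedure: `first_existing_socket` is a hypothetical helper, and the cri-o and iSulad socket paths in the usage comment are assumed defaults that may differ on your system (the containerd path is the one used in the crictl configuration in this post).

```shell
# Print the first path from the argument list that exists, or return 1 if none does.
# Note: -e only checks existence; use -S instead to insist on a Unix socket.
first_existing_socket() {
  for sock in "$@"; do
    if [ -e "$sock" ]; then
      printf '%s\n' "$sock"
      return 0
    fi
  done
  return 1
}

# Usage (run on each node; the last two paths are assumptions):
# first_existing_socket /run/containerd/containerd.sock /var/run/crio/crio.sock /var/run/isulad.sock
```

Running it on every node and comparing the output is a cheap way to spot a node that ended up with a different runtime.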

containerd

# 1. Install runc

curl -OL https://github.com/opencontainers/runc/releases/download/v1.0.0-rc92/runc.amd64

mv runc.amd64 /usr/local/bin/runc && chmod +x /usr/local/bin/runc

# 2. Install containerd

curl -OL https://github.com/containerd/containerd/releases/download/v1.4.3/containerd-1.4.3-linux-amd64.tar.gz

tar -zxvf containerd-1.4.3-linux-amd64.tar.gz -C /usr/local

curl -o /etc/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/cri/master/contrib/systemd-units/containerd.service

# 3. Configure containerd

mkdir -p /etc/containerd

cat > /etc/containerd/config.toml << EOF

[plugins]

[plugins."io.containerd.grpc.v1.cri"]

sandbox_image = "kubesphere/pause:3.2"

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://registry-1.docker.io"] ## can be replaced with a Docker Hub registry mirror

EOF

systemctl enable containerd && systemctl restart containerd
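After restarting containerd, one quick way to confirm that the file written above contains the intended pause image is to read the value back. This is only a sketch: `toml_string_value` is a hypothetical helper that does a simple line match with sed rather than real TOML parsing, so it assumes the key sits on its own line with a double-quoted value.

```shell
# Print the double-quoted string value of the first line of the form
#   key = "value"
# in the given file. Plain text matching only; TOML tables are not understood.
toml_string_value() {
  file=$1
  key=$2
  sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$file" | head -n 1
}

# Usage:
# toml_string_value /etc/containerd/config.toml sandbox_image
```

The same helper should also work for the `pause_image` setting in `/etc/crio/crio.conf`, since that line has the same `key = "value"` shape.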

# 4. Install crictl

VERSION="v1.19.0"

curl -OL https://github.com/kubernetes-sigs/cri-tools/releases/download/$VERSION/crictl-$VERSION-linux-amd64.tar.gz

sudo tar zxvf crictl-$VERSION-linux-amd64.tar.gz -C /usr/local/bin

rm -f crictl-$VERSION-linux-amd64.tar.gz

# 5. Configure crictl

cat > /etc/crictl.yaml << EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 2

debug: false

pull-image-on-create: false

EOF

cri-o

# 1. Install cri-o

yum install git make

curl -OL https://github.com/cri-o/cri-o/releases/download/v1.18.4/crio-v1.18.4.tar.gz

tar -zxf crio-v1.18.4.tar.gz

cd crio-v1.18.4

mkdir -p /etc/crio /opt/cni/bin /usr/local/share/oci-umount/oci-umount.d /usr/local/lib/systemd/system

make install

echo "fs.may_detach_mounts=1" >> /etc/sysctl.conf

sysctl -p

# 2. Configure cri-o

vi /etc/crio/crio.conf

# Find and modify the following parameters

pause_image = "kubesphere/pause:3.2"

registries = [

"docker.io" ## can be replaced with a Docker Hub registry mirror

]

# 3. Start crio

systemctl enable crio && systemctl restart crio

isula (operating system: openEuler 20.09)

# 1. Install isula

yum install iSulad -y

# 2. Configure isula

vim /etc/isulad/daemon.json

# Modify the following parameters; the "docker.io" entry can be replaced with a Docker Hub registry mirror

"registry-mirrors": [

"docker.io"

]

"pod-sandbox-image": "kubesphere/pause:3.2"

"network-plugin": "cni"

"cni-bin-dir": "/opt/cni/bin"

"cni-conf-dir": "/etc/cni/net.d"

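# 3. Start isula

systemctl enable isulad && systemctl restart isulad

Deploying Kubernetes

Here kubekey is used to deploy the Kubernetes cluster.

# 1. Download kubekey

## For now, kubekey v1.1.0-alpha.1 is used to deploy the Kubernetes cluster. It is a preview release; support for multiple container runtimes will also be included in later official releases.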
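Since kubekey is pointed at the runtime installed in the previous section, you may want to confirm that the expected binaries are on the PATH of each node before running it. A minimal sketch, where `missing_commands` is a hypothetical helper and the list of names to check is up to you:

```shell
# Print each argument that is not found as a command on PATH, one per line.
# Prints nothing when every command is available.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# Usage (for a containerd node, using the tools installed earlier):
# missing_commands runc containerd crictl
```

An empty result means all listed tools were found; any name printed should be installed before continuing.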

curl -OL https://github.com/kubesphere/kubekey/releases/download/v1.1.0-alpha.1/kubekey-v1.1.0-alpha.1-linux-amd64.tar.gz

tar -zxvf kubekey-v1.1.0-alpha.1-linux-amd64.tar.gz

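# 2. Create the configuration file

./kk create config # generates config-sample.yaml in the current directory by default

# 3. Edit the configuration file to match the actual environment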

vi config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 192.168.6.3, internalAddress: 192.168.6.3, password: xxx}
  - {name: node2, address: 192.168.6.4, internalAddress: 192.168.6.4, password: xxx}
  roleGroups:
    etcd:
    - node1
    master:
    - node1
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.17.9
    imageRepo: kubesphere
    clusterName: cluster.local
    containerManager: containerd ## set this to the container runtime deployed earlier: containerd / crio / isula
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
  registry:
    registryMirrors: []
    insecureRegistries: []
  addons: []
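The `containerManager` field is the one setting that ties this file to the runtime installed earlier, so a typo there is worth catching before deployment. The sketch below reads the value back with sed and checks it against the three runtimes covered in this post. Both function names are hypothetical, the match is plain text rather than real YAML parsing, and kubekey itself may accept other values.

```shell
# Print the value of the first "containerManager:" line in a kubekey config file.
container_manager_of() {
  sed -n 's/^[[:space:]]*containerManager:[[:space:]]*\([A-Za-z0-9_-]*\).*/\1/p' "$1" | head -n 1
}

# Succeed only if the value is one of the three runtimes covered in this post.
is_supported_runtime() {
  case "$1" in
    containerd|crio|isula) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
# is_supported_runtime "$(container_manager_of config-sample.yaml)" \
#   || echo "unexpected containerManager value" >&2
```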

# 4. Deploy the cluster

./kk create cluster -f config-sample.yaml --with-kubesphere

# 5. Wait for the deployment to finish, then check the result

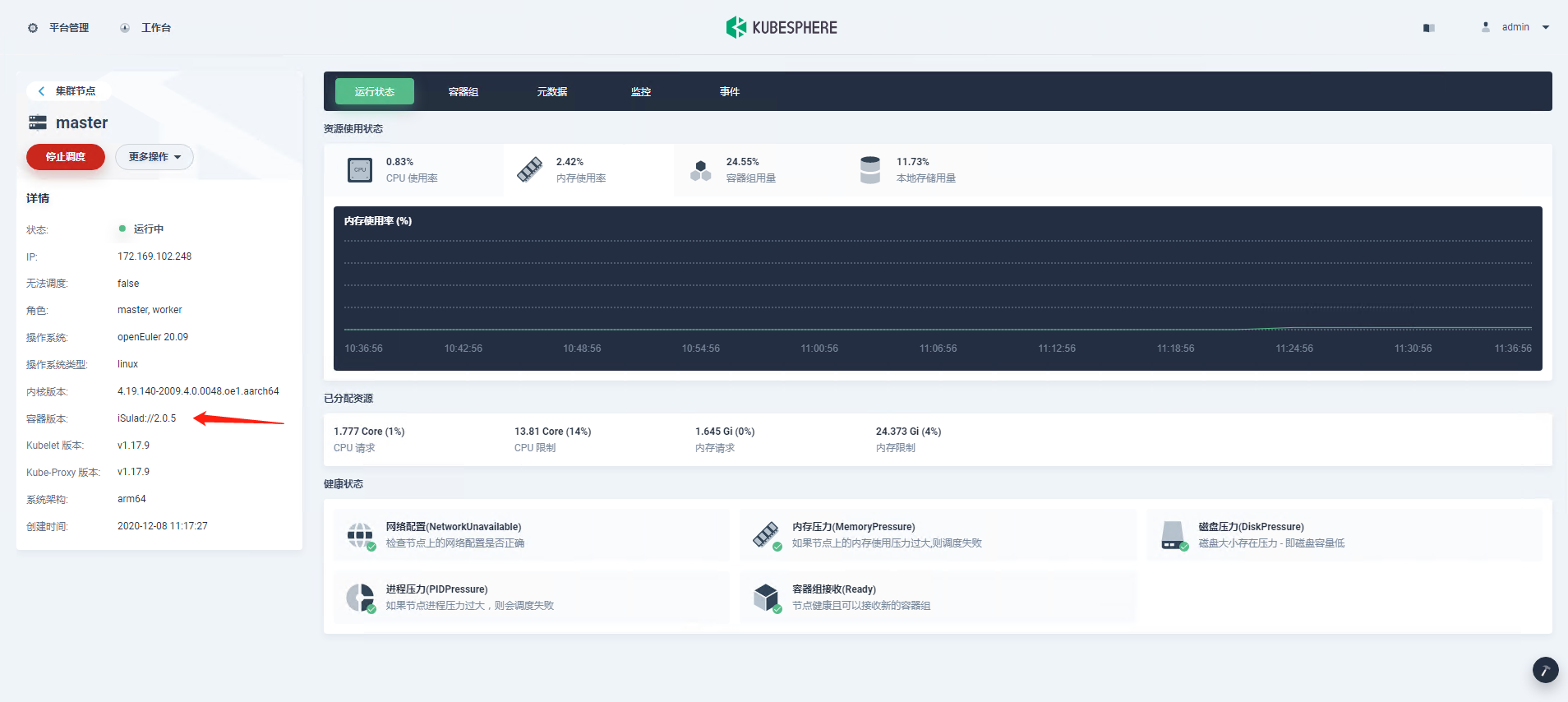

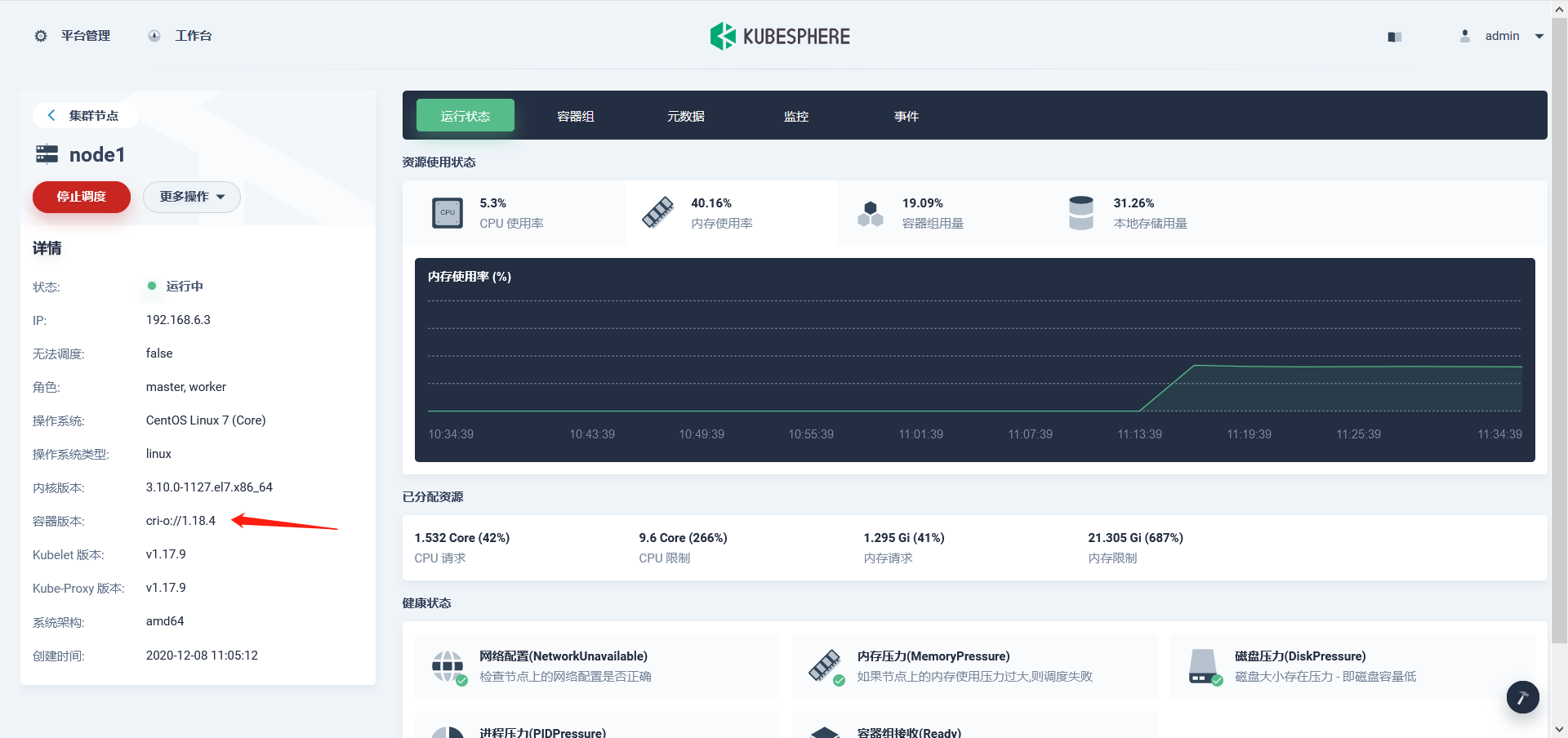

You can log in to the KubeSphere console to see which container runtime the cluster nodes use.

containerd

crio

isula
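The same information is also available from the command line. As a sketch, assuming kubectl is installed and configured against the new cluster (`node_runtimes` is a hypothetical wrapper name), the runtime each node reports can be listed like this:

```shell
# Print "<node name><TAB><runtime>://<version>" for every node in the cluster,
# using the containerRuntimeVersion field from each node's status.
node_runtimes() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}'
}

# Usage:
# node_runtimes
```

`kubectl get nodes -o wide` shows the same value in its CONTAINER-RUNTIME column if a table is easier to read.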