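This document uses KubeKey to build the artifact and deploy the cluster. Follow the official documentation for the full procedure; this document only adds notes on problems encountered while actually following it.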

Building the Artifact

Prepare a machine with internet access to build the artifact.

- Run the following commands to download KubeKey v3.0.7 and extract it:

```bash
# Download from KubeKey's China (cn) zone
export KKZONE=cn
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
```

- On the internet-connected machine, run the following command and paste in the manifest content from the example below. (This example deploys Kubernetes v1.23.15 on Debian 11 and includes the basic images for KubeSphere 3.3.1.)

```bash
vim manifest.yaml
```

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  arches:
  - amd64
  operatingSystems:
  - arch: amd64
    type: linux
    id: debian
    version: "11"
    osImage: Debian GNU/Linux 11 (bullseye)
    repository:
      iso:
        localPath: ./iso/debian-11-amd64.iso
        url:
  kubernetesDistributions:
  - type: kubernetes
    version: v1.23.15
  components:
    helm:
      version: v3.9.0
    cni:
      version: v0.9.1
    etcd:
      version: v3.4.13
    containerRuntimes:
    - type: containerd
      version: 1.6.4
    crictl:
      version: v1.24.0
    ##
    docker-registry:
      version: "2"
    # harbor:
    #   version: v2.4.1
    # docker-compose:
    #   version: v2.2.2
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.5.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v1.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v1.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.34.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0
  registry:
    auths: {}
```

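Notes

- If the exported artifact should contain OS dependency packages (such as conntrack and chrony), set the ISO download URL in `.repository.iso.url` of the `operatingSystems` entry, or download the ISO in advance, put its local path in `localPath`, and remove the `url` entry.
- If you later want KubeKey to build a Harbor registry to push images to, uncomment the `harbor` and `docker-compose` entries.
- In a manifest generated with the default settings, the images are pulled from docker.io.
- Adjust manifest.yaml to your environment so that the exported artifact contains what you need.
- You can download ISO files from https://github.com/kubesphere/kubekey/releases/tag/v3.0.2.
- (Optional) If you already have a cluster, you can run the following KubeKey command in it to generate a manifest file, then edit its contents following the example manifest above.

  ```bash
  ./kk create manifest
  ```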
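If you start from a manifest generated by `./kk create manifest`, its image entries point at docker.io. One way to switch them to the Aliyun mirror used in the example manifest is a `sed` rewrite. This is a sketch under two assumptions: each entry looks like `docker.io/<org>/<name>:<tag>`, and the mirror publishes every image as `registry.cn-beijing.aliyuncs.com/kubesphereio/<name>:<tag>` (as the example list suggests, e.g. `calico/cni` becomes `kubesphereio/cni`). `rewrite_images` is a hypothetical helper name; review the output before exporting.

```bash
# Hypothetical helper: rewrite list items of the form
#   - docker.io/<org>/<name>:<tag>
# to
#   - registry.cn-beijing.aliyuncs.com/kubesphereio/<name>:<tag>
# Only lines starting with "- docker.io/" are changed; every path component
# before the image name (e.g. "calico/") is dropped. Reads files or stdin.
rewrite_images() {
  sed -E 's#^([[:space:]]*- )docker\.io/([^/[:space:]]+/)*#\1registry.cn-beijing.aliyuncs.com/kubesphereio/#' "$@"
}

# Usage:
#   rewrite_images manifest.yaml > manifest.aliyun.yaml
```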

- Generate and export the artifact:

```bash
./kk artifact export -m manifest.yaml -o kubesphere.tar.gz
```
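The export reads the OS package ISO from the `localPath` set in the manifest, so it can help to confirm that the file is in place before running it. The following is a minimal sketch, assuming the simple "key: value" layout of the manifest above and that relative paths resolve against the directory you run `kk` from; `check_iso_paths` is a hypothetical helper, not part of KubeKey.

```bash
# Hypothetical helper: check that every ISO referenced by "localPath:" in a
# KubeKey manifest exists. Assumes one "localPath: <path>" per line and that
# relative paths are resolved against the current directory.
check_iso_paths() {
  local manifest="$1" missing=0 paths path
  # Print only the value after "localPath:", without quotes or whitespace.
  paths=$(sed -nE 's/^[[:space:]]*localPath:[[:space:]]*"?([^"[:space:]]+)"?[[:space:]]*$/\1/p' "$manifest")
  for path in $paths; do
    if [ -f "$path" ]; then
      echo "ok: $path"
    else
      echo "missing: $path" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, from the directory that holds kk and manifest.yaml:
#   check_iso_paths manifest.yaml && ./kk artifact export -m manifest.yaml -o kubesphere.tar.gz
```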

Installing the Cluster Offline

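- Copy the downloaded `kk` binary and the artifact `kubesphere.tar.gz` to the installation node in the offline environment, for example with a USB drive.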
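A large artifact copied over removable media can arrive truncated or corrupted. A simple safeguard, sketched here assuming GNU coreutils `sha256sum` is available on both machines, is to record checksums before copying and verify them on the offline node. `make_checksum`, `verify_checksum` and the `SHA256SUMS` file name are hypothetical choices for this sketch.

```bash
# On the online machine: record SHA-256 checksums of the given files.
make_checksum() {
  sha256sum "$@" > SHA256SUMS
}

# On the offline node, in the directory holding the copied files and
# SHA256SUMS: exits non-zero if any file is missing or differs.
verify_checksum() {
  sha256sum -c SHA256SUMS
}

# Usage:
#   make_checksum kk kubesphere.tar.gz     # before copying; copy SHA256SUMS too
#   verify_checksum                        # after copying
```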

- Create the offline cluster configuration file:

```bash
vim config-offline.yaml
```

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: local-k8s
spec:
  hosts:
  # Add each node name to /etc/hosts manually beforehand
  - {name: local-k8s-test01, address: 172.18.2.80, internalAddress: 172.18.2.80, privateKeyPath: "~/.ssh/id_rsa"}
  # - {name: node1, address: 192.168.0.4, internalAddress: 192.168.0.4, user: root, password: "<REPLACE_WITH_YOUR_ACTUAL_PASSWORD>"}
  roleGroups:
    etcd:
    - local-k8s-test01
    control-plane:
    - local-k8s-test01
    worker:
    - local-k8s-test01
    # Set this host group if kk should deploy the image registry automatically
    # (in production, deploy the registry separately from the cluster so they do not affect each other)
    registry:
    - local-k8s-test01
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.k8s.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.23.15
    imageRepo: kubesphere
    clusterName: k8s.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    # Set this to harbor to have kk deploy Harbor. If it is unset and kk is asked to
    # create an image registry, a Docker registry is used by default.
    #type: harbor
    # For a kk-deployed Harbor or any other registry that requires login, set the
    # registry's auths. Not needed for a Docker registry created by kk.
    # Note: when kk deploys Harbor, set this only after Harbor has started.
    #auths:
    #  "dockerhub.kubekey.local":
    #    username: admin
    #    password: Harbor12345
    # Private registry used during cluster deployment
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
    auths:
      "dockerhub.kubekey.local":
        skipTLSVerify: true
  addons: []
```
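The comment under `hosts` asks for each node name to be added to `/etc/hosts` by hand. A small idempotent helper for that might look like the following. `add_host_entry` is a hypothetical name, and the optional third argument exists only so the logic can be tried on a scratch file before editing `/etc/hosts` as root.

```bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears as a hostname field (field 2 onwards) on a non-comment line.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '
       /^[ \t]*#/ { next }                                   # skip comments
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    echo "exists: $name"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $name"
  fi
}

# On every node, as root, e.g.:
#   add_host_entry 172.18.2.80 local-k8s-test01
```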

- Initialize the nodes (for reference only; adjust to your environment):

```bash
# Install the required dependencies on every cluster node first
apt update
apt install -y chrony curl socat conntrack ebtables ipset ipvsadm nfs-common
echo "options sunrpc tcp_slot_table_entries=128" >> /etc/modprobe.d/sunrpc.conf
echo "options sunrpc tcp_max_slot_table_entries=128" >> /etc/modprobe.d/sunrpc.conf

# Set the time zone
rm -rf /etc/localtime
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# Raise the ulimit values
cat >> /etc/security/limits.conf <<EOF
* soft nproc 1000000
* hard nproc 1000000
* soft nofile 1000000
* hard nofile 1000000
root soft nproc 1000000
root hard nproc 1000000
root soft nofile 1000000
root hard nofile 1000000
EOF
```

Reboot every node so that the settings above take effect.
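The `echo ... >>` and `cat >> ... <<EOF` lines in the initialization script append duplicate entries every time they are run. If you expect to re-run the script, a sketch like the one below appends a line only when an identical line is not already present. `append_once` and `apply_limits` are hypothetical helpers, and exact-line matching is an assumption: a duplicate written with different spacing would not be detected.

```bash
# Hypothetical helper: append LINE to FILE only if FILE has no identical line.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Hypothetical helper: the same eight limits.conf entries as above.
apply_limits() {
  local file="$1" who item kind
  for who in '*' root; do          # quoted so the shell does not glob "*"
    for item in nproc nofile; do
      for kind in soft hard; do
        append_once "$who $kind $item 1000000" "$file"
      done
    done
  done
}

# On each node, as root:
#   append_once "options sunrpc tcp_slot_table_entries=128" /etc/modprobe.d/sunrpc.conf
#   append_once "options sunrpc tcp_max_slot_table_entries=128" /etc/modprobe.d/sunrpc.conf
#   apply_limits /etc/security/limits.conf
```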

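- (Optional) Install a local image registry.

Make sure kk, kubesphere.tar.gz and config-offline.yaml are all in the current directory.

Make sure the deployment node can log in to every node over SSH.

For the full procedure, for example using Harbor as the local registry, see the official documentation.

```bash
./kk init registry -f config-offline.yaml -a kubesphere.tar.gz
```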
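The two preconditions above (all files in one directory, SSH access to every node) can be checked up front. In this sketch the file names come from this document, while `check_files`, `check_ssh` and the host argument are hypothetical. `BatchMode=yes` makes `ssh` fail instead of prompting for a password, so a failure means key-based login is not working yet.

```bash
# Hypothetical helper: confirm the three files kk needs are in the current directory.
check_files() {
  local f rc=0
  for f in kk kubesphere.tar.gz config-offline.yaml; do
    [ -e "$f" ] || { echo "missing: $f" >&2; rc=1; }
  done
  return "$rc"
}

# Hypothetical helper: try a non-interactive SSH login to each host given.
check_ssh() {
  local host rc=0
  for host in "$@"; do
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null \
      || { echo "ssh failed: $host" >&2; rc=1; }
  done
  return "$rc"
}

# Usage:
#   check_files && check_ssh root@172.18.2.80
```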

- 执行以下命令安装 KubeSphere 集群:

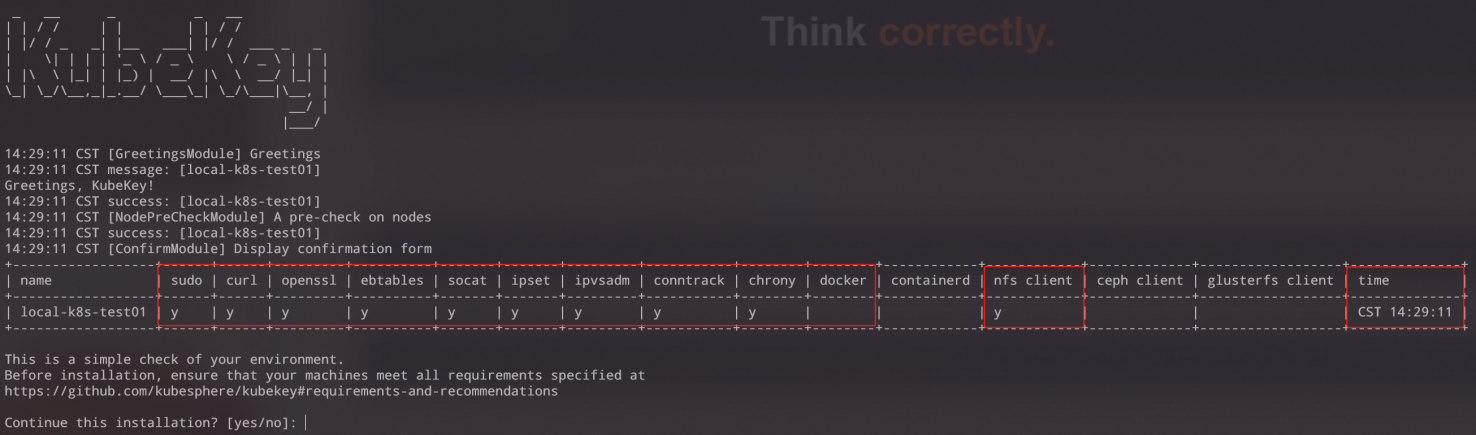

./kk create cluster -f config-offline.yaml -a kubesphere.tar.gz --with-packages

在确认这些依赖和时区、时间都正常后可以输入 yes 进行安装,否则请安装相关依赖后再进行。
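To check the dependencies before answering yes, one option is to confirm that the tools from the node initialization step are on `PATH`. This is a minimal sketch: `check_deps` is a hypothetical helper, and the command names are assumptions about which binaries those packages ship (`chronyd` for chrony, `showmount` for nfs-common). Run it as root, because several of these tools live in `/usr/sbin`, which is usually not on a regular user's `PATH` on Debian.

```bash
# Hypothetical helper: report each given command as ok or missing;
# returns non-zero if any is missing.
check_deps() {
  local cmd rc=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd"
    else
      echo "missing: $cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Usage, as root on each node:
#   check_deps curl socat conntrack ebtables ipset ipvsadm chronyd showmount && date
```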

When the installation finishes, you will see output similar to the following:

```
15:12:51 CST Pipeline[CreateClusterPipeline] execute successfully
Installation is complete.

Please check the result using the command:

kubectl get pod -A
```

Run the following command to check the cluster status:

```bash
kubectl get pod -A
```

```
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-6b5bbdbbcc-lfvlc   1/1     Running   0          4m1s
kube-system   calico-node-54p4b                          1/1     Running   0          4m1s
kube-system   coredns-5bb9675886-fbhjv                   1/1     Running   0          4m4s
kube-system   coredns-5bb9675886-gg9bs                   1/1     Running   0          4m4s
kube-system   kube-apiserver-local-k8s-test01            1/1     Running   0          4m12s
kube-system   kube-controller-manager-local-k8s-test01   1/1     Running   0          4m17s
kube-system   kube-proxy-82v2h                           1/1     Running   0          4m1s
kube-system   kube-scheduler-local-k8s-test01            1/1     Running   0          4m11s
kube-system   nodelocaldns-n6fqg                         1/1     Running   0          4m3s
```

The deployment has succeeded once every Pod is in the Running state.
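Rather than scanning the list by eye, you can filter it. The sketch below uses a hypothetical helper, `not_running`, that reads `kubectl get pod -A --no-headers` output (columns NAMESPACE, NAME, READY, STATUS, ...) and prints every Pod whose STATUS is not `Running` or whose READY count is incomplete. It is slightly stricter than the rule above: a Pod that is `Running` but only `0/1` ready is reported as well.

```bash
# Hypothetical helper: print "namespace/name READY STATUS" for every Pod that
# is not Running or not fully ready; exit status 1 if any were printed.
not_running() {
  awk '{ split($3, r, "/") }                                   # READY "x/y"
       $4 != "Running" || r[1] != r[2] { print $1 "/" $2 " " $3 " " $4; bad = 1 }
       END { exit bad }'
}

# Usage:
#   kubectl get pod -A --no-headers | not_running && echo "all Pods Running"
```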

Attachment:

iso/debian-11-amd64.iso

Download URL: https://github.com/kubesphere/kubekey/releases/download/v3.0.7/debian10-debs-amd64.iso

After downloading, rename the file to debian-11-amd64.iso; it is compatible.
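Downloading and renaming can be scripted as below. The URL and file names are the ones given above; `place_iso` is a hypothetical helper, and it assumes you run it from the directory that contains `manifest.yaml`, so that the file ends up at the manifest's `localPath`.

```bash
# Download step (needs internet access):
#   curl -fLO https://github.com/kubesphere/kubekey/releases/download/v3.0.7/debian10-debs-amd64.iso

# Hypothetical helper: move the downloaded ISO to the path the manifest expects.
place_iso() {
  local src="${1:-debian10-debs-amd64.iso}" dest="${2:-./iso/debian-11-amd64.iso}"
  [ -f "$src" ] || { echo "not found: $src" >&2; return 1; }
  mkdir -p "$(dirname "$dest")"
  mv "$src" "$dest"
}

# Usage: place_iso
```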