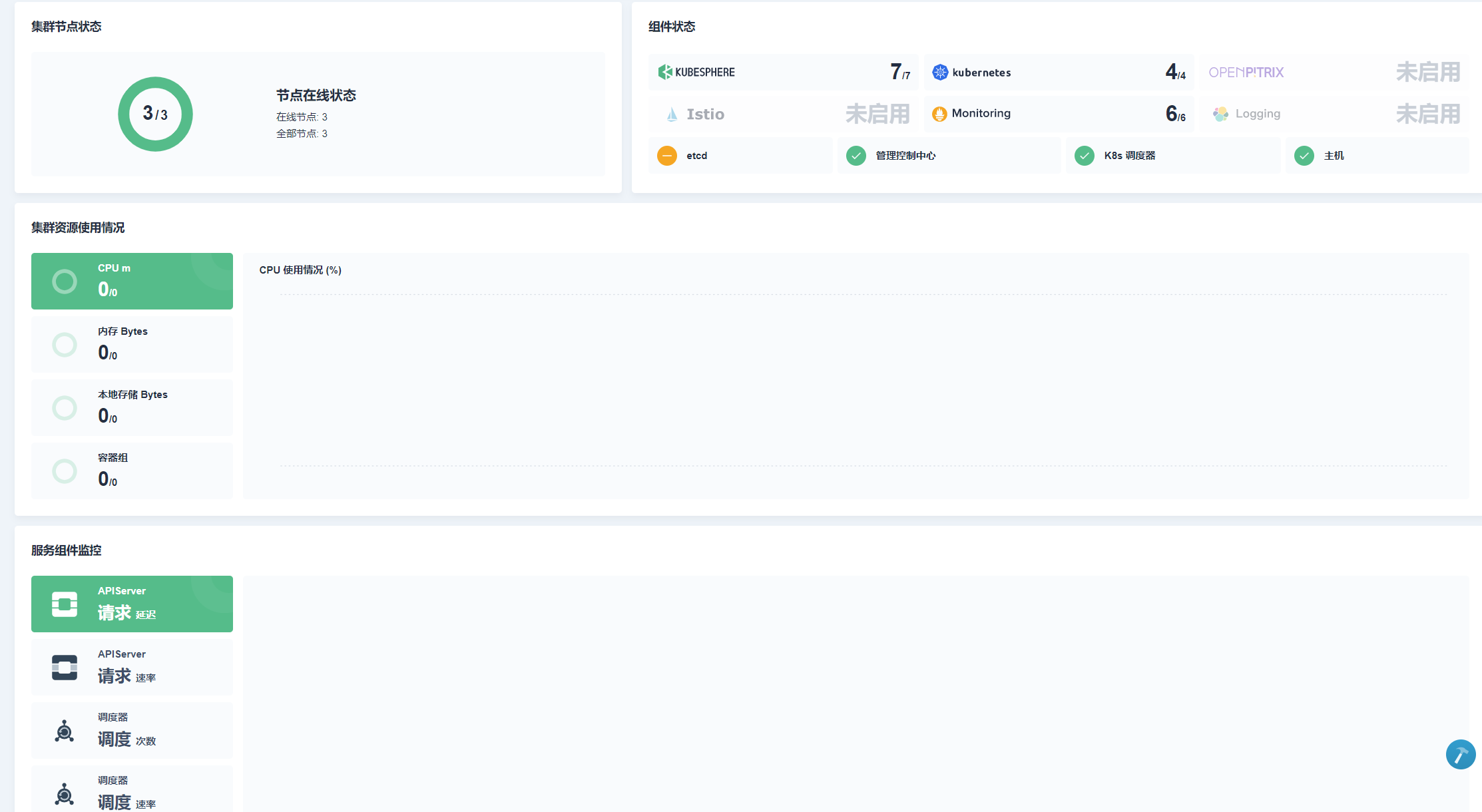

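I reinstalled Kubernetes once again and the minimal installation succeeded, but the monitoring center does not show any CPU or RAM information.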

Checking the Prometheus status shows a request timeout.

The ks-apiserver logs are also full of request timeouts:

...

E0701 09:41:37.153095 1 prometheus.go:61] Get http://prometheus-k8s.kubesphere-monitoring-system.svc:9090/api/v1/query_range?end=1593596482.969&query=round%28sum+by+%28namespace%2C+pod%2C+container%29+%28irate%28container_cpu_usage_seconds_total%7Bjob%3D%22kubelet%22%2C+container%21%3D%22POD%22%2C+container%21%3D%22%22%2C+image%21%3D%22%22%2C+pod%3D%22minio-845b7bd867-wc8fb%22%2C+namespace%3D%22kubesphere-system%22%2C+container%3D%22minio%22%7D%5B5m%5D%29%29%2C+0.001%29&start=1593578482.969&step=300s: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

E0701 09:41:37.153149 1 prometheus.go:61] Get http://prometheus-k8s.kubesphere-monitoring-system.svc:9090/api/v1/query_range?end=1593596482.969&query=sum+by+%28namespace%2C+pod%2C+container%29+%28container_memory_working_set_bytes%7Bjob%3D%22kubelet%22%2C+container%21%3D%22POD%22%2C+container%21%3D%22%22%2C+image%21%3D%22%22%2C+pod%3D%22minio-845b7bd867-wc8fb%22%2C+namespace%3D%22kubesphere-system%22%2C+container%3D%22minio%22%7D%29&start=1593578482.969&step=300s: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

E0701 09:41:48.518936 1 prometheus.go:61] Get http://prometheus-k8s.kubesphere-monitoring-system.svc:9090/api/v1/query_range?end=1593596494.492&query=round%28sum+by+%28namespace%2C+pod%29+%28irate%28container_cpu_usage_seconds_total%7Bjob%3D%22kubelet%22%2C+pod%21%3D%22%22%2C+image%21%3D%22%22%7D%5B5m%5D%29%29+%2A+on+%28namespace%2C+pod%29+group_left%28owner_kind%2C+owner_name%29+kube_pod_owner%7B%7D+%2A+on+%28namespace%2C+pod%29+group_left%28node%29+kube_pod_info%7Bpod%3D~%22minio-make-bucket-job-rjl6v%7Cminio-845b7bd867-wc8fb%24%22%2C+namespace%3D%22kubesphere-system%22%7D%2C+0.001%29&start=1593594694.492&step=60s: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
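The PromQL in these log lines is URL-encoded, which makes it hard to read. Below is a minimal Python sketch (standard library only) that decodes the parameters of one of the logged URLs. The helper name `decode_prometheus_query` is hypothetical and is not part of KubeSphere or Prometheus.

```python
from urllib.parse import parse_qs, urlsplit


def decode_prometheus_query(url: str) -> dict:
    """Return the decoded query-string parameters of a Prometheus HTTP API URL."""
    # parse_qs turns '+' into spaces and decodes %XX escapes such as %28 -> '('
    params = parse_qs(urlsplit(url).query)
    # Each parameter appears once in these URLs, so keep only the first value
    return {name: values[0] for name, values in params.items()}


# Second URL from the ks-apiserver log above (memory working set of the minio container)
LOGGED_URL = (
    "http://prometheus-k8s.kubesphere-monitoring-system.svc:9090/api/v1/query_range"
    "?end=1593596482.969&query=sum+by+%28namespace%2C+pod%2C+container%29+"
    "%28container_memory_working_set_bytes%7Bjob%3D%22kubelet%22%2C+"
    "container%21%3D%22POD%22%2C+container%21%3D%22%22%2C+image%21%3D%22%22%2C+"
    "pod%3D%22minio-845b7bd867-wc8fb%22%2C+namespace%3D%22kubesphere-system%22%2C+"
    "container%3D%22minio%22%7D%29&start=1593578482.969&step=300s"
)

params = decode_prometheus_query(LOGGED_URL)
print(params["query"])
print(params["start"], params["end"], params["step"])
```

The decoded query is `sum by (namespace, pod, container) (container_memory_working_set_bytes{job="kubelet", container!="POD", container!="", image!="", pod="minio-845b7bd867-wc8fb", namespace="kubesphere-system", container="minio"})`, covering an 18000 s (5 hour) window at a 300 s step. The query itself looks ordinary; the error is that ks-apiserver never received response headers from `prometheus-k8s.kubesphere-monitoring-system.svc:9090`.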

After modifying ks-installer to enable DevOps, the installer log shows that MinIO still fails to deploy:

TASK [common : Kubesphere | Deploy minio] **************************************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/helm upgrade --install ks-minio /etc/kubesphere/minio-ha -f /etc/kubesphere/custom-values-minio.yaml --set fullnameOverride=minio --namespace kubesphere-system --wait --timeout 1800\n", "delta": "0:30:25.859334", "end": "2020-07-01 08:55:10.418415", "msg": "non-zero return code", "rc": 1, "start": "2020-07-01 08:24:44.559081", "stderr": "Error: timed out waiting for the condition", "stderr_lines": ["Error: timed out waiting for the condition"], "stdout": "Release \"ks-minio\" does not exist. Installing it now.", "stdout_lines": ["Release \"ks-minio\" does not exist. Installing it now."]}

...ignoring

TASK [common : debug] **********************************************************

ok: [localhost] => {

"msg": [

"1. check the storage configuration and storage server",

"2. make sure the DNS address in /etc/resolv.conf is available.",

"3. execute 'helm del --purge ks-minio && kubectl delete job -n kubesphere-system ks-minio-make-bucket-job'",

"4. Restart the installer pod in kubesphere-system namespace"

]

}

In the console, MinIO already shows a normal status:

However, one request keeps timing out:

The minio mc (MinIO client) log keeps showing request timeouts:

Connecting to Minio server: http://minio:9000

mc: Unable to initialize new config from the provided credentials. Get http://minio:9000/probe-bucket-sign-eoko62s6i0sr/?location=: dial tcp: i/o timeout.

"Failed attempts: 1"

mc: Unable to initialize new config from the provided credentials. Get http://minio:9000/probe-bucket-sign-pwots1nwkmkw/?location=: dial tcp: i/o timeout.

"Failed attempts: 2"

mc: Unable to initialize new config from the provided credentials. Get http://minio:9000/probe-bucket-sign-oiez46q5nibc/?location=: dial tcp: i/o timeout.

"Failed attempts: 3"

... ...
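To narrow down which of the installer's hints applies, it can help to tell a DNS lookup failure apart from a TCP connection timeout or a refused connection. Below is a minimal Python sketch that does this, loosely mimicking the "Failed attempts" retry loop in the log above. It assumes it is run from a pod in the `kubesphere-system` namespace, so that the short name `minio` resolves through cluster DNS. `probe` and `probe_with_retries` are hypothetical helpers, not part of MinIO or mc.

```python
import socket
import time


def probe(host: str, port: int, timeout: float = 5.0) -> str:
    """Classify a single TCP connection attempt to host:port."""
    try:
        # Step 1: name resolution only (cluster DNS for short names like "minio").
        # getaddrinfo has no timeout argument; it blocks per the resolver settings.
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns-failure"
    try:
        # Step 2: TCP handshake with an explicit timeout
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except socket.timeout:
        return "tcp-timeout"
    except ConnectionRefusedError:
        return "refused"
    except OSError as exc:
        return f"error: {exc}"


def probe_with_retries(host: str, port: int, attempts: int = 3,
                       timeout: float = 5.0, delay: float = 1.0) -> tuple:
    """Retry probe() and print each failure, loosely like the mc log above."""
    result = "not-run"
    for attempt in range(1, attempts + 1):
        result = probe(host, port, timeout)
        if result == "ok":
            return attempt, result
        print(f"Failed attempts: {attempt} ({result})")
        if attempt < attempts:
            time.sleep(delay)
    return attempts, result
```

Inside such a pod, one could call `probe_with_retries("minio", 9000)`, or `probe("prometheus-k8s.kubesphere-monitoring-system.svc", 9090)` for the Prometheus timeouts. A `dns-failure` would point toward hint 2 (`/etc/resolv.conf` / cluster DNS), while a `tcp-timeout` would point toward the Service or pod network path.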

I have also tried @Forest-L's method, and I could not find a solution in the community either.

What could be causing this? I have no clue at this point. Could someone please take a look?

@Cauchy @rainwu