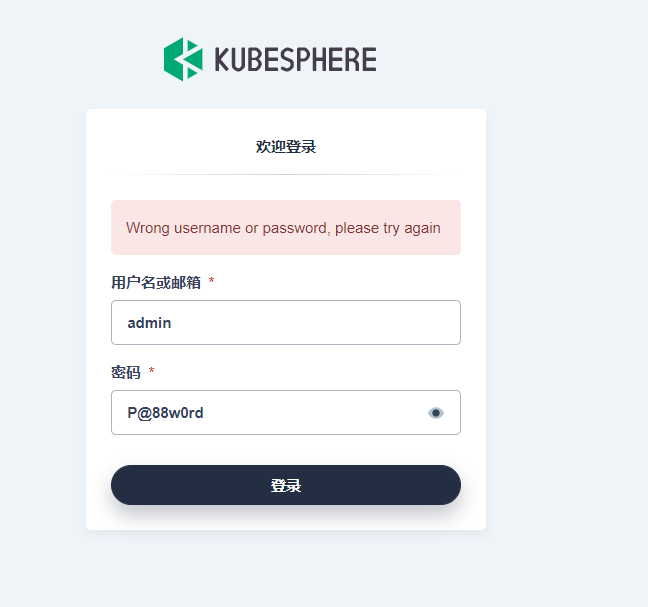

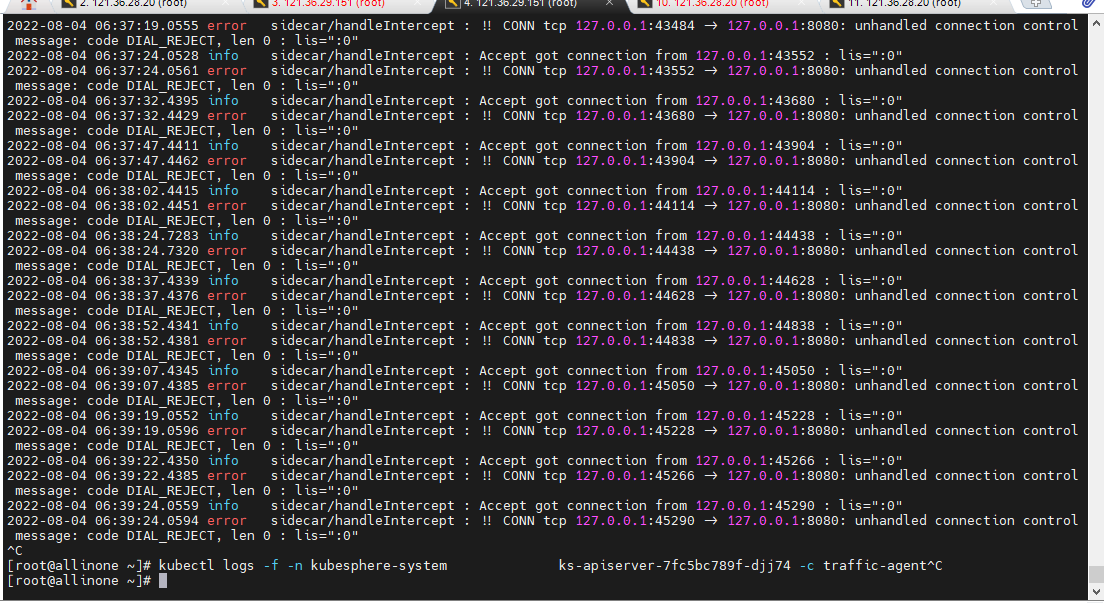

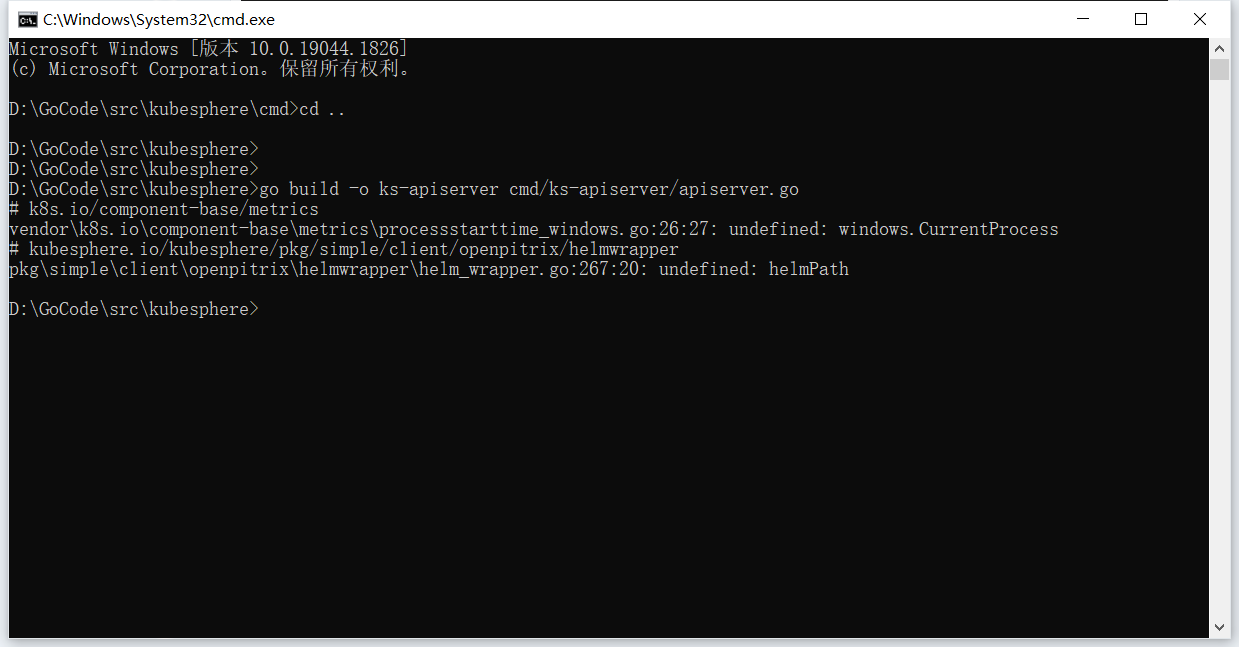

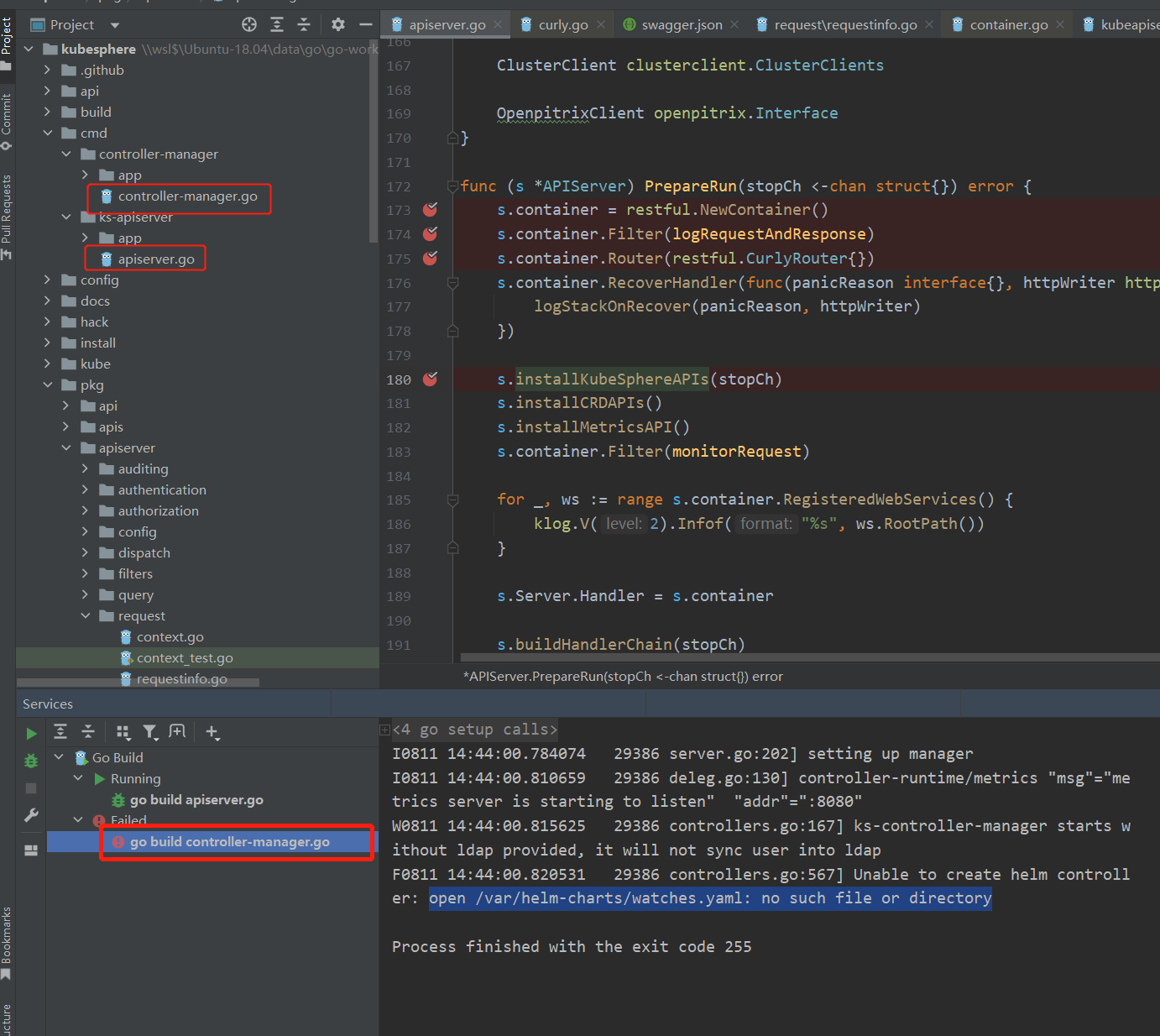

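I have the same problem: I started ks-apiserver locally, and after using telepresence to swap out the remote pod, the web console is no longer accessible.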

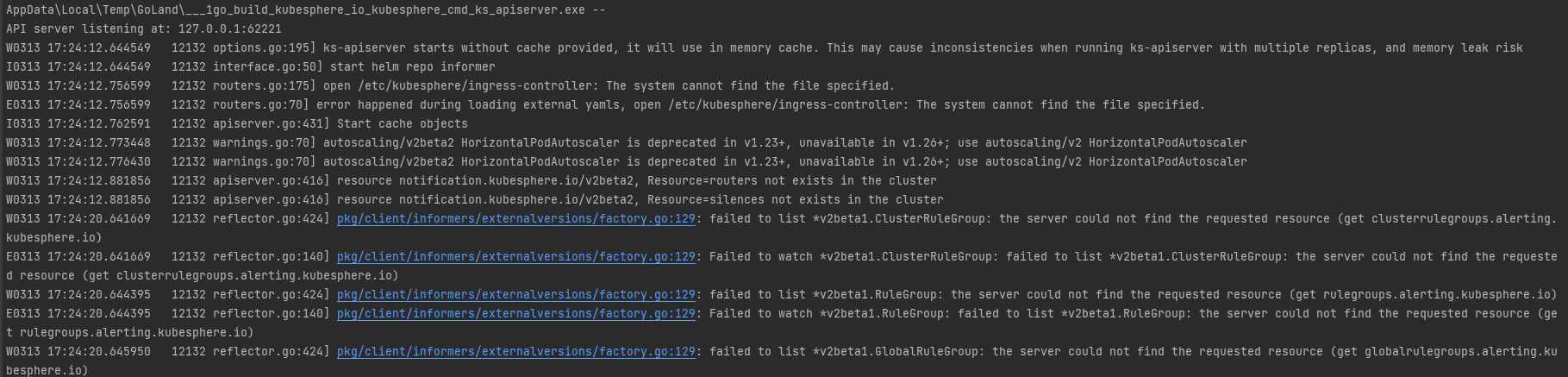

Output of ~/go/src/kubesphere.io/kubesphere/bin/cmd/ks-apiserver --kubeconfig ~/.kube/config:

W0812 11:26:05.645335 16149 options.go:178] ks-apiserver starts without redis provided, it will use in memory cache. This may cause inconsistencies when running ks-apiserver with multiple replicas.

W0812 11:26:05.678203 16149 routers.go:174] open /etc/kubesphere/ingress-controller: no such file or directory

E0812 11:26:05.678244 16149 routers.go:69] error happened during loading external yamls, open /etc/kubesphere/ingress-controller: no such file or directory

I0812 11:26:05.685234 16149 apiserver.go:359] Start cache objects

W0812 11:26:05.803593 16149 apiserver.go:490] resource iam.kubesphere.io/v1alpha2, Resource=groups not exists in the cluster

W0812 11:26:05.803652 16149 apiserver.go:490] resource iam.kubesphere.io/v1alpha2, Resource=groupbindings not exists in the cluster

W0812 11:26:05.803720 16149 apiserver.go:490] resource iam.kubesphere.io/v1alpha2, Resource=groups not exists in the cluster

W0812 11:26:05.803741 16149 apiserver.go:490] resource iam.kubesphere.io/v1alpha2, Resource=groupbindings not exists in the cluster

W0812 11:26:05.803781 16149 apiserver.go:490] resource network.kubesphere.io/v1alpha1, Resource=ippools not exists in the cluster

I0812 11:26:06.305088 16149 apiserver.go:563] Finished caching objects

I0812 11:26:06.305163 16149 apiserver.go:286] Start listening on :9090

telepresence --namespace kubesphere-system --swap-deployment ks-apiserver --also-proxy redis.kubesphere-system.svc --also-proxy openldap.kubesphere-system.svc

Output:

T: Using a Pod instead of a Deployment for the Telepresence proxy. If you experience problems, please file an issue!

T: Set the environment variable TELEPRESENCE_USE_DEPLOYMENT to any non-empty value to force the old behavior, e.g.,

T: env TELEPRESENCE_USE_DEPLOYMENT=1 telepresence --run curl hello

T: Starting proxy with method 'vpn-tcp', which has the following limitations: All processes are affected, only one telepresence can run per machine, and you can't use other VPNs. You may need to add cloud hosts and headless services with --also-proxy. For a full list

T: of method limitations see https://telepresence.io/reference/methods.html

T: Volumes are rooted at $TELEPRESENCE_ROOT. See https://telepresence.io/howto/volumes.html for details.

T: Starting network proxy to cluster by swapping out Deployment ks-apiserver with a proxy Pod

T: Forwarding remote port 9090 to local port 9090.

T: Setup complete. Launching your command.

@kubernetes-admin@cluster.local|bash-4.3#

kubesphere.yaml:

authentication:

  authenticateRateLimiterMaxTries: 10

  authenticateRateLimiterDuration: 10m0s

  loginHistoryRetentionPeriod: 168h

  maximumClockSkew: 10s

  multipleLogin: False

  kubectlImage: kubesphere/kubectl:v1.0.0

  jwtSecret: "OYBwwbPevij4SfbRXaolQSxCEyx84gEk"

authorization:

  mode: "AlwaysAllow"

ldap:

  host: openldap.kubesphere-system.svc:389

  managerDN: cn=admin,dc=kubesphere,dc=io

  managerPassword: admin

  userSearchBase: ou=Users,dc=kubesphere,dc=io

  groupSearchBase: ou=Groups,dc=kubesphere,dc=io

monitoring:

  endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090

The telepresence log output shows:

[19] SSH port forward (socks and proxy poll): exit 0

185.2 22 | s: SW#594:10.233.0.3:53: deleting (14 remain)

185.2 22 | s: SW'unknown':Mux#613: deleting (13 remain)

185.2 22 | s: SW#602:10.233.0.3:53: deleting (12 remain)

185.2 22 | s: SW'unknown':Mux#623: deleting (11 remain)

185.2 22 | s: SW#612:10.233.0.3:53: deleting (10 remain)

185.2 22 | s: SW'unknown':Mux#648: deleting (9 remain)

185.2 22 | s: SW#637:10.233.0.3:53: deleting (8 remain)

185.2 22 | s: SW'unknown':Mux#649: deleting (7 remain)

185.2 22 | s: SW#638:10.233.0.3:53: deleting (6 remain)

185.2 22 | s: SW#-1:10.233.78.64:389: deleting (5 remain)

185.2 22 | s: SW'unknown':Mux#940: deleting (4 remain)

185.2 22 | s: SW#-1:10.233.78.64:389: deleting (3 remain)

185.2 22 | s: SW'unknown':Mux#941: deleting (2 remain)

185.2 22 | s: SW'unknown':Mux#939: deleting (1 remain)

185.2 22 | s: SW#17:10.233.0.3:53: deleting (0 remain)

185.3 TEL | [22] sshuttle: exit -15

curl from my local machine works:

curl -v http://10.233.78.223:9090/kapis/resources.kubesphere.io/v1alpha3/deployments

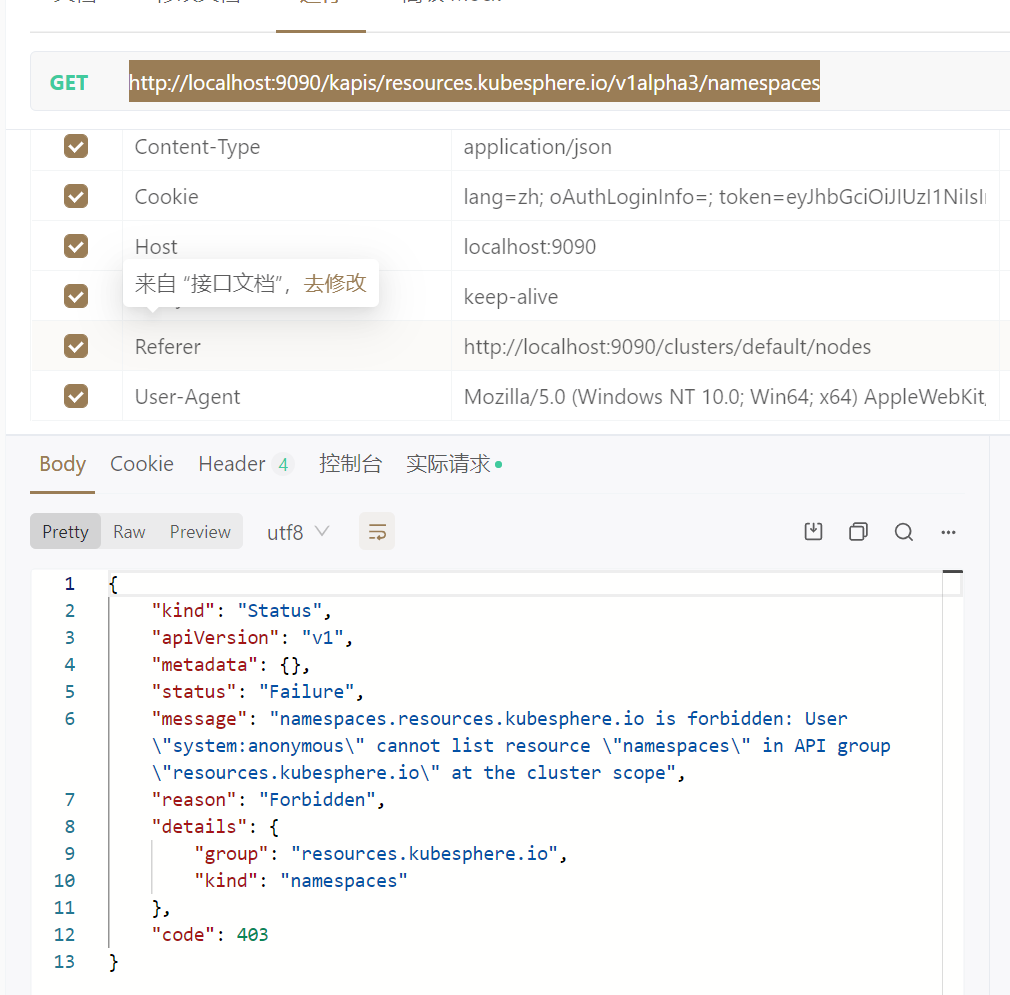

None of the web console's API requests can be reached:

@kubernetes-admin@cluster.local|bash-4.3# curl -v http://10.12.75.55:30880/kapis/config.kubesphere.io/v1alpha2/configs/configz

* Trying 10.12.75.55...

* Connected to 10.12.75.55 (10.12.75.55) port 30880 (#0)

> GET /kapis/config.kubesphere.io/v1alpha2/configs/configz HTTP/1.1

> Host: 10.12.75.55:30880

> User-Agent: curl/7.47.0

> Accept: */*

>

* Empty reply from server

* Connection #0 to host 10.12.75.55 left intact

curl: (52) Empty reply from server
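
To compare the two endpoints side by side, something like the following could be used. This is only a rough sketch: `describe_curl_exit` and `probe` are hypothetical helper names, not part of KubeSphere or telepresence, and the 5-second timeout is an arbitrary choice. It prints the HTTP status together with curl's exit code, so a direct hit on the pod IP can be told apart from the "Empty reply from server" (exit 52) seen through the NodePort.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing endpoints; names are illustrative only.

# Map a curl exit code to a short human-readable description.
describe_curl_exit() {
  case "$1" in
    0)  echo "ok" ;;
    6)  echo "could not resolve host" ;;
    7)  echo "failed to connect" ;;
    28) echo "timed out" ;;
    52) echo "empty reply from server" ;;
    56) echo "failure receiving data" ;;
    *)  echo "other curl error" ;;
  esac
}

# Request a URL and print the HTTP status plus curl's exit code.
# On a failed request curl reports the status as 000.
probe() {
  local url="$1" status rc
  status=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
  rc=$?
  echo "$url http=$status curl_exit=$rc ($(describe_curl_exit "$rc"))"
}

# Example calls, using the addresses from this post:
# probe http://10.233.78.223:9090/kapis/resources.kubesphere.io/v1alpha3/deployments
# probe http://10.12.75.55:30880/kapis/config.kubesphere.io/v1alpha2/configs/configz
```

If the first call returns a normal HTTP status while the second reports exit 52, the local ks-apiserver itself is answering and the failure is somewhere on the path from the NodePort to the swapped pod.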