
Operating system information

Virtual machine: CentOS 7.6.1810

Kubernetes version information

[root@master-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master-01 Ready control-plane,master 6d4h v1.21.0

master-02 Ready control-plane,master 6d4h v1.21.0

master-03 Ready control-plane,master 6d4h v1.21.0

node-01 Ready <none> 6d4h v1.21.0

node-02 Ready <none> 5d5h v1.21.0

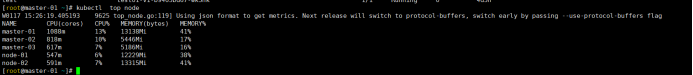

[root@master-01 ~]# kubectl top node

W0117 16:16:55.836070 4321 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master-01 1098m 13% 13235Mi 41%

master-02 563m 7% 5402Mi 16%

master-03 637m 7% 5235Mi 16%

node-01 984m 12% 12257Mi 38%

node-02 746m 9% 13332Mi 41%

Container runtime

The Docker server version is 19.03.2.

Details are as follows:

docker version

Client: Docker Engine - Community

Version: 20.10.12

API version: 1.40

Go version: go1.16.12

Git commit: e91ed57

Built: Mon Dec 13 11:45:41 2021

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 19.03.2

API version: 1.40 (minimum version 1.12)

Go version: go1.12.8

Git commit: 6a30dfc

Built: Thu Aug 29 05:27:34 2019

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.4.12

GitCommit: 7b11cfaabd73bb80907dd23182b9347b4245eb5d

runc:

Version: 1.0.2

GitCommit: v1.0.2-0-g52b36a2

docker-init:

Version: 0.18.0

GitCommit: fec3683

KubeSphere version information

KubeSphere v3.2.1, installed online on an existing Kubernetes cluster.

What is the problem

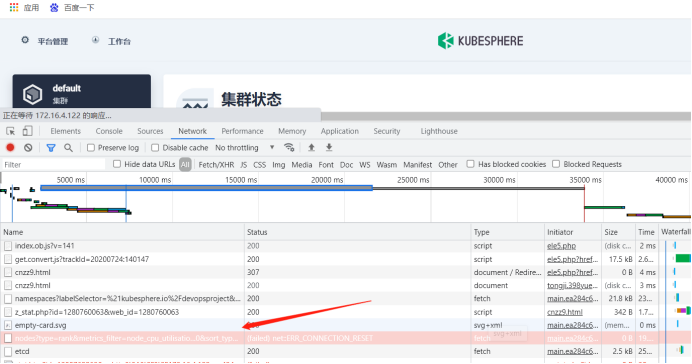

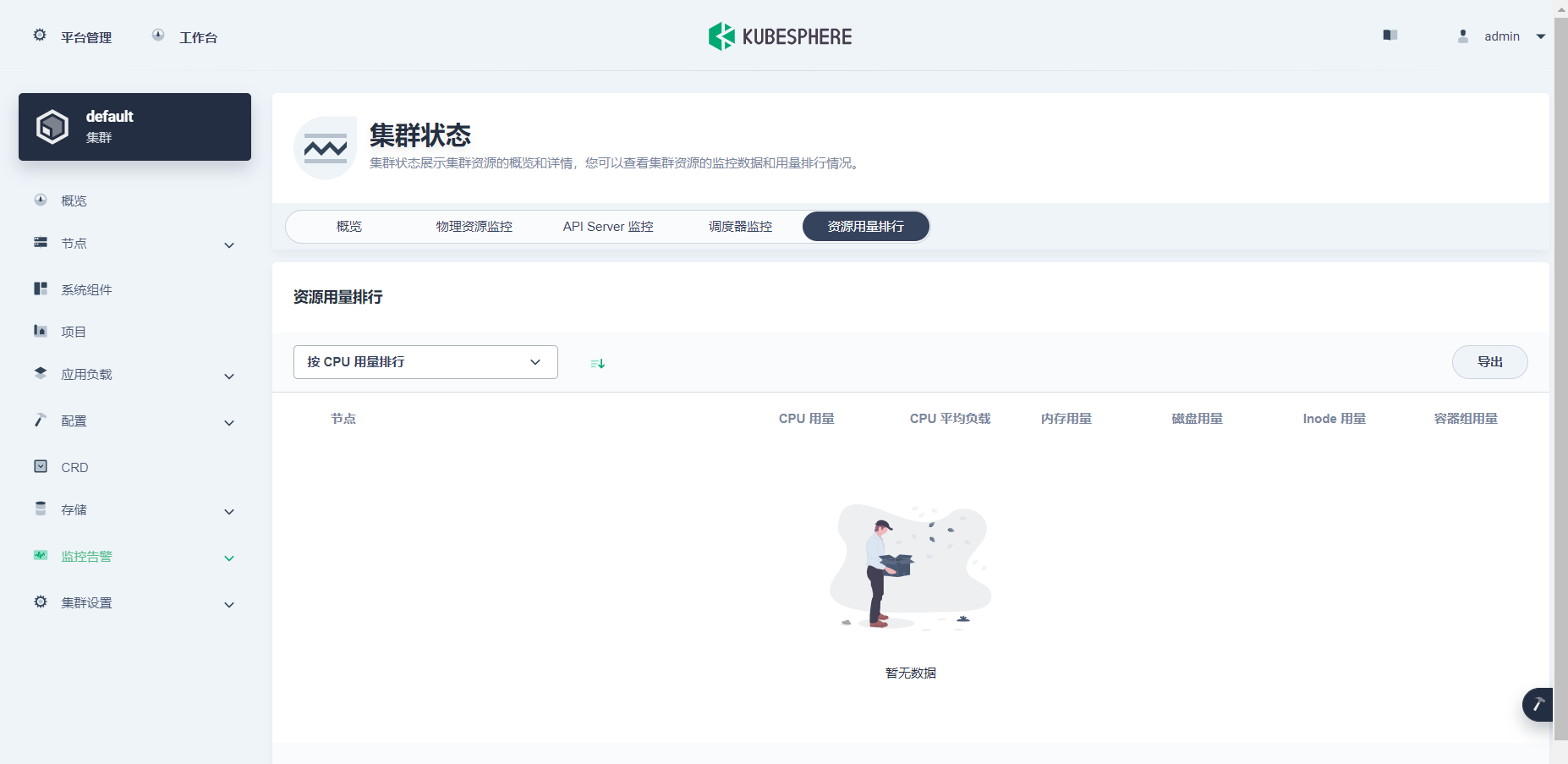

The system monitoring metrics on the dashboard page are empty.

The problem in detail:

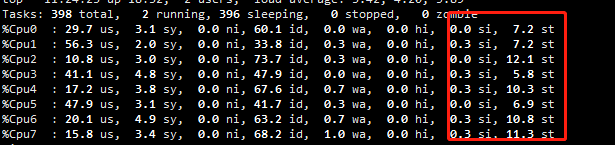

Running `kubectl top` on the command line returns normal results.

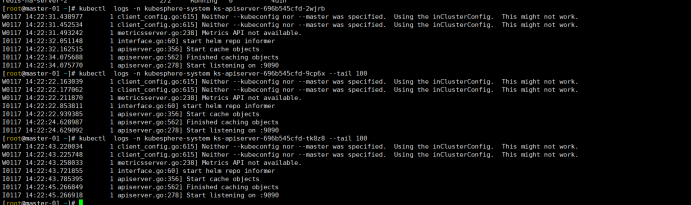
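To make the "normal" command-line result easier to compare against the empty dashboard, the `kubectl top node` output shown earlier can be condensed to one line per node. This is only a minimal sketch: `top_output` and `summarize_top` are illustrative names, not part of kubectl or KubeSphere, and the sample text is copied from the output in this post.

```shell
#!/bin/sh
# Sample `kubectl top node` output copied from this post, including the klog warning line.
top_output='W0117 16:16:55.836070 4321 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
master-01 1098m 13% 13235Mi 41%
master-02 563m 7% 5402Mi 16%
master-03 637m 7% 5235Mi 16%
node-01 984m 12% 12257Mi 38%
node-02 746m 9% 13332Mi 41%'

# Hypothetical helper: keep only five-column data rows (this drops the
# warning line and the header) and print "node CPU% MEMORY%".
summarize_top() {
  awk 'NF == 5 && $1 != "NAME" { print $1, $3, $5 }'
}

printf '%s\n' "$top_output" | summarize_top
```

On a live cluster the same helper could presumably be fed directly with `kubectl top node | summarize_top`. If every node prints a percentage here while the dashboard stays empty, that suggests metrics-server itself is answering and the gap lies elsewhere.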

ks-console logs

ks-apiserver logs

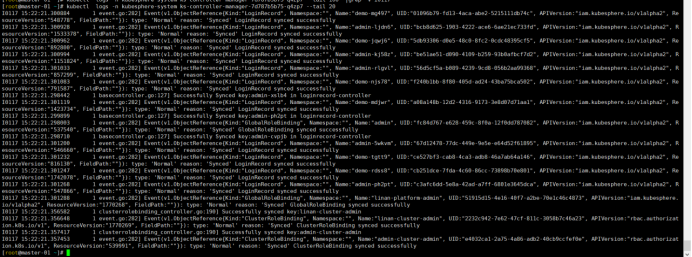

ks-controller-manager logs are normal

apiserver metrics-server

The metrics-server logs are as follows:

kubectl logs -n kube-system metrics-server-687cb5444-dlkpr

I0117 06:46:40.340023 1 serving.go:325] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)

I0117 06:46:41.312568 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0117 06:46:41.312611 1 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0117 06:46:41.313168 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0117 06:46:41.313194 1 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0117 06:46:41.313231 1 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0117 06:46:41.313251 1 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0117 06:46:41.313854 1 secure_serving.go:197] Serving securely on [::]:4443

I0117 06:46:41.313970 1 dynamic_serving_content.go:130] Starting serving-cert::/tmp/apiserver.crt::/tmp/apiserver.key

I0117 06:46:41.314005 1 tlsconfig.go:240] Starting DynamicServingCertificateController

I0117 06:46:41.412789 1 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0117 06:46:41.413337 1 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

I0117 06:46:41.413337 1 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I am also getting ready to reinstall the system.
