Offline Installation of KubeSphere 2.1.1 and Kubernetes

Jjhh452020

Feynman

2020-03-22T15:04:52Z INFO : shell-operator v1.0.0-beta.5

2020-03-22T15:04:52Z INFO : HTTP SERVER Listening on 0.0.0.0:9115

2020-03-22T15:04:52Z INFO : Use temporary dir: /tmp/shell-operator

2020-03-22T15:04:52Z INFO : Initialize hooks manager …

2020-03-22T15:04:52Z INFO : Search and load hooks …

2020-03-22T15:04:52Z INFO : Load hook config from '/hooks/kubesphere/installRunner.py'

2020-03-22T15:04:52Z INFO : Initializing schedule manager …

2020-03-22T15:04:52Z INFO : KUBE Init Kubernetes client

2020-03-22T15:04:52Z INFO : KUBE-INIT Kubernetes client is configured successfully

2020-03-22T15:04:52Z INFO : MAIN: run main loop

2020-03-22T15:04:52Z INFO : MAIN: add onStartup tasks

2020-03-22T15:04:52Z INFO : Running schedule manager …

2020-03-22T15:04:52Z INFO : QUEUE add all HookRun@OnStartup

2020-03-22T15:04:52Z INFO : MSTOR Create new metric shell_operator_tasks_queue_length

2020-03-22T15:04:52Z INFO : MSTOR Create new metric shell_operator_live_ticks

2020-03-22T15:04:52Z INFO : GVR for kind 'ConfigMap' is /v1, Resource=configmaps

2020-03-22T15:04:52Z INFO : EVENT Kube event '188f16e7-3165-45a3-a67d-941e1eafc5c3'

2020-03-22T15:04:52Z INFO : QUEUE add TASK_HOOK_RUN@KUBE_EVENTS kubesphere/installRunner.py

2020-03-22T15:04:55Z INFO : TASK_RUN HookRun@KUBE_EVENTS kubesphere/installRunner.py

2020-03-22T15:04:55Z INFO : Running hook 'kubesphere/installRunner.py' binding 'KUBE_EVENTS' …

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [preinstall : check k8s version] ******************************************

changed: [localhost]

TASK [preinstall : init k8s version] *******************************************

ok: [localhost]

TASK [preinstall : Stop if kuernetes version is nonsupport] ********************

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : check helm status] ******************************************

changed: [localhost]

TASK [preinstall : Stop if Helm is not available] ******************************

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : check storage class] ****************************************

changed: [localhost]

TASK [preinstall : Stop if StorageClass was not found] *************************

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : check default storage class] ********************************

changed: [localhost]

TASK [preinstall : Stop if defaultStorageClass was not found] ******************

skipping: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=9 changed=4 unreachable=0 failed=0 skipped=4 rescued=0 ignored=0

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [metrics-server : Metrics-Server | Checking old installation files] *******

ok: [localhost]

TASK [metrics-server : Metrics-Server | deleting old prometheus-operator] ******

skipping: [localhost]

TASK [metrics-server : Metrics-Server | deleting old metrics-server files] *****

[DEPRECATION WARNING]: evaluating {'failed': False, u'stat': {u'exists':

False}, u'changed': False} as a bare variable, this behaviour will go away and

you might need to add |bool to the expression in the future. Also see

CONDITIONAL_BARE_VARS configuration toggle.. This feature will be removed in

version 2.12. Deprecation warnings can be disabled by setting

deprecation_warnings=False in ansible.cfg.

ok: [localhost] => (item=metrics-server)

TASK [metrics-server : Metrics-Server | Getting metrics-server installation files] ***

changed: [localhost]

TASK [metrics-server : Metrics-Server | Creating manifests] ********************

changed: [localhost] => (item={u'type': u'config', u'name': u'values', u'file': u'values.yaml'})

TASK [metrics-server : Metrics-Server | Check Metrics-Server] ******************

changed: [localhost]

TASK [metrics-server : Metrics-Server | Installing metrics-server] *************

changed: [localhost]

TASK [metrics-server : Metrics-Server | Installing metrics-server retry] *******

skipping: [localhost]

TASK [metrics-server : Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready] ***

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (60 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (59 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (58 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (57 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (56 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (55 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (54 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (53 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (52 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (51 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (50 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (49 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (48 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (47 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (46 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (45 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (44 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (43 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (42 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (41 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (40 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (39 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (38 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (37 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (36 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (35 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (34 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (33 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (32 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (31 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (30 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (29 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (28 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (27 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (26 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (25 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (24 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (23 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (22 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (21 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (20 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (19 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (18 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (17 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (16 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (15 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (14 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (13 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (12 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (11 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (10 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (9 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (8 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (7 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (6 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (5 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (4 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (3 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (2 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (1 retries left).

fatal: [localhost]: FAILED! => {"attempts": 60, "changed": true, "cmd": "/usr/local/bin/kubectl get apiservices v1beta1.metrics.k8s.io -o jsonpath='{.status.conditions[0].status}'\n", "delta": "0:00:02.124720", "end": "2020-03-22 15:17:04.371141", "rc": 0, "start": "2020-03-22 15:17:02.246421", "stderr": "", "stderr_lines": [], "stdout": "False", "stdout_lines": ["False"]}

PLAY RECAP *********************************************************************

localhost : ok=7 changed=4 unreachable=0 failed=1 skipped=5 rescued=0 ignored=0

E0324 00:36:36.213124 1 reflector.go:283] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to watch *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&resourceVersion=1397&timeoutSeconds=427&watch=true: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:37.217749 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:38.219640 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:39.221926 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:40.223767 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:41.225314 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:42.227339 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:44.380782 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:45.382786 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:46.385192 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

E0324 00:36:47.387136 1 reflector.go:125] github.com/flant/shell-operator/pkg/kube_events_manager/kube_events_manager.go:354: Failed to list *unstructured.Unstructured: Get https://10.233.0.1:443/api/v1/namespaces/kubesphere-system/configmaps?fieldSelector=metadata.name%3Dks-installer&limit=500&resourceVersion=0: dial tcp 10.233.0.1:443: connect: connection refused

Cauchy

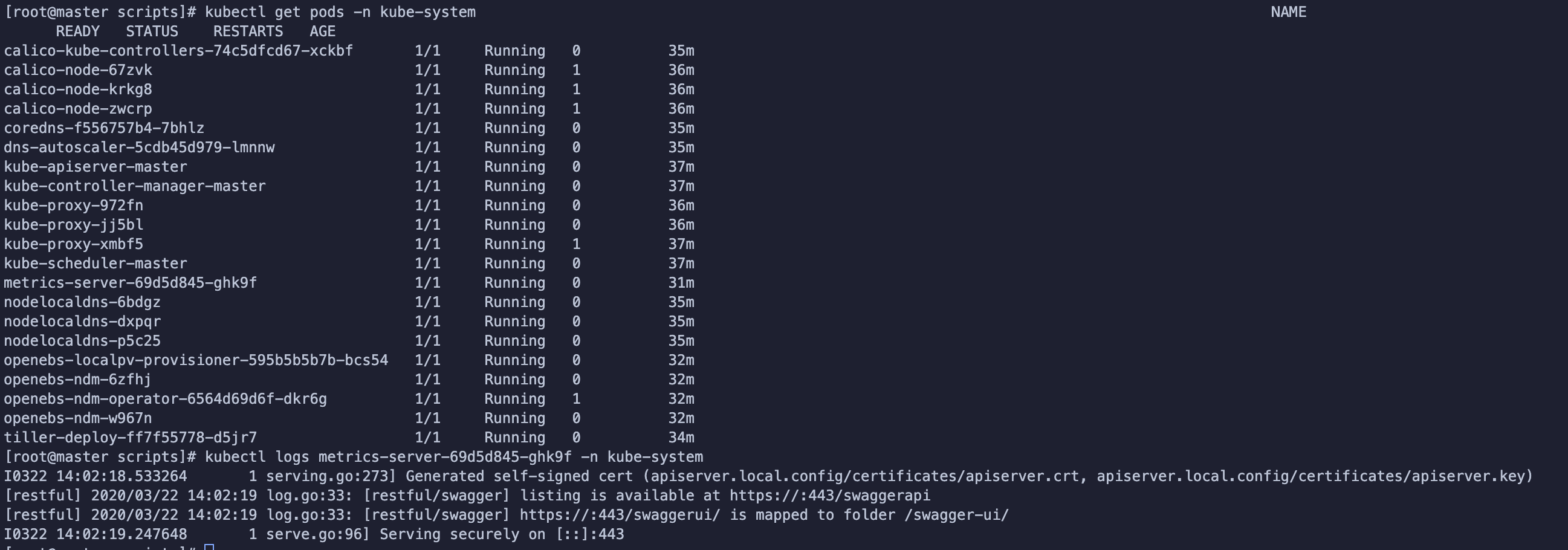

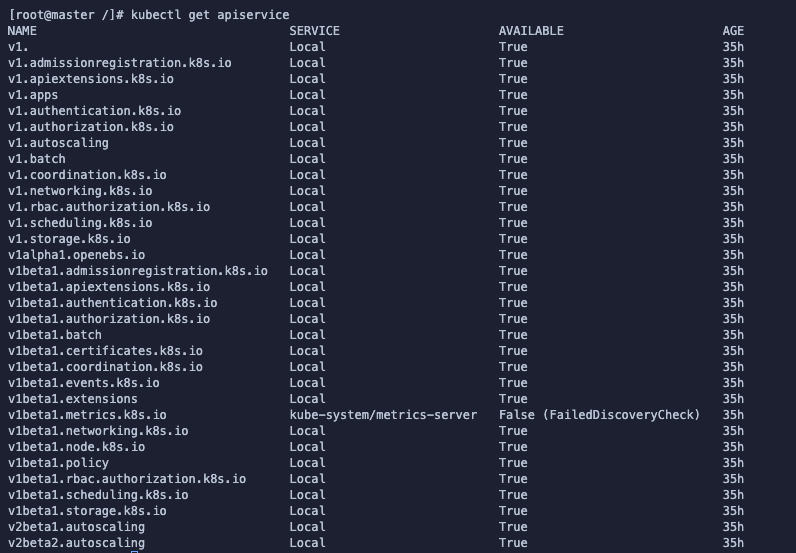

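Run kubectl get apiservice and check whether AVAILABLE is True for the metrics entry (v1beta1.metrics.k8s.io). If it is not, you can delete all of the metrics-server related resources, or check whether API aggregation is disabled on your k8s cluster.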
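A minimal sketch of that check, assuming the output of kubectl get apiservice v1beta1.metrics.k8s.io -o json has already been loaded into a dict. The helper name available_status and the sample object are illustrative only and are not part of the installer. The installer's own check (see the fatal line in the log) reads .status.conditions[0]; this sketch looks the condition up by its type instead.

```python
def available_status(apiservice: dict) -> str:
    """Return the status of the 'Available' condition, or 'Unknown' if it is absent."""
    for cond in apiservice.get("status", {}).get("conditions", []):
        if cond.get("type") == "Available":
            return cond.get("status", "Unknown")
    return "Unknown"


# Hypothetical sample shaped like an APIService object; not taken from this cluster.
sample = {
    "metadata": {"name": "v1beta1.metrics.k8s.io"},
    "status": {
        "conditions": [
            {"type": "Available", "status": "False", "reason": "FailedDiscoveryCheck"}
        ]
    },
}

print(available_status(sample))  # prints: False
```

When the status is not True, the reason and message fields of the same condition are usually the quickest hint about what is wrong.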

Jjhh452020

Cauchy How do I delete the metrics-server related resources?

Jjhh452020

All-in-One mode installs successfully, but Multi-Node mode fails.

Raccoon-code

Is there a tutorial for installing version 2.1.0 offline?

Raccoon-code

calvinyu

That one is for 2.1.1. I want the one for 2.1.0 and cannot find it. Can you find it?

calvinyu

Raccoon-code What is your reason for not installing the latest version?

Feynman

Raccoon-code 2.1.1 is the latest stable release.

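Raccoon-code

I installed 2.1.1 offline (one master, two nodes), and the MySQL instance I deployed works normally. But when I deploy the Nacos microservice through KubeSphere, Nacos reports that it cannot connect to the database, whereas a Nacos container started from the command line on the host connects to the database fine. With my earlier 2.1.0 all-in-one deployment, Nacos could also connect to the database on the multi-node cluster. So I want to deploy 2.1.0 in multi-node mode to check whether this is a KubeSphere 2.1.1 problem, but I cannot find the offline installation package for 2.1.0...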
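One way to narrow this down before comparing versions is to test plain TCP reachability of the database from inside the Nacos pod and again from the host. The following is only a sketch: the helper name can_connect is made up for illustration, and the host and port in the usage comment are placeholders, not values from this cluster.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False


# Example usage (placeholder address; substitute the address Nacos is configured with):
# print(can_connect("mysql.example.svc", 3306))
```

If this returns True on the host but False inside the pod, the problem is more likely in pod networking or Service DNS than in Nacos itself.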

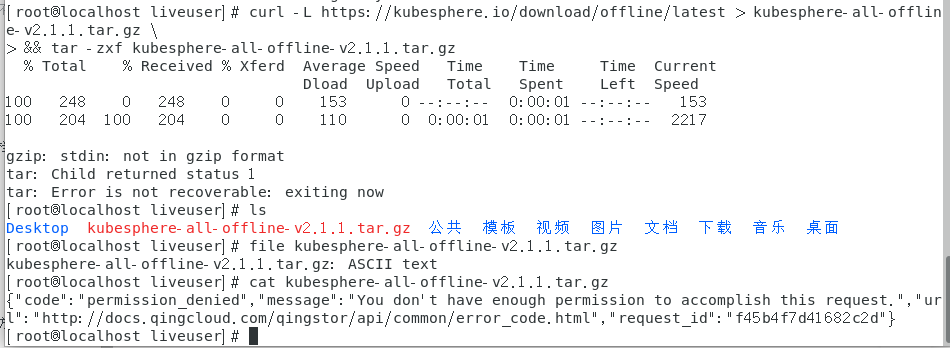

Download failed

{"code":"permission_denied","message":"You don't have enough permission to accomplish this request.","url":"http://docs.qingcloud.com/qingstor/api/common/error_code.html","request_id":"f45b4f7d41682c2d"}

Feynman

liujinhua_9512 It should be fixed now. Please try downloading again.

2020-03-31 17:07:36,104 p=17663 u=root | fatal: [node02 -> 10.8.230.11]: FAILED! => {

"attempts": 4,

"changed": true,

"cmd": [

"/usr/bin/docker",

"pull",

"10.8.230.11:5000/mirrorgooglecontainers/pause-amd64:3.1"

],

"delta": "0:00:00.063288",

"end": "2020-03-31 17:07:36.083266",

"rc": 1,

"start": "2020-03-31 17:07:36.019978"

}

STDERR:

Error response from daemon: Get https://10.8.230.11:5000/v2/: http: server gave HTTP response to HTTPS client

MSG: An error occurred during the upgrade.

The registry on the master node serves plain HTTP.

How do I work around this?
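On the "server gave HTTP response to HTTPS client" error: Docker talks HTTPS to registries by default, and a plain-HTTP registry normally has to be listed under insecure-registries in the daemon configuration (/etc/docker/daemon.json on most Linux installs). The sketch below only builds the updated configuration. The helper name add_insecure_registry and the starting config are assumptions for illustration; the registry address is the one from the error above.

```python
import json


def add_insecure_registry(daemon_cfg: dict, registry: str) -> dict:
    """Return a copy of a Docker daemon.json config that lists `registry` as insecure."""
    cfg = dict(daemon_cfg)
    registries = list(cfg.get("insecure-registries", []))
    if registry not in registries:
        registries.append(registry)
    cfg["insecure-registries"] = registries
    return cfg


# Assumed starting config; on a real node, load the existing daemon.json instead.
current = {"log-driver": "json-file"}
updated = add_insecure_registry(current, "10.8.230.11:5000")
print(json.dumps(updated, indent=2))
```

The resulting JSON would need to be written to the daemon configuration on every node that pulls from that registry (node02 in the log above), followed by a restart of the Docker daemon. Whether the installer is supposed to set this up by itself is not clear from this thread.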

/etc/docker/registry # cat config.yml

version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: inmemory
  filesystem:
    rootdirectory: /var/lib/registry
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3

Hello, the offline Multi-Node installation fails with this error:

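Cauchy

From the error, it looks like this image cannot be found. Is the machine's disk space on the small side, so that the images were not fully imported? Check that, then delete the *.tmp files in scripts/os and retry.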
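A small sketch of those two checks, assuming it is run from the installer directory so that scripts/os resolves. The function names are made up for illustration, and how much free space is "enough" depends on the size of the image bundle, so no threshold is hard-coded.

```python
import shutil
from pathlib import Path


def free_gib(path: str = "/") -> float:
    """Free space, in GiB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 2**30


def leftover_tmp_files(os_dir: str) -> list:
    """List *.tmp files directly under `os_dir`; empty if the directory does not exist."""
    d = Path(os_dir)
    if not d.is_dir():
        return []
    return sorted(d.glob("*.tmp"))


def remove_tmp_files(os_dir: str) -> int:
    """Delete the *.tmp files under `os_dir` and return how many were removed."""
    files = leftover_tmp_files(os_dir)
    for f in files:
        f.unlink()
    return len(files)


# Report only; nothing is deleted until remove_tmp_files() is called explicitly.
print(f"free space on /: {free_gib('/'):.1f} GiB")
print("leftover tmp files:", [p.name for p in leftover_tmp_files("scripts/os")])
```

Docker usually stores images under /var/lib/docker, so if that path is on a separate filesystem, pass it to free_gib instead of "/".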