Operating system information

Physical machine, Ubuntu 18.04.4 LTS (GNU/Linux 4.9.293 aarch64)

Kubernetes version information

```
# kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.10", GitCommit:"eae22ba6238096f5dec1ceb62766e97783f0ba2f", GitTreeState:"clean", BuildDate:"2022-05-24T12:56:35Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/arm64"}
Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5", GitCommit:"6b1d87acf3c8253c123756b9e61dac642678305f", GitTreeState:"clean", BuildDate:"2021-03-18T01:02:01Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/arm64"}
WARNING: version difference between client (1.22) and server (1.20) exceeds the supported minor version skew of +/-1
```

Container runtime

```
# docker version
Client: Docker Engine - Community
 Version: 20.10.18
 API version: 1.41
 Go version: go1.18.6
 Git commit: b40c2f6
 Built: Thu Sep 8 23:10:47 2022
 OS/Arch: linux/arm64
 Context: default
 Experimental: true

Server: Docker Engine - Community
 Engine:
  Version: 20.10.18
  API version: 1.41 (minimum version 1.12)
  Go version: go1.18.6
  Git commit: e42327a
  Built: Thu Sep 8 23:09:10 2022
  OS/Arch: linux/arm64
  Experimental: false
 containerd:
  Version: 1.6.8
  GitCommit: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6
 runc:
  Version: 1.1.4
  GitCommit: v1.1.4-0-g5fd4c4d
 docker-init:
  Version: 0.19.0
  GitCommit: de40ad0
```

KubeSphere version information

v3.3.0, installed online on top of an existing Kubernetes cluster.

What is the problem

- The default-http-backend container fails to start

Checking the container's events and the image version shows that this image is not an ARM build. Is there an alternative image that can be used?

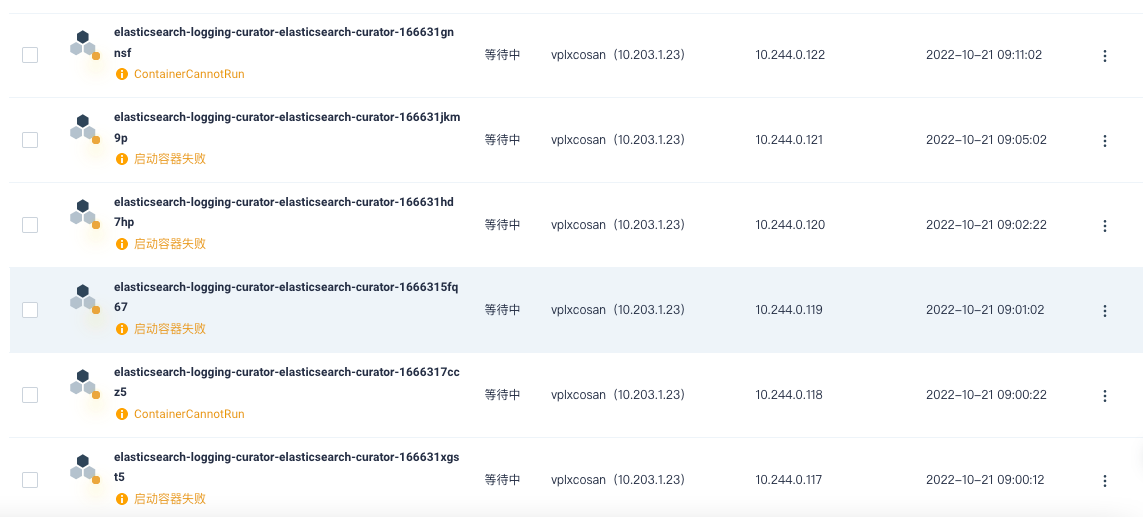
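The architecture check described above can be reproduced from the command line. The following is a minimal sketch, assuming Docker CLI 20.10 (where `docker manifest inspect` is available, as the `Experimental: true` client above suggests) and registry access from the node. The script file name and the `normalize_arch` helper are hypothetical, and the actual default-http-backend image name (visible only in the screenshot) has to be passed in as the argument.

```shell
#!/usr/bin/env bash
# Sketch: compare this host's CPU architecture with the platforms an image offers.
# Usage (hypothetical file name): bash check-image-arch.sh <image>
set -euo pipefail

# Hypothetical helper: map `uname -m` names to the names used in image configs/manifests.
normalize_arch() {
  case "$1" in
    aarch64|arm64) echo arm64 ;;
    x86_64|amd64)  echo amd64 ;;
    *)             echo "$1" ;;
  esac
}

if [ "$#" -ge 1 ]; then
  image="$1"
  echo "host:  linux/$(normalize_arch "$(uname -m)")"
  # os/arch recorded in the locally pulled copy of the image, if there is one.
  echo "local: $(docker image inspect --format '{{.Os}}/{{.Architecture}}' "$image" 2>/dev/null || echo 'not pulled locally')"
  # A multi-arch image has a manifest list with one platform entry per architecture;
  # a single-arch manifest has no such entries, so grep finds nothing.
  docker manifest inspect "$image" | grep -E '"(os|architecture)"' \
    || echo "no platform list found (probably a single-architecture image, or the registry is unreachable)"
fi
```

On this node `uname -m` reports `aarch64`, so a usable replacement image would need to show `linux/arm64` either locally or in its manifest list.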

- The elasticsearch-logging-curator-elasticsearch-curator container fails to start

Assuming this was also caused by the image not supporting the ARM architecture, I replaced the original image with kubesphere/elasticsearch-curator:v5.7.6-amd64, but the container still fails to start.

One pod fails with a RunContainerError:

```
# kubectl describe pod/elasticsearch-logging-curator-elasticsearch-curator-166631xgst5 -n kubesphere-logging-system
Name:           elasticsearch-logging-curator-elasticsearch-curator-166631xgst5
Namespace:      kubesphere-logging-system
Priority:       0
Node:           vplxcosan/10.203.1.23
Start Time:     Fri, 21 Oct 2022 09:00:12 +0800
Labels:         app=elasticsearch-curator
                controller-uid=6ef3497e-18eb-4a5a-b8e6-3229db156a9d
                job-name=elasticsearch-logging-curator-elasticsearch-curator-1666314000
                release=elasticsearch-logging-curator
Annotations:    <none>
Status:         Failed
IP:             10.244.0.117
IPs:
  IP:           10.244.0.117
Controlled By:  Job/elasticsearch-logging-curator-elasticsearch-curator-1666314000
Containers:
  elasticsearch-curator:
    Container ID:  docker://b01c8b1d5ef7186b479e75c26cd72fdccb08d10642366b8935a5abb836dcb8ec
    Image:         kubesphere/elasticsearch-curator:v5.7.6
    Image ID:      docker-pullable://kubesphere/elasticsearch-curator@sha256:b95fc873e3bc4e1c3f3a9879c3ac9476d7105d085700e55465fe43033291e410
    Port:          <none>
    Host Port:     <none>
    Command:
      curator/curator
    Args:
      --config
      /etc/es-curator/config.yml
      /etc/es-curator/action_file.yml
    State:          Waiting
      Reason:       RunContainerError
    Last State:     Terminated
      Exit Code:    0
      Started:      Mon, 01 Jan 0001 00:00:00 +0000
      Finished:     Mon, 01 Jan 0001 00:00:00 +0000
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /etc/es-curator from config-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-ng8lp (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  config-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      elasticsearch-logging-curator-elasticsearch-curator-config
    Optional:  false
  default-token-ng8lp:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-ng8lp
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:          <none>
```

Another pod fails with a ContainerCannotRun error:

```
# kubectl describe pod/elasticsearch-logging-curator-elasticsearch-curator-1666317ccz5 -n kubesphere-logging-system
Name:           elasticsearch-logging-curator-elasticsearch-curator-1666317ccz5
Namespace:      kubesphere-logging-system
Priority:       0
Node:           vplxcosan/10.203.1.23
Start Time:     Fri, 21 Oct 2022 09:00:22 +0800
Labels:         app=elasticsearch-curator
                controller-uid=6ef3497e-18eb-4a5a-b8e6-3229db156a9d
                job-name=elasticsearch-logging-curator-elasticsearch-curator-1666314000
                release=elasticsearch-logging-curator
Annotations:    <none>
Status:         Failed
IP:             10.244.0.118
IPs:
  IP:           10.244.0.118
Controlled By:  Job/elasticsearch-logging-curator-elasticsearch-curator-1666314000
Containers:
  elasticsearch-curator:
    Container ID:  docker://c6fb4aca753d6d6804b22ff567fb1aaabf3b07ef518d0936c6604fdb6fd43267
    Image:         kubesphere/elasticsearch-curator:v5.7.6
    Image ID:      docker-pullable://kubesphere/elasticsearch-curator@sha256:b95fc873e3bc4e1c3f3a9879c3ac9476d7105d085700e55465fe43033291e410
    Port:          <none>
    Host Port:     <none>
    Command:
      curator/curator
    Args:
      --config
      /etc/es-curator/config.yml
      /etc/es-curator/action_file.yml
    State:          Terminated
      Reason:       ContainerCannotRun
      Message:      failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "curator/curator": stat curator/curator: no such file or directory: unknown
      Exit Code:    127
      Started:      Fri, 21 Oct 2022 09:00:24 +0800
      Finished:     Fri, 21 Oct 2022 09:00:24 +0800
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /etc/es-curator from config-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-ng8lp (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  config-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      elasticsearch-logging-curator-elasticsearch-curator-config
    Optional:  false
  default-token-ng8lp:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-ng8lp
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:          <none>
```
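The ContainerCannotRun message says runc could not find `curator/curator`. Because that Command is a relative path (it contains a slash but does not start with `/`), it is resolved against the container's working directory rather than searched in `$PATH`. The sketch below, assuming the pod spec does not set `workingDir` (so the image's WORKDIR, or `/` when empty, applies), prints the image's architecture, WORKDIR, ENTRYPOINT and CMD, and the path that `curator/curator` would resolve to. The script file name and the `effective_command_path` helper are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: show how a relative command such as "curator/curator" is resolved.
# Assumption: the pod spec sets no workingDir, so the image WORKDIR (or "/") is used.
# Usage (hypothetical file name): bash inspect-entrypoint.sh <image>
set -euo pipefail

# Hypothetical helper: print the path the runtime will try for a command,
# given the container working directory (empty means "/").
effective_command_path() {
  local cmd="$1" workdir="${2:-/}"
  case "$cmd" in
    /*)  echo "$cmd" ;;                  # absolute path: used as-is
    */*) echo "${workdir%/}/${cmd}" ;;   # contains a slash: relative to workdir
    *)   echo "(searched in \$PATH)" ;;  # bare name: looked up in PATH
  esac
}

if [ "$#" -ge 1 ]; then
  image="$1"
  # Architecture, WORKDIR, ENTRYPOINT and CMD recorded in the image config.
  docker image inspect --format \
    'arch={{.Architecture}} workdir={{.Config.WorkingDir}} entrypoint={{json .Config.Entrypoint}} cmd={{json .Config.Cmd}}' \
    "$image"
  workdir="$(docker image inspect --format '{{.Config.WorkingDir}}' "$image")"
  echo "curator/curator resolves to: $(effective_command_path curator/curator "$workdir")"
fi
```

For example, running it with `kubesphere/elasticsearch-curator:v5.7.6` (the image shown in the describe output) on the node would indicate whether that image's layout matches the `curator/curator` command used by the Job.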

Checking the pod logs returns nothing:

```
# kubectl logs pod/elasticsearch-logging-curator-elasticsearch-curator-1666317ccz5 -n kubesphere-logging-system
# kubectl logs pod/elasticsearch-logging-curator-elasticsearch-curator-166631xgst5 -n kubesphere-logging-system
```
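Empty logs are plausible here: with `runc create failed`, the curator process most likely never started, so it wrote nothing to stdout. Likewise, `Events: <none>` may simply mean the events had already expired (kube-apiserver keeps them for `--event-ttl`, 1h by default). The sketch below collects the other places where the start-up error may still be recorded. It assumes it runs on the node that hosted the pod (vplxcosan in the output above), since it calls `docker inspect`. The script file name, the `NAMESPACE` variable and the `strip_runtime_prefix` helper are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: gather start-up evidence for a failed pod when `kubectl logs` is empty.
# Usage (hypothetical file name): bash pod-start-evidence.sh <pod-name>
# NAMESPACE (hypothetical variable) defaults to kubesphere-logging-system.
set -euo pipefail

# Hypothetical helper: describe shows "docker://<id>", docker inspect needs the bare <id>.
strip_runtime_prefix() {
  echo "${1#*://}"
}

if [ "$#" -ge 1 ]; then
  pod="$1"
  ns="${NAMESPACE:-kubesphere-logging-system}"
  # Events for this pod only, oldest first; they disappear after --event-ttl.
  kubectl get events -n "$ns" --field-selector "involvedObject.name=${pod}" --sort-by=.lastTimestamp
  # Output of an earlier container instance, if one exists.
  kubectl logs -n "$ns" "$pod" --previous || true
  # Docker keeps the start error on the container object on the node.
  cid="$(strip_runtime_prefix "$(kubectl get pod -n "$ns" "$pod" -o jsonpath='{.status.containerStatuses[0].containerID}')")"
  docker inspect --format '{{.State.Status}} exit={{.State.ExitCode}} error={{.State.Error}}' "$cid" || true
fi
```

If the container has already been garbage-collected on the node, the last `docker inspect` fails and is ignored; the Job's next scheduled run would then be the place to capture events while they are still fresh.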