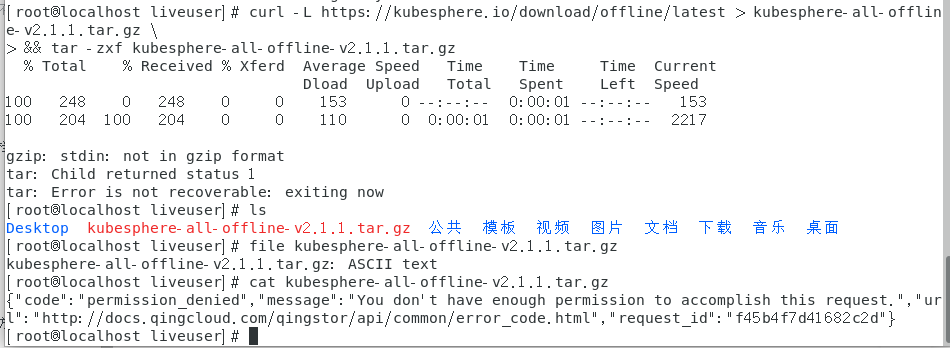

Download failed

{"code":"permission_denied","message":"You don't have enough permission to accomplish this request.","url":"http://docs.qingcloud.com/qingstor/api/common/error_code.html","request_id":"f45b4f7d41682c2d"}

Offline Installation of KubeSphere 2.1.1 and Kubernetes

Feynman

liujinhua_9512, this should be fixed now. Please try downloading it again.

- Edited

2020-03-31 17:07:36,104 p=17663 u=root | fatal: [node02 -> 10.8.230.11]: FAILED! => {

"attempts": 4,

"changed": true,

"cmd": [

"/usr/bin/docker",

"pull",

"10.8.230.11:5000/mirrorgooglecontainers/pause-amd64:3.1"

],

"delta": "0:00:00.063288",

"end": "2020-03-31 17:07:36.083266",

"rc": 1,

"start": "2020-03-31 17:07:36.019978"

}

STDERR:

Error response from daemon: Get https://10.8.230.11:5000/v2/: http: server gave HTTP response to HTTPS client

MSG:

An error occurred during the upgrade.

The registry on the master node is served over plain HTTP.

How can I work around this?
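A note for readers who hit the same `server gave HTTP response to HTTPS client` error: a common way to handle it is to list the registry under `insecure-registries` in `/etc/docker/daemon.json` on every node that pulls from it, and then restart Docker. The sketch below shows only the JSON merge. The function name `add_insecure_registry` is hypothetical, and the registry address `10.8.230.11:5000` is taken from the log above; whether this is the right fix for the installer's upgrade path has not been confirmed in this thread.

```python
import json


def add_insecure_registry(daemon_json: str, registry: str) -> str:
    """Return daemon.json text with `registry` listed under insecure-registries.

    `daemon_json` is the current file content ("" if the file does not exist).
    Other settings already present in the file are preserved.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    registries = config.setdefault("insecure-registries", [])
    if registry not in registries:
        registries.append(registry)
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Registry address taken from the failing `docker pull` in the log.
    print(add_insecure_registry("", "10.8.230.11:5000"))
```

The resulting text would go into `/etc/docker/daemon.json` on each node, followed by a restart of the Docker daemon (for example `systemctl restart docker`) before retrying.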

/etc/docker/registry # cat config.yml

    version: 0.1
    log:
      fields:
        service: registry
    storage:
      cache:
        blobdescriptor: inmemory
      filesystem:
        rootdirectory: /var/lib/registry
    http:
      addr: :5000
      headers:
        X-Content-Type-Options: [nosniff]
    health:
      storagedriver:
        enabled: true
        interval: 10s
        threshold: 3

Hello, the offline installation in Multi-node mode fails with this error:

Cauchy

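Judging by the error, this image cannot be found. Could the machine's disk be too small, so that the images were not fully imported? Please check that, then delete the *.tmp files under scripts/os and retry.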
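The two checks suggested above can be sketched as follows. This is only an illustration: the helper names `free_gib` and `find_tmp_files` are hypothetical, and the path `scripts/os` is assumed to be relative to the unpacked installer directory.

```python
import shutil
from pathlib import Path
from typing import List


def free_gib(path: str) -> float:
    """Free space, in GiB, on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / 2**30


def find_tmp_files(os_dir: str) -> List[Path]:
    """List the *.tmp files directly under `os_dir` (e.g. scripts/os)."""
    return sorted(Path(os_dir).glob("*.tmp"))


if __name__ == "__main__":
    print(f"free space: {free_gib('.'):.1f} GiB")
    for tmp in find_tmp_files("scripts/os"):
        # Only lists the files; delete them yourself before retrying.
        print("leftover file:", tmp)
```

Run it from the installer directory; it only reports, so nothing is deleted automatically.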

seal82

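The installation failed; these environment packages cannot be installed.

All of these packages are present in the local apt_repo.

Trying them by hand one at a time, I found that python3-software-properties depends on python3-pycurl, and the pip_repo folder also contains the python3-pycurl package.

So python3-pycurl was evidently not installed correctly. Moreover, it is a Python 3 dependency, yet the installer does not seem to install pip3 at all, only pip27.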

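Cauchy

seal82, the system is probably not a fresh install from the official clean image, is it? This looks like a dependency conflict; apt dependency problems can be investigated and resolved with aptitude.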

https://blog.csdn.net/Davidietop/article/details/88934783

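seal82

Cauchy, this is a clean Ubuntu install with nothing else on it.

- For some reason I can no longer post images, so I will describe the problem in text.

- The error says that software-properties-common depends on python3-software-properties. That package is present in /kubeinstaller/apt_repo/16.04.6/iso, so I tried installing it manually with dpkg -i, which failed because it depends on python3-pycurl. I then went to /kubeinstaller/pip_repo/pip27/iso and found pycurl-7.19.0.tar.gz, but when I tried to extract it with sudo tar, it reported that all the files inside the archive are read-only and could not be extracted. This raises two problems:

- 1. This is a Python 3 dependency, but /kubeinstaller/pip_repo/ contains only a pip27 folder and no pip3 folder, so there is no local pip3 repository.

- 2. Even if some internal mechanism lets Python 3 share the pip27 folder, pycurl-7.19.0.tar.gz is an entirely read-only archive that cannot be opened at all.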
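One thing that may be worth checking here: on Ubuntu, `python3-pycurl` is the name of an apt (.deb) package, so `dpkg` would look for it among .deb files rather than in a pip source tarball. The sketch below is a rough, name-only check of which dependencies of a package have no matching .deb in a directory listing. The function names are hypothetical, version constraints are ignored, and the sample `Depends` value and file name are made up for illustration.

```python
import re
from typing import Iterable, List


def parse_depends(depends: str) -> List[List[str]]:
    """Split a Debian `Depends:` value into groups of alternative package names.

    "a (>= 1.0), b | c" -> [["a"], ["b", "c"]]
    """
    groups = []
    for clause in depends.split(","):
        names = [
            # Drop version constraints "(>= 1.0)" and arch qualifiers ":any".
            re.sub(r"\s*\(.*?\)", "", alt).strip().split(":")[0]
            for alt in clause.split("|")
        ]
        names = [n for n in names if n]
        if names:
            groups.append(names)
    return groups


def missing_dependencies(depends: str, deb_files: Iterable[str]) -> List[str]:
    """Return dependency groups that no file in `deb_files` satisfies by name.

    .deb files are conventionally named <package>_<version>_<arch>.deb, so the
    package name is the part before the first underscore.
    """
    available = {name.split("_")[0] for name in deb_files}
    return [
        " | ".join(group)
        for group in parse_depends(depends)
        if not any(pkg in available for pkg in group)
    ]


if __name__ == "__main__":
    # Hypothetical Depends value and file listing, for illustration only.
    depends = "python3-pycurl, python3:any (>= 3.5)"
    debs = ["python3_3.5.1-3_amd64.deb"]
    print(missing_dependencies(depends, debs))
```

The real `Depends` value of a package file can be read with `dpkg-deb -f <file>.deb Depends`, and the file names would come from listing the apt_repo directory.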

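Feynman

If the offline Installer is not working smoothly for you, we recommend installing K8s yourself first and then following "Offline Installation of KubeSphere on K8s". That is much more convenient and also avoids problems with dependency packages missing from the OS.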

- Edited

A three-node installation on CentOS 7.5 fails with the following error:

TASK [prepare/nodes : GlusterFS | Installing glusterfs-client (YUM)] ********************************************************************************************************************************

Tuesday 14 April 2020 19:30:35 +0800 (0:00:00.429) 0:00:47.080 *********

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (5 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (5 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (5 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (4 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (4 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (4 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (3 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (3 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (3 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (2 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (2 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (2 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (1 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (1 retries left).

FAILED - RETRYING: GlusterFS | Installing glusterfs-client (YUM) (1 retries left).

fatal: [node2]: FAILED! => {

"attempts": 5,

"changed": false,

"rc": 1,

"results": [

"Loaded plugins: langpacks\nResolving Dependencies\n--> Running transaction check\n---> Package glusterfs-fuse.x86_64 0:3.12.2-47.2.el7 will be installed\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Running transaction check\n---> Package glusterfs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-client-xlators.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-client-xlators.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-libs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-cli-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-libs.x86_64 0:3.12.2-47.2.el7 will be an update\n--> Finished Dependency Resolution\n You could try using --skip-broken to work around the problem\n You could try running: rpm -Va --nofiles --nodigest\n"

]

}

MSG:

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-cli-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-libs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-libs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-libs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-client-xlators-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-client-xlators-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7

fatal: [node1]: FAILED! => {

"attempts": 5,

"changed": false,

"rc": 1,

"results": [

"Loaded plugins: langpacks\nResolving Dependencies\n--> Running transaction check\n---> Package glusterfs-fuse.x86_64 0:3.12.2-47.2.el7 will be installed\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Running transaction check\n---> Package glusterfs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-client-xlators.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-client-xlators.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-libs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-cli-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-libs.x86_64 0:3.12.2-47.2.el7 will be an update\n--> Finished Dependency Resolution\n You could try using --skip-broken to work around the problem\n You could try running: rpm -Va --nofiles --nodigest\n"

]

}

MSG:

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-cli-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-libs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-libs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-libs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-client-xlators-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-client-xlators-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7

fatal: [master]: FAILED! => {

"attempts": 5,

"changed": false,

"rc": 1,

"results": [

"Loaded plugins: langpacks\nResolving Dependencies\n--> Running transaction check\n---> Package glusterfs-fuse.x86_64 0:3.12.2-47.2.el7 will be installed\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Processing Dependency: glusterfs(x86-64) = 3.12.2-47.2.el7 for package: glusterfs-fuse-3.12.2-47.2.el7.x86_64\n--> Running transaction check\n---> Package glusterfs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-client-xlators.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-api-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-client-xlators.x86_64 0:3.12.2-47.2.el7 will be an update\n---> Package glusterfs-libs.x86_64 0:3.8.4-53.el7.centos will be updated\n--> Processing Dependency: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos for package: glusterfs-cli-3.8.4-53.el7.centos.x86_64\n---> Package glusterfs-libs.x86_64 0:3.12.2-47.2.el7 will be an update\n--> Finished Dependency Resolution\n You could try using --skip-broken to work around the problem\n** Found 1 pre-existing rpmdb problem(s), 'yum check' output follows:\npython-requests-2.6.0-7.el7_7.noarch has missing requires of python-urllib3 >= ('0', '1.10.2', '1')\n"

]

}

MSG:

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-cli-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-libs-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-libs(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-libs-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-libs(x86-64) = 3.12.2-47.2.el7

Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)

Requires: glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Removing: glusterfs-client-xlators-3.8.4-53.el7.centos.x86_64 (@anaconda)

glusterfs-client-xlators(x86-64) = 3.8.4-53.el7.centos

Updated By: glusterfs-client-xlators-3.12.2-47.2.el7.x86_64 (bash)

glusterfs-client-xlators(x86-64) = 3.12.2-47.2.el7

PLAY RECAP ******************************************************************************************************************************************************************************************

master : ok=8 changed=4 unreachable=0 failed=1

node1 : ok=9 changed=7 unreachable=0 failed=1

node2 : ok=9 changed=7 unreachable=0 failed=1

Tuesday 14 April 2020 19:32:30 +0800 (0:01:54.751) 0:02:41.831 *********

===============================================================================

prepare/nodes : GlusterFS | Installing glusterfs-client (YUM) ------------------------------------------------------------------------------------------------------------------------------ 114.75s

prepare/nodes : Ceph RBD | Installing ceph-common (YUM) ------------------------------------------------------------------------------------------------------------------------------------- 22.55s

prepare/nodes : KubeSphere| Installing JQ (YUM) ---------------------------------------------------------------------------------------------------------------------------------------------- 6.85s

prepare/nodes : Copy admin kubeconfig to root user home -------------------------------------------------------------------------------------------------------------------------------------- 2.89s

download : Download items -------------------------------------------------------------------------------------------------------------------------------------------------------------------- 2.38s

download : Sync container -------------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.95s

prepare/nodes : Install kubectl bash completion ---------------------------------------------------------------------------------------------------------------------------------------------- 1.54s

prepare/nodes : Create kube config dir ------------------------------------------------------------------------------------------------------------------------------------------------------- 1.43s

prepare/nodes : Set kubectl bash completion file --------------------------------------------------------------------------------------------------------------------------------------------- 1.21s

fetch config --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.08s

modify resolv.conf --------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 1.01s

prepare/nodes : Fetch /etc/os-release -------------------------------------------------------------------------------------------------------------------------------------------------------- 0.71s

Get the tag date ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 0.59s

modify resolv.conf --------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 0.44s

prepare/nodes : Ceph RBD | Installing ceph-common (APT) -------------------------------------------------------------------------------------------------------------------------------------- 0.43s

download : include_tasks --------------------------------------------------------------------------------------------------------------------------------------------------------------------- 0.43s

prepare/nodes : GlusterFS | Installing glusterfs-client (APT) -------------------------------------------------------------------------------------------------------------------------------- 0.43s

prepare/nodes : KubeSphere | Installing JQ (APT) --------------------------------------------------------------------------------------------------------------------------------------------- 0.34s

kubesphere-defaults : Configure defaults ----------------------------------------------------------------------------------------------------------------------------------------------------- 0.28s

set dev version ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 0.27s

failed!
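Reading the yum messages above: the preinstalled (`@anaconda`) glusterfs-api and glusterfs-cli 3.8.4 packages require exactly version 3.8.4-53.el7.centos of glusterfs, glusterfs-libs and glusterfs-client-xlators, while installing glusterfs-fuse 3.12.2 tries to update those three to 3.12.2-47.2.el7, so the transaction cannot be resolved. The sketch below just pulls the blocking packages out of such output; `blocking_packages` is a hypothetical helper, and whether to remove or upgrade the old packages depends on your environment.

```python
import re
from typing import Dict, List, Optional

ERROR_RE = re.compile(r"Error: Package: (\S+) \((\S+)\)")
REQUIRES_RE = re.compile(r"Requires: (.+)")


def blocking_packages(yum_output: str) -> Dict[str, List[str]]:
    """Map each package named in an `Error: Package:` line to the
    requirements listed for it in the following `Requires:` lines."""
    blockers: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in yum_output.splitlines():
        line = raw.strip()
        match = ERROR_RE.match(line)
        if match:
            current = match.group(1)
            blockers.setdefault(current, [])
            continue
        match = REQUIRES_RE.match(line)
        if match and current is not None:
            blockers[current].append(match.group(1))
    return blockers


if __name__ == "__main__":
    # Two error entries copied from the log above.
    sample = """\
Error: Package: glusterfs-api-3.8.4-53.el7.centos.x86_64 (@anaconda)
Requires: glusterfs(x86-64) = 3.8.4-53.el7.centos
Error: Package: glusterfs-cli-3.8.4-53.el7.centos.x86_64 (@anaconda)
Requires: glusterfs-libs(x86-64) = 3.8.4-53.el7.centos
"""
    for package, requirements in blocking_packages(sample).items():
        print(package, "->", requirements)
```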

**********************************

please refer to https://kubesphere.io/docs/v2.1/zh-CN/faq/faq-install/

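**********************************

ks-5937

The kubesphere-all-offline-v2.1.1.tar.gz offline package seems to be missing the flannel images. Does that mean only the default, calico, can be used?

Or is there a workaround? Which images does flannel need, so that I can add them back manually?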

2020-04-18 13:09:24,698 p=16068 u=xxxxxx | TASK [download : download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 )] *******************************************************************

2020-04-18 13:09:24,698 p=16068 u=xxxxxx | Saturday 18 April 2020 13:09:24 +0800 (0:00:00.115) 0:01:46.334 ********

2020-04-18 13:09:24,909 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (4 retries left).

2020-04-18 13:09:24,978 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (4 retries left).

2020-04-18 13:09:25,020 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (4 retries left).

2020-04-18 13:09:31,195 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (3 retries left).

2020-04-18 13:09:32,151 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (3 retries left).

2020-04-18 13:09:33,086 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (3 retries left).

2020-04-18 13:09:37,372 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (2 retries left).

2020-04-18 13:09:39,327 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (2 retries left).

2020-04-18 13:09:41,250 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (2 retries left).

2020-04-18 13:09:43,558 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (1 retries left).

2020-04-18 13:09:46,493 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (1 retries left).

2020-04-18 13:09:49,409 p=16068 u=xxxxxx | FAILED - RETRYING: download_container | Download image if required ( 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 ) (1 retries left).

2020-04-18 13:09:49,759 p=16068 u=xxxxxx | fatal: [k8s-m-202 -> k8s-m-202]: FAILED! => {

"attempts": 4,

"changed": true,

"cmd": [

"/usr/bin/docker",

"pull",

"192.168.1.202:5000/coreos/flannel-cni:v0.3.0"

],

"delta": "0:00:00.052470",

"end": "2020-04-18 13:09:49.745324",

"rc": 1,

"start": "2020-04-18 13:09:49.692854"

}

STDERR:

Error response from daemon: manifest for 192.168.1.202:5000/coreos/flannel-cni:v0.3.0 not found

MSG:

non-zero return code
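The log above shows only `coreos/flannel-cni:v0.3.0` as missing from the local registry at `192.168.1.202:5000`; other flannel images may be missing too and would surface the same way on later retries. As a sketch of adding an image back by hand, the helper below builds the usual pull, tag and push commands. `mirror_commands` is a hypothetical name, and `quay.io` as the upstream registry is an assumption that should be verified before use. The commands need a machine that can reach both the upstream registry and the local one; otherwise `docker save` and `docker load` can carry the image across.

```python
from typing import List


def mirror_commands(local_ref: str, upstream_registry: str) -> List[List[str]]:
    """Build the docker commands that copy one image into the local registry.

    `local_ref` is the reference the installer failed to pull, e.g.
    "192.168.1.202:5000/coreos/flannel-cni:v0.3.0".
    """
    # Everything after the first "/" is the repository and tag.
    _, _, repo_and_tag = local_ref.partition("/")
    upstream_ref = f"{upstream_registry}/{repo_and_tag}"
    return [
        ["docker", "pull", upstream_ref],
        ["docker", "tag", upstream_ref, local_ref],
        ["docker", "push", local_ref],
    ]


if __name__ == "__main__":
    # "quay.io" is an assumed upstream location, not confirmed in this thread.
    for cmd in mirror_commands(
        "192.168.1.202:5000/coreos/flannel-cni:v0.3.0", "quay.io"
    ):
        print(" ".join(cmd))
```

The script only prints the commands so they can be reviewed before being run.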