操作系统信息

aws ec2 linux

Kubernetes版本信息

v1.24.8-eks-ffeb93d

容器运行时

containerd

KubeSphere版本信息

v3.3.2。在aws 的 eks K8s上安装。

问题是什么

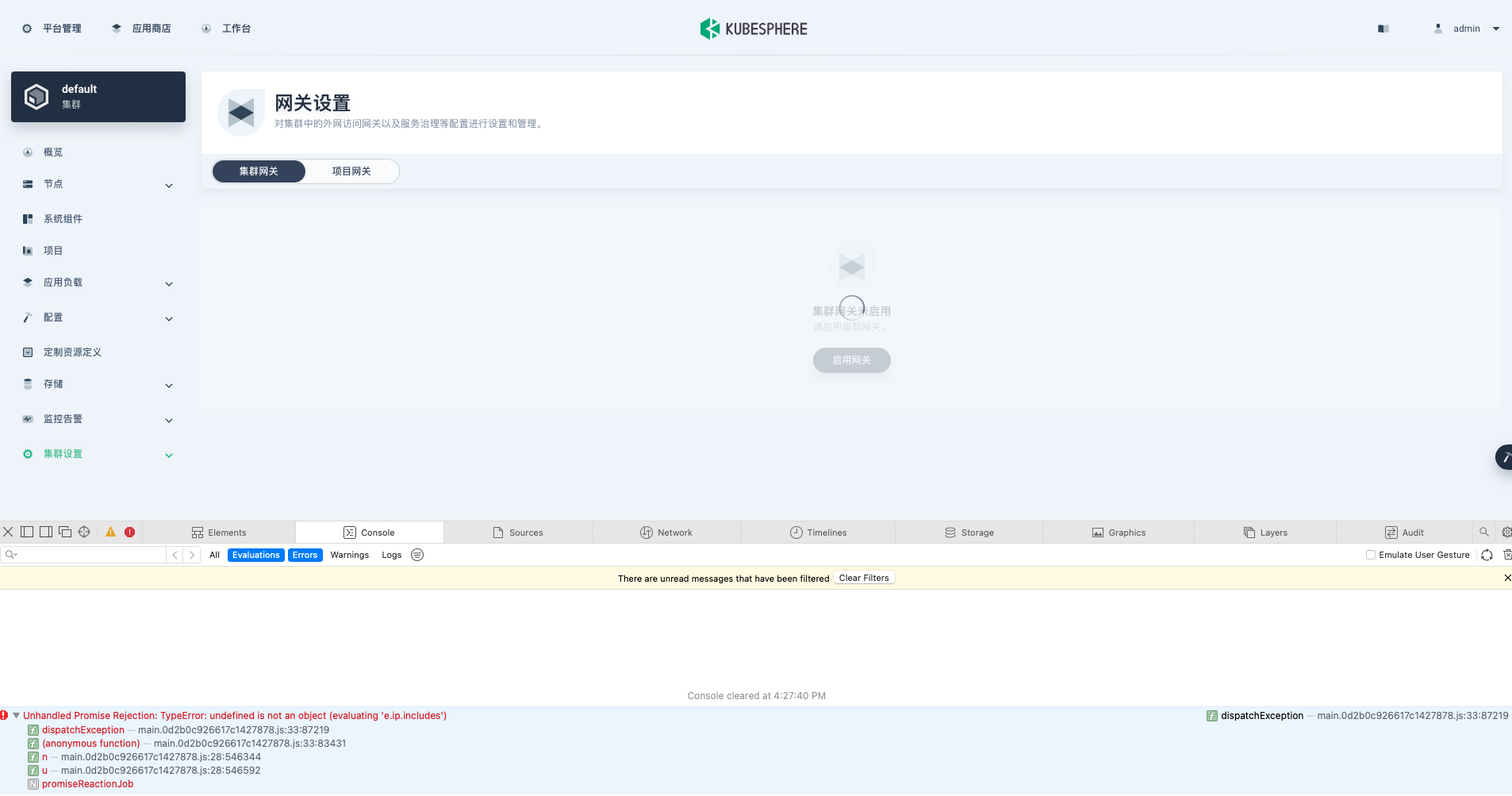

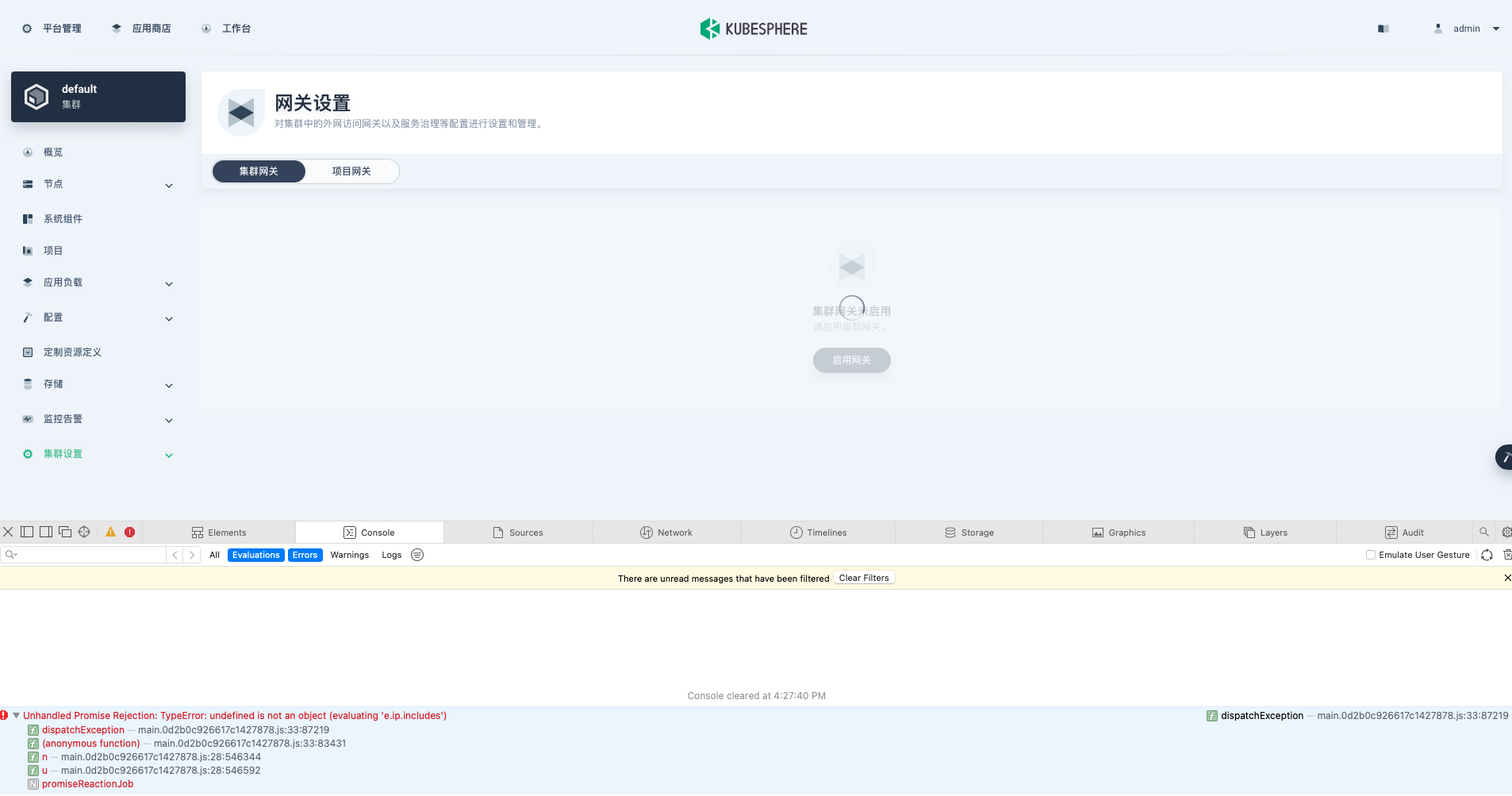

很久以前启用过【集群网关】,配置的是 AWS 的 NLB,平时发布路由的时候也都正常。就今天突然觉得访问速度有点慢,就想查询下【集群网关】页面看看配置,结果该页面一直转转转,开发者工具报下面的错误:

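Unhandled Promise Rejection: TypeError: undefined is not an object (evaluating 'e.ip.includes')

It looks like the KS front end does not handle this environment properly. If it worked before, check whether the configuration in the NLB has changed recently and whether the NLB is healthy.

Thanks for the reply. It did work before. Do you mean checking whether the NLB configuration in KS has been updated? If so, where can I find the NLB information configured in KS?

I checked the NLB configuration on AWS. Everything is normal and nobody has touched it.

Could someone help take a look?

It's urgent!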

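The front-end error is probably a field-parsing exception and should not affect functionality. You can start by checking the relevant resources:

    kubectl get gateway.gateway.kubesphere.io -A

Thanks for the reply. The front end cannot display the page at all now, which is already affecting our use: we cannot view some of the ingress data. Traffic itself is fine, though.

After running the command, the result is as follows: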

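    kubectl get gateway.gateway.kubesphere.io -A
    NAMESPACE                    NAME                                  AGE
    kubesphere-controls-system   kubesphere-router-kubesphere-system   196d

Try accessing the gateway's monitoring page directly at /gateways/cluster/kubesphere-router-kubesphere-system/monitors and see whether its detail page with monitoring loads.

Then paste the gateway's YAML so we can see where the parsing goes wrong.

frezes Accessing the gateway's monitoring page URL directly behaves the same as opening the Cluster Gateway page, and it reports the same error.

The gateway YAML is as follows: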

    apiVersion: v1
    items:
    - apiVersion: gateway.kubesphere.io/v1alpha1
      kind: Gateway
      metadata:
        annotations:
          kubesphere.io/annotations: Amazon EKS
          kubesphere.io/creator: admin
        creationTimestamp: "2022-09-04T10:41:44Z"
        finalizers:
        - uninstall-helm-release
        generation: 2
        name: kubesphere-router-kubesphere-system
        namespace: kubesphere-controls-system
        resourceVersion: "819254"
        uid: eae99de7-2671-4265-927c-cba926a3ab13
      spec:
        controller:
          replicas: 1
          scope: {}
        deployment:
          annotations:
            servicemesh.kubesphere.io/enabled: "true"
          replicas: 2
        service:
          annotations:
            service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
            service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
            "service.beta.kubernetes.io/aws-load-balancer-type\t": nlb
          type: LoadBalancer
      status:
        conditions:
        - lastTransitionTime: "2022-09-04T10:41:45Z"
          message: release was successfully upgraded
          reason: UpgradeSuccessful
          status: "True"
          type: Deployed
        - lastTransitionTime: "2022-09-04T10:41:45Z"
          status: "True"
          type: Initialized
        - lastTransitionTime: "2022-09-04T10:41:45Z"
          status: "False"
          type: Irreconcilable
        - lastTransitionTime: "2022-09-04T10:41:45Z"
          status: "False"
          type: ReleaseFailed
        deployedRelease:
          manifest: |
            ---
            apiVersion: gateway.kubesphere.io/v1alpha1
            kind: Nginx
            metadata:
              name: kubesphere-router-kubesphere-system-ingress
              namespace: kubesphere-controls-system
              ownerReferences:
              - apiVersion: gateway.kubesphere.io/v1alpha1
                blockOwnerDeletion: true
                controller: true
                kind: Gateway
                name: kubesphere-router-kubesphere-system
                uid: eae99de7-2671-4265-927c-cba926a3ab13
            spec:
              controller:
                addHeaders: {}
                admissionWebhooks:
                  enabled: false
                affinity:
                  podAntiAffinity:
                    preferredDuringSchedulingIgnoredDuringExecution:
                    - podAffinityTerm:
                        labelSelector:
                          matchExpressions:
                          - key: app.kubernetes.io/name
                            operator: In
                            values:
                            - ingress-nginx
                          - key: app.kubernetes.io/instance
                            operator: In
                            values:
                            - kubesphere-router-kubesphere-system-ingress
                          - key: app.kubernetes.io/component
                            operator: In
                            values:
                            - controller
                        topologyKey: kubernetes.io/hostname
                      weight: 100
                annotations:
                  servicemesh.kubesphere.io/enabled: "true"
                autoscaling:
                  enabled: false
                  maxReplicas: 11
                  minReplicas: 1
                  targetCPUUtilizationPercentage: 50
                  targetMemoryUtilizationPercentage: 50
                configAnnotations: {}
                configMapNamespace: ""
                customTemplate:
                  configMapKey: ""
                  configMapName: ""
                dnsConfig: {}
                electionID: ingress-controller-leader-kubesphere-router-kubesphere-system
                extraArgs: {}
                extraEnvs: []
                image:
                  digest: ""
                  pullPolicy: IfNotPresent
                  repository: kubesphere/nginx-ingress-controller
                  tag: v1.1.0
                ingressClass: nginx
                ingressClassResource:
                  default: false
                  enabled: false
                  parameters: {}
                kind: Deployment
                labels: {}
                metrics:
                  enabled: true
                  port: 10254
                  prometheusRule:
                    enabled: false
                  serviceMonitor:
                    enabled: true
                minAvailable: 1
                name: ""
                podAnnotations:
                  sidecar.istio.io/inject: true
                podLabels: {}
                proxySetHeaders: {}
                publishService:
                  enabled: true
                replicaCount: 2
                reportNodeInternalIp: false
                resources:
                  requests:
                    cpu: 100m
                    memory: 90Mi
                service:
                  annotations:
                    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
                    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
                    "service.beta.kubernetes.io/aws-load-balancer-type\t": nlb
                  enabled: true
                  externalIPs: []
                  labels: {}
                  loadBalancerSourceRanges: []
                  nodePorts:
                    http: ""
                    https: ""
                    tcp: {}
                    udp: {}
                  type: LoadBalancer
                tcp:
                  annotations: {}
                  configMapNamespace: ""
                tolerations: []
                topologySpreadConstraints: []
                udp:
                  annotations: {}
                  configMapNamespace: ""
                watchIngressWithoutClass: true
              fullnameOverride: kubesphere-router-kubesphere-system
              imagePullSecrets: []
          name: kubesphere-router-kubesphere-system
    kind: List
    metadata:
      resourceVersion: ""

koalawangyang

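Check again whether the AWS LB configuration is correct and whether the cloud load balancer driver package is configured correctly.

The front end reports this error because, in this situation, the gateway has no status.loadBalancer value. In LB access mode this value is taken directly from the corresponding Service's status.loadBalancer, so a missing value means the cloud provider has not assigned an IP address to the load balancer. That is why the LB configuration and the driver package need to be confirmed again.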
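One detail in the pasted YAML may be worth including in that configuration check: the annotation key `"service.beta.kubernetes.io/aws-load-balancer-type\t"` ends with a tab character, so it is not the same string as the key without the tab. Whether this has anything to do with the page error is not established in this thread. The following is only a minimal Python sketch for spotting such keys; the function name `suspicious_annotation_keys` is hypothetical, and the dictionary is copied by hand from `spec.service.annotations` in the Gateway YAML.

```python
def suspicious_annotation_keys(annotations):
    """Return annotation keys that contain whitespace or non-printable characters."""
    bad = []
    for key in annotations:
        # A tab, space, or newline inside a key usually means a copy-paste slip.
        if any(ch.isspace() or not ch.isprintable() for ch in key):
            bad.append(key)
    return bad


# Copied from spec.service.annotations in the Gateway YAML (assumption: no other keys exist).
service_annotations = {
    "service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled": "true",
    "service.beta.kubernetes.io/aws-load-balancer-nlb-target-type": "instance",
    "service.beta.kubernetes.io/aws-load-balancer-type\t": "nlb",
}

for key in suspicious_annotation_keys(service_annotations):
    # repr() makes the otherwise invisible tab show up as \t.
    print(repr(key))
```

Printing with `repr()` is a deliberate choice here, since a trailing tab is invisible in normal terminal output.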

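hongzhouzi The AWS LB configuration has never been modified manually; it has only ever been updated by adding routes through KS. It also worked before the upgrade, and the problem appeared only after upgrading KS to v3.3.2.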

koalawangyang

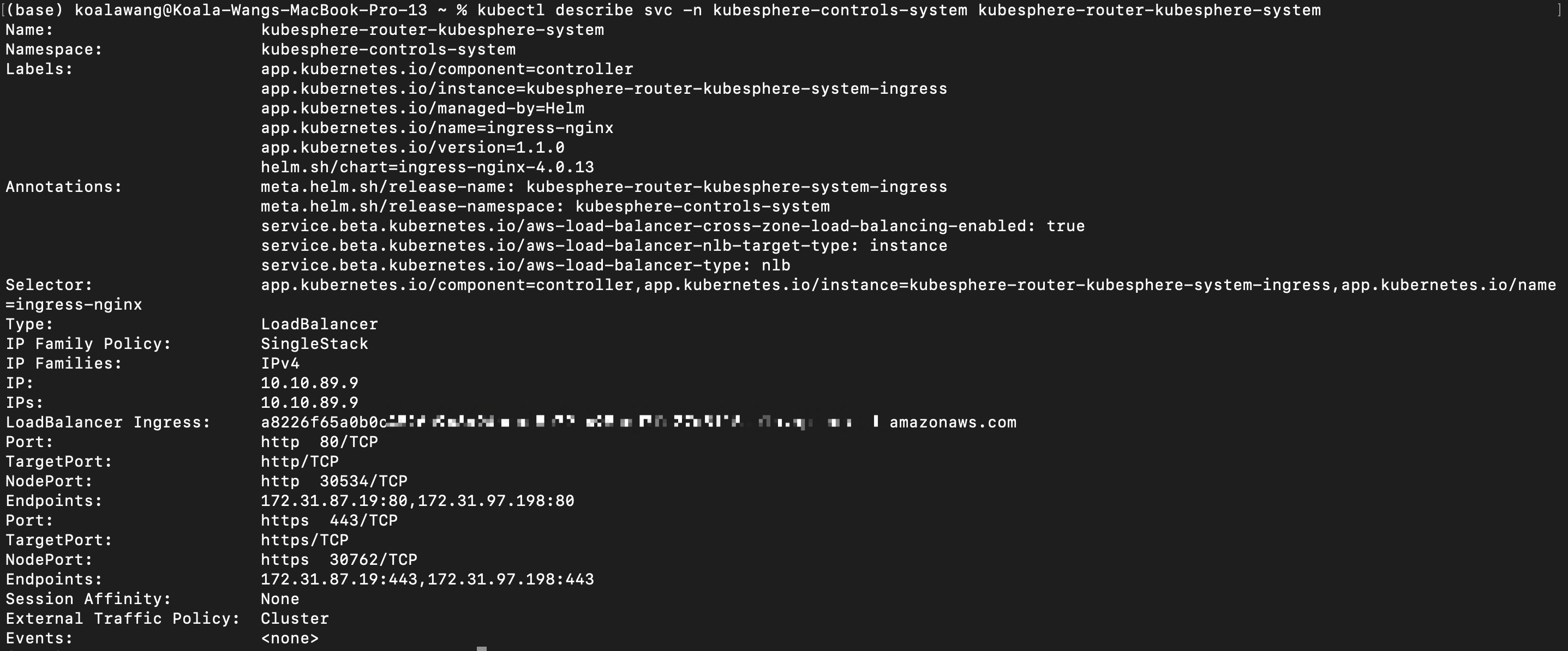

Run kubectl describe svc -n kubesphere-controls-system kubesphere-router-kubesphere-system and see whether the LoadBalancer Ingress field shows up.

hongzhouzi After running the command I can see the Ingress information, and it matches the actual setup.

koalawangyang

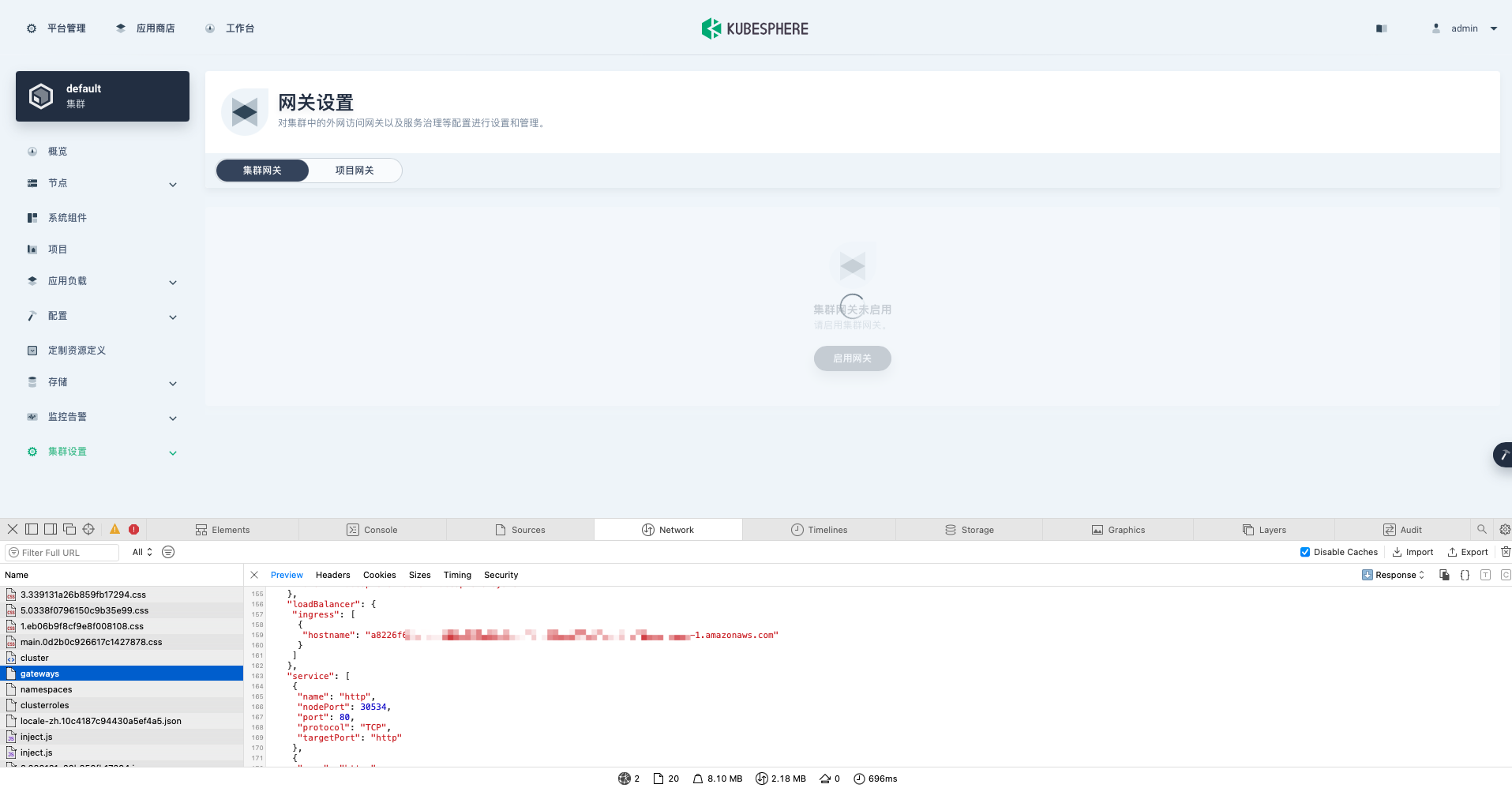

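OK, then that part is fine. On the gateway page, check whether status.loadBalancer contains data in the gateway object returned by the /kapis/gateway.kubesphere.io/v1alpha1/namespaces/kubesphere-system/gateways API request.

hongzhouzi Thanks for the reply. It does contain data, and the data matches the actual LB.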

koalawangyang

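It is probably a problem with how the front end fetches the data. I have opened an issue to track it, and it should be fixed in a later release.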
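The thread does not pin down the exact front-end defect. The error text (`e.ip.includes` evaluated on an undefined value) would be consistent with code that reads `ip` from each `status.loadBalancer.ingress` entry without checking that it exists. In the Kubernetes API an ingress entry may carry `hostname` instead of `ip`, which is typical of DNS-based load balancers such as those on AWS. The following is a minimal Python sketch of a reader that tolerates both shapes. It is an illustration under that assumption, not KubeSphere's code; the function name and the sample values are hypothetical.

```python
def load_balancer_addresses(obj):
    """Collect every address found under status.loadBalancer.ingress."""
    status = obj.get("status") or {}
    load_balancer = status.get("loadBalancer") or {}
    addresses = []
    for entry in load_balancer.get("ingress") or []:
        # An entry may carry "ip", "hostname", or both; skip whatever is absent
        # instead of calling string methods on a missing value.
        for field in ("ip", "hostname"):
            value = entry.get(field)
            if value:
                addresses.append(value)
    return addresses


# Hypothetical objects for illustration; the hostname and IP are placeholders.
dns_based = {"status": {"loadBalancer": {"ingress": [{"hostname": "example-nlb.elb.example.com"}]}}}
ip_based = {"status": {"loadBalancer": {"ingress": [{"ip": "192.0.2.10"}]}}}
no_status = {"metadata": {"name": "kubesphere-router-kubesphere-system"}}

print(load_balancer_addresses(dns_based))
print(load_balancer_addresses(ip_based))
print(load_balancer_addresses(no_status))
```

Returning an empty list for an object without `status.loadBalancer` keeps the caller free of special cases: a page could render "no address assigned yet" rather than fail.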

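hongzhouzi Thanks. If it is convenient, could you tell me roughly which version will include the fix, or reply here once it is fixed? Many thanks.

koalawangyang Could we connect on WeChat or QQ? I also run KubeSphere on EKS and hit the same problem as you, so it would be good to compare notes.

koalawangyang Has this problem been solved? I also run KS integrated with EKS. Since enabling the Cluster Gateway in LoadBalancer mode, I can no longer open the Cluster Gateway page, and the link to the monitoring page does not work either.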