
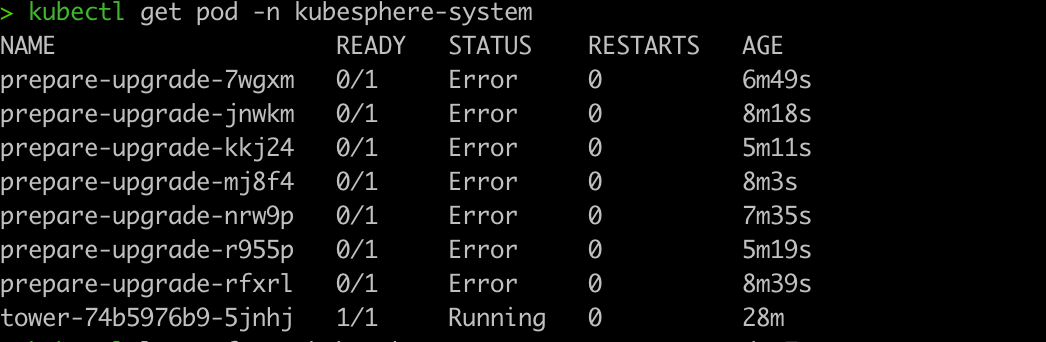

The upgrade fails with an error: the resource in question is not managed by Helm.

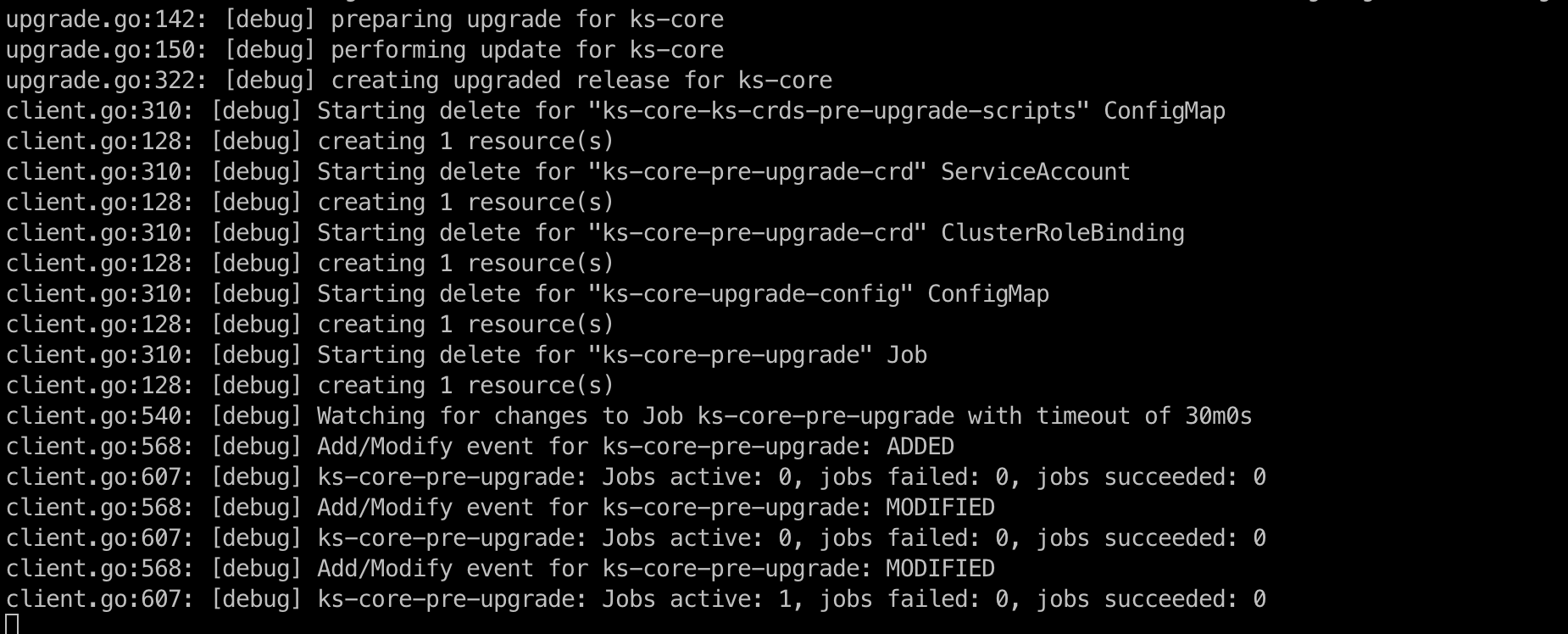

upgrade.go:142: [debug] preparing upgrade for ks-core

upgrade.go:150: [debug] performing update for ks-core

Error: UPGRADE FAILED: rendered manifests contain a resource that already exists. Unable to continue with update: GlobalRole "anonymous" in namespace "" exists and cannot be imported into the current release: invalid ownership metadata; label validation error: missing key "app.kubernetes.io/managed-by": must be set to "Helm"; annotation validation error: missing key "meta.helm.sh/release-name": must be set to "ks-core"; annotation validation error: missing key "meta.helm.sh/release-namespace": must be set to "kubesphere-system"

helm.go:84: [debug] GlobalRole "anonymous" in namespace "" exists and cannot be imported into the current release: invalid ownership metadata; label validation error: missing key "app.kubernetes.io/managed-by": must be set to "Helm"; annotation validation error: missing key "meta.helm.sh/release-name": must be set to "ks-core"; annotation validation error: missing key "meta.helm.sh/release-namespace": must be set to "kubesphere-system"

rendered manifests contain a resource that already exists. Unable to continue with update

helm.sh/helm/v3/pkg/action.(\*Upgrade).performUpgrade

helm.sh/helm/v3/pkg/action/upgrade.go:301

helm.sh/helm/v3/pkg/action.(\*Upgrade).RunWithContext

helm.sh/helm/v3/pkg/action/upgrade.go:151

main.newUpgradeCmd.func2

helm.sh/helm/v3/cmd/helm/upgrade.go:199

github.com/spf13/cobra.(\*Command).execute

github.com/spf13/cobra@v1.5.0/command.go:872

github.com/spf13/cobra.(\*Command).ExecuteC

github.com/spf13/cobra@v1.5.0/command.go:990

github.com/spf13/cobra.(\*Command).Execute

github.com/spf13/cobra@v1.5.0/command.go:918

main.main

helm.sh/helm/v3/cmd/helm/helm.go:83

runtime.main

runtime/proc.go:250

runtime.goexit

runtime/asm_arm64.s:1172

UPGRADE FAILED

main.newUpgradeCmd.func2

helm.sh/helm/v3/cmd/helm/upgrade.go:201

github.com/spf13/cobra.(\*Command).execute

github.com/spf13/cobra@v1.5.0/command.go:872

github.com/spf13/cobra.(\*Command).ExecuteC

github.com/spf13/cobra@v1.5.0/command.go:990

github.com/spf13/cobra.(\*Command).Execute

github.com/spf13/cobra@v1.5.0/command.go:918

main.main

helm.sh/helm/v3/cmd/helm/helm.go:83

runtime.main

runtime/proc.go:250

runtime.goexit

runtime/asm_arm64.s:1172
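The error message itself lists what Helm wants before it will adopt the existing resource: one label and two annotations on GlobalRole "anonymous". As a rough sketch, the snippet below parses that message and prints the matching `kubectl label` / `kubectl annotate` commands. The function name `adoption_commands` is made up for illustration. It only handles the `missing key ... must be set to ...` form seen in this log, and it assumes the lowercased kind (`globalrole`) is accepted by kubectl as the resource name on your cluster. It only prints the commands and never touches the cluster.

```python
import re
import shlex

# Error text copied from the log above.
ERROR = (
    'GlobalRole "anonymous" in namespace "" exists and cannot be imported into '
    'the current release: invalid ownership metadata; '
    'label validation error: missing key "app.kubernetes.io/managed-by": '
    'must be set to "Helm"; '
    'annotation validation error: missing key "meta.helm.sh/release-name": '
    'must be set to "ks-core"; '
    'annotation validation error: missing key "meta.helm.sh/release-namespace": '
    'must be set to "kubesphere-system"'
)

# Kind, name and namespace of the resource Helm refused to import.
RESOURCE_RE = re.compile(r'(\w+) "([^"]+)" in namespace "([^"]*)" exists')
# Each missing label/annotation and the value Helm expects.
MISSING_RE = re.compile(
    r'(label|annotation) validation error: '
    r'missing key "([^"]+)": must be set to "([^"]+)"'
)


def adoption_commands(error_text):
    """Return kubectl commands that would add the metadata Helm asks for."""
    res = RESOURCE_RE.search(error_text)
    if res is None:
        return []
    kind, name, namespace = res.groups()
    # Assumption: the lowercased kind is a valid kubectl resource name.
    target = [kind.lower(), name]
    if namespace:  # empty namespace means a cluster-scoped resource
        target += ["-n", namespace]

    commands = []
    for what, key, value in MISSING_RE.findall(error_text):
        verb = "label" if what == "label" else "annotate"
        argv = ["kubectl", verb, *target, f"{key}={value}", "--overwrite"]
        commands.append(" ".join(shlex.quote(a) for a in argv))
    # The debug log repeats the message, so drop duplicates but keep order.
    return list(dict.fromkeys(commands))


if __name__ == "__main__":
    for cmd in adoption_commands(ERROR):
        print(cmd)
```

Review the printed commands before running them, and only apply them if the existing resource really should be owned by the `ks-core` release in `kubesphere-system`. If other resources hit the same error on the next upgrade attempt, feed that error message through the same function.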